A few people were asking me last week, “Hey Steve, what did you do over the Easter break?” and I told them “you know, just relaxed.” And that’s sort of true, but the real truth is I benchmarked the Radeon RX 7900 GRE and GeForce RTX 4070 Super across 58 different game configurations.

In that process, the idea was to take an in-depth look at rasterization, ray tracing, and upscaling performance. So as you can imagine, there’s a shipload of data to go over…

For this test we’ve used graphics card models selling at the MSRP. Representing the Radeon 7900 GRE is Gigabyte’s Gaming OC which can be had for $550 right now. Then for the RTX 4070 Super we have Gigabyte’s Eagle OC. This model is available for $600.

Nvidia is asking just shy of a 10% premium here, so it will be interesting to see if the GeForce GPU is worth the extra cash. For benchmarking we’re using our Ryzen 7 7800X3D test system with 32GB of DDR5-6000 CL30 memory on the Gigabyte X670E Aorus Master, using the latest BIOS revision.
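The “just shy of 10%” figure depends on which card is taken as the baseline. A minimal sketch of the arithmetic, using only the two prices quoted above (the function names are illustrative):

```python
def premium_pct(base_price: float, higher_price: float) -> float:
    """Percent extra paid for the dearer card, relative to the cheaper one."""
    return (higher_price / base_price - 1) * 100


def discount_pct(base_price: float, lower_price: float) -> float:
    """Percent saved on the cheaper card, relative to the dearer one."""
    return (1 - lower_price / base_price) * 100


GRE_PRICE = 550    # Gigabyte 7900 GRE Gaming OC, USD (from the text)
SUPER_PRICE = 600  # Gigabyte RTX 4070 Super Eagle OC, USD (from the text)

print(f"4070 Super premium over GRE: {premium_pct(GRE_PRICE, SUPER_PRICE):.1f}%")  # 9.1%
print(f"GRE discount vs 4070 Super: {discount_pct(SUPER_PRICE, GRE_PRICE):.1f}%")  # 8.3%
```

The same $50 gap reads as roughly a 9% premium from the Radeon side and roughly an 8% discount from the GeForce side.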

(A Ton of) Gaming Benchmarks

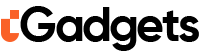

First up, we have a quick look at Rainbow Six Siege using competitive type quality settings with the medium preset. Here, the 4070 Super and 7900 GRE are neck-and-neck, delivering identical performance at all three tested resolutions. Overall, the performance is great; there’s just no clear winner here.

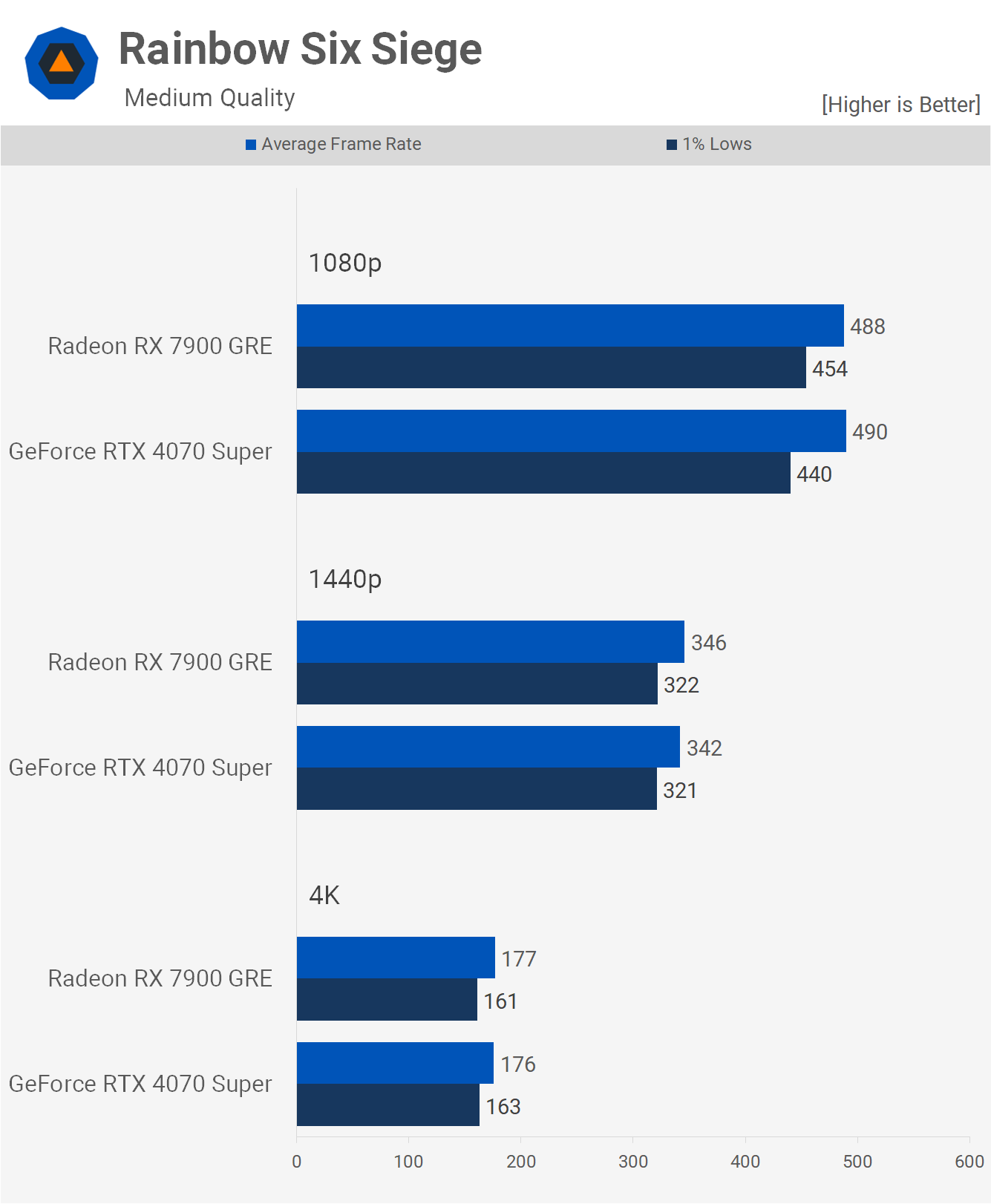

Next, we examine Counter-Strike 2, again tested with more competitive quality settings. At 1080p, the results are CPU limited using the 7800X3D, but at 1440p, the 7900 GRE pulls ahead by a significant 20% margin, as seen when comparing the average frame rate. Then, at 4K, that margin slightly extends to 22%, with the GRE averaging 283 fps.

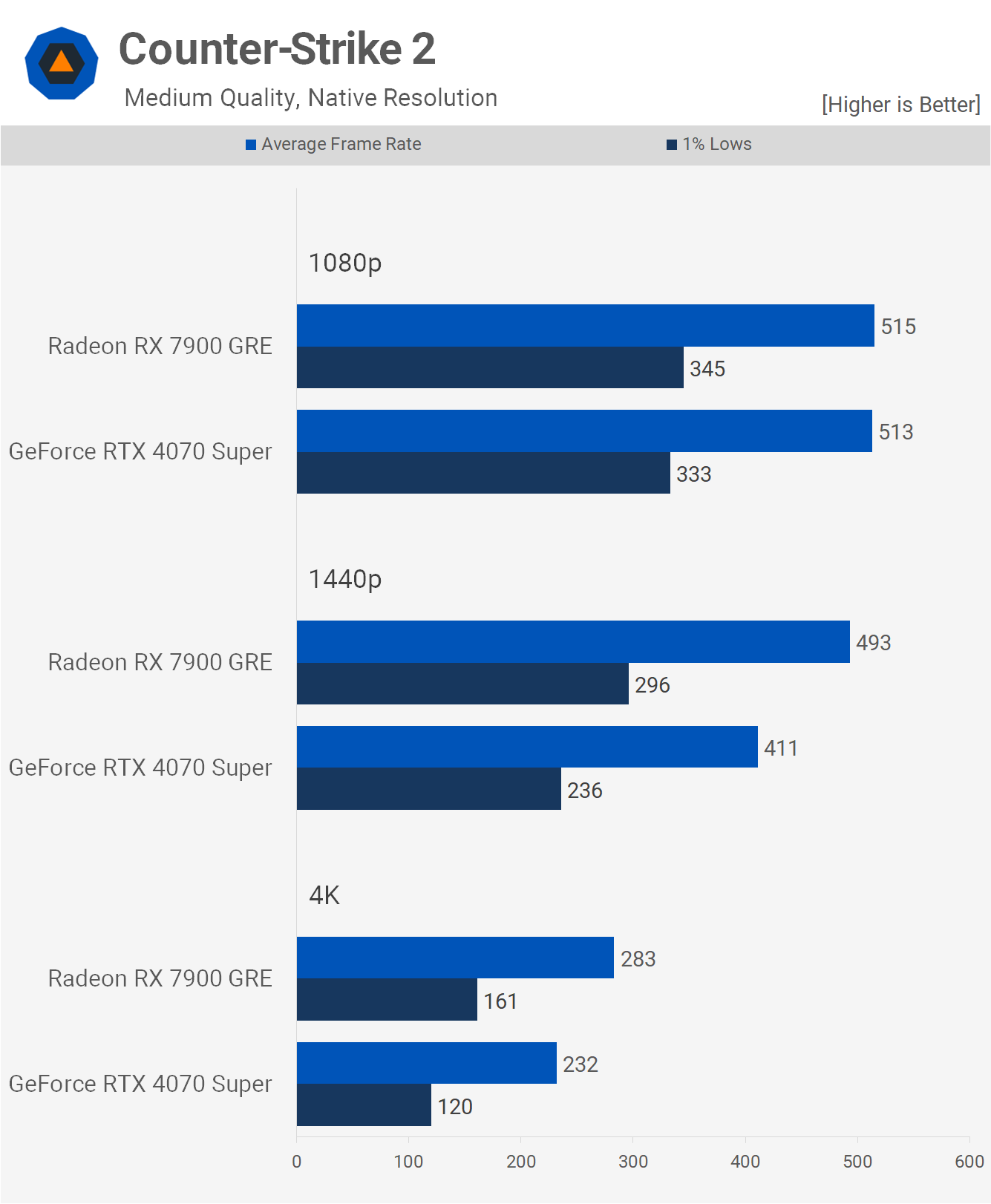

One of the newest games in this extensive benchmark is Helldivers 2. For this title, we’re using the ultra preset, as this isn’t a competitive shooter, and we believe gamers here would appreciate higher quality visuals.

At 1080p, the two GPUs were evenly matched. Then at 1440p the RTX 4070 Super takes off with a big 25% performance advantage, and it is a massive 34% faster at 4K, making the GeForce GPU the best option for this title without a doubt.

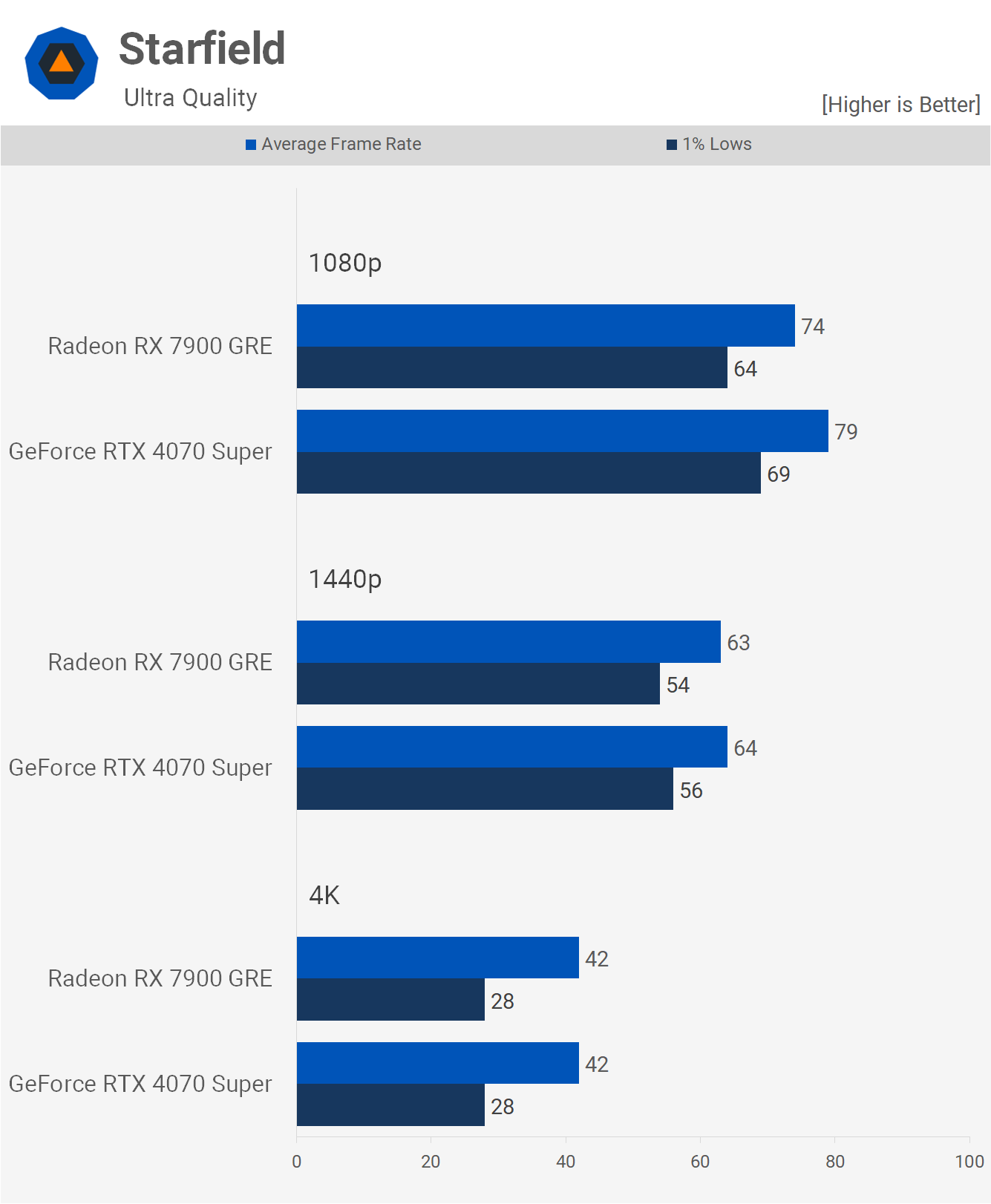

Moving on to Starfield, we find more competitive results here. The GeForce GPU was just 7% faster at 1080p, with similar results seen at 1440p, and then identical performance at 4K. These results are promising for the 7900 GRE as they suggest it is the better value product, but of course, there’s much more to examine.

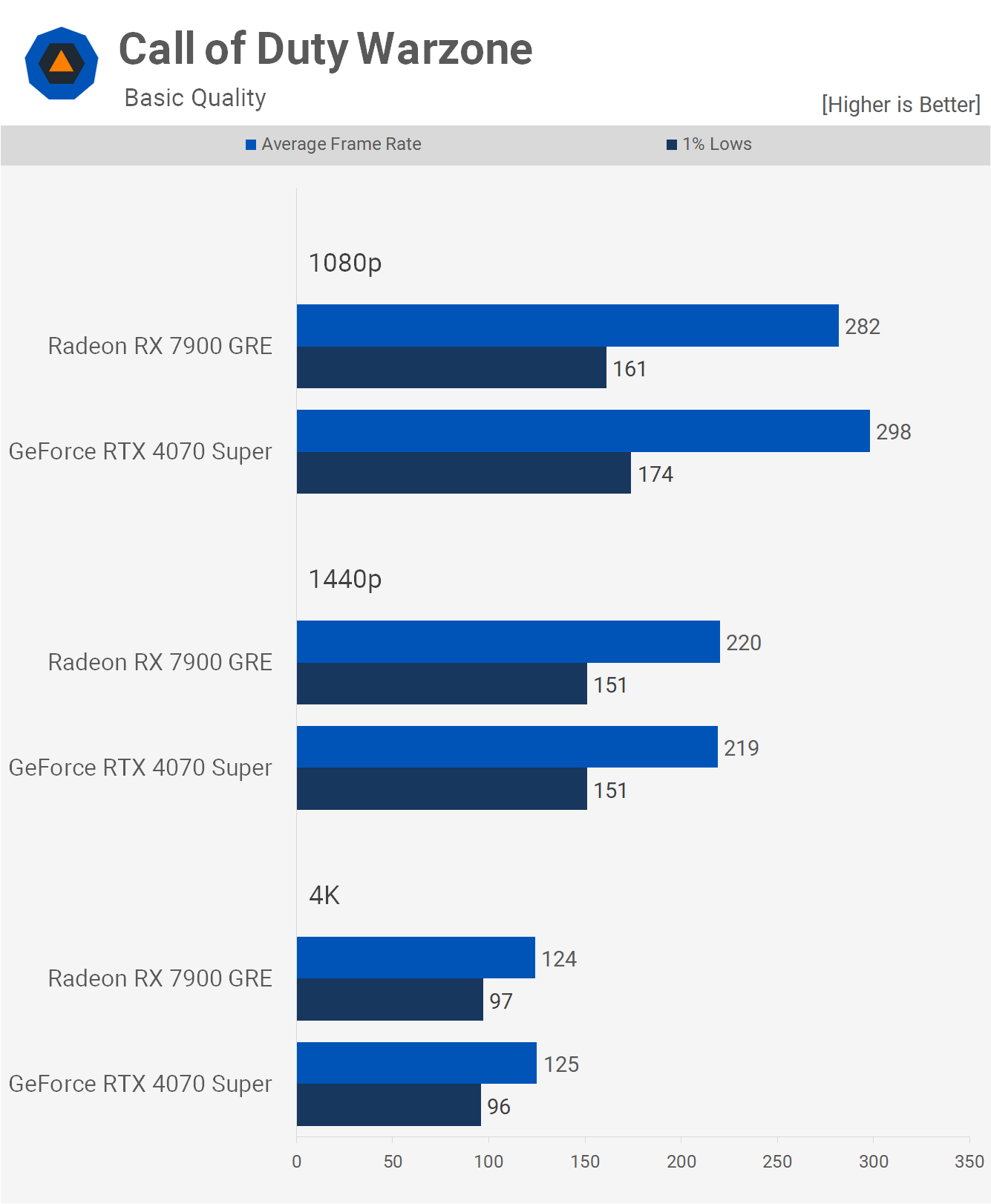

Next, we discuss Call of Duty Warzone, with yet more competitive results. The 4070 Super managed to edge out the GRE at 1080p, but beyond that, performance was nearly identical.

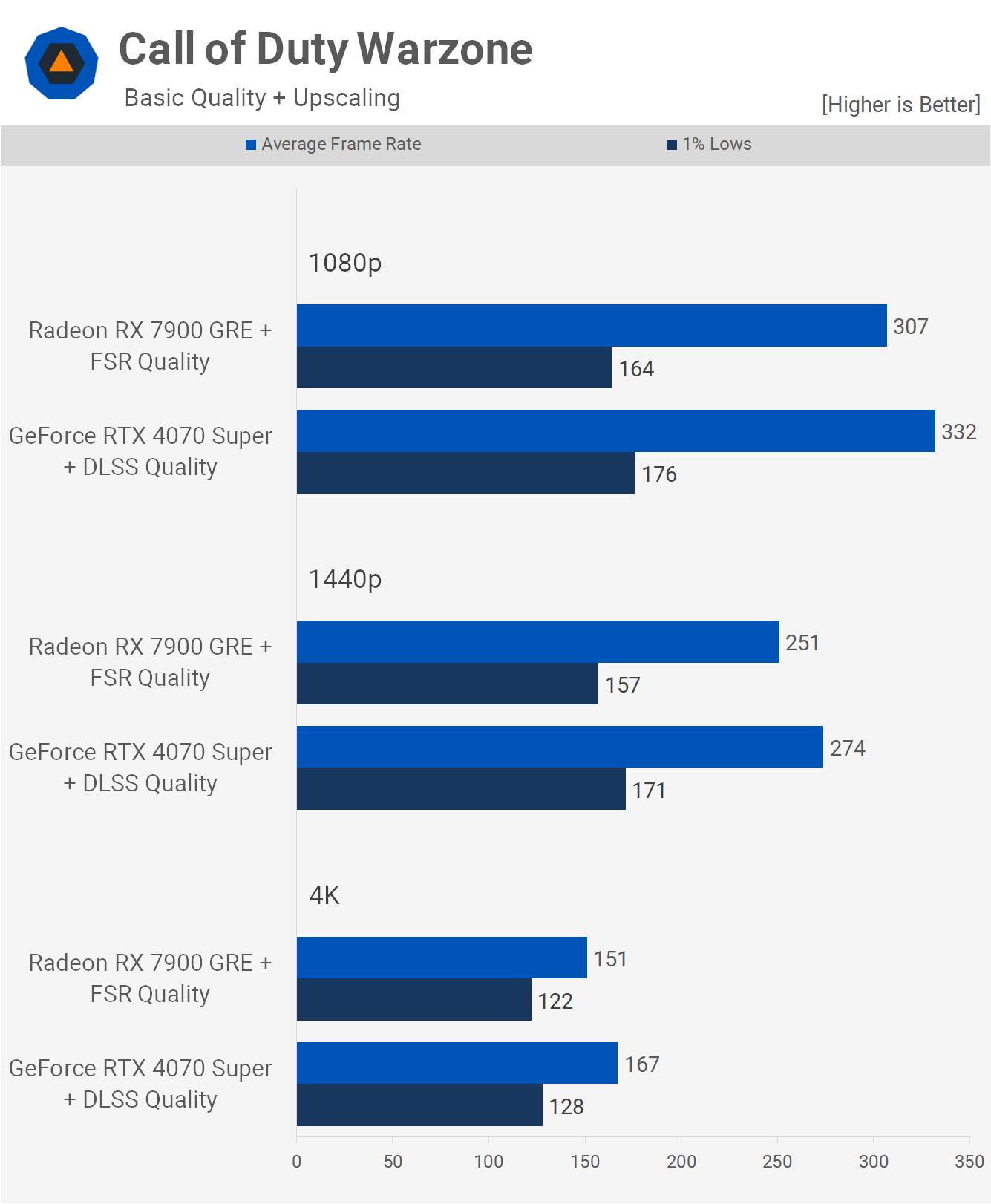

However, when we enable upscaling with DLSS for the GeForce GPU set to the quality mode, and FSR for the Radeon GPU, also set to the quality mode, the 4070 Super gains a slight performance advantage. At 1080p, the 4070 Super was 8% faster, 9% faster at 1440p, and 11% faster at 4K. These margins are not vast, but with upscaling enabled, the GeForce GPU does have a performance and possibly visual quality advantage.

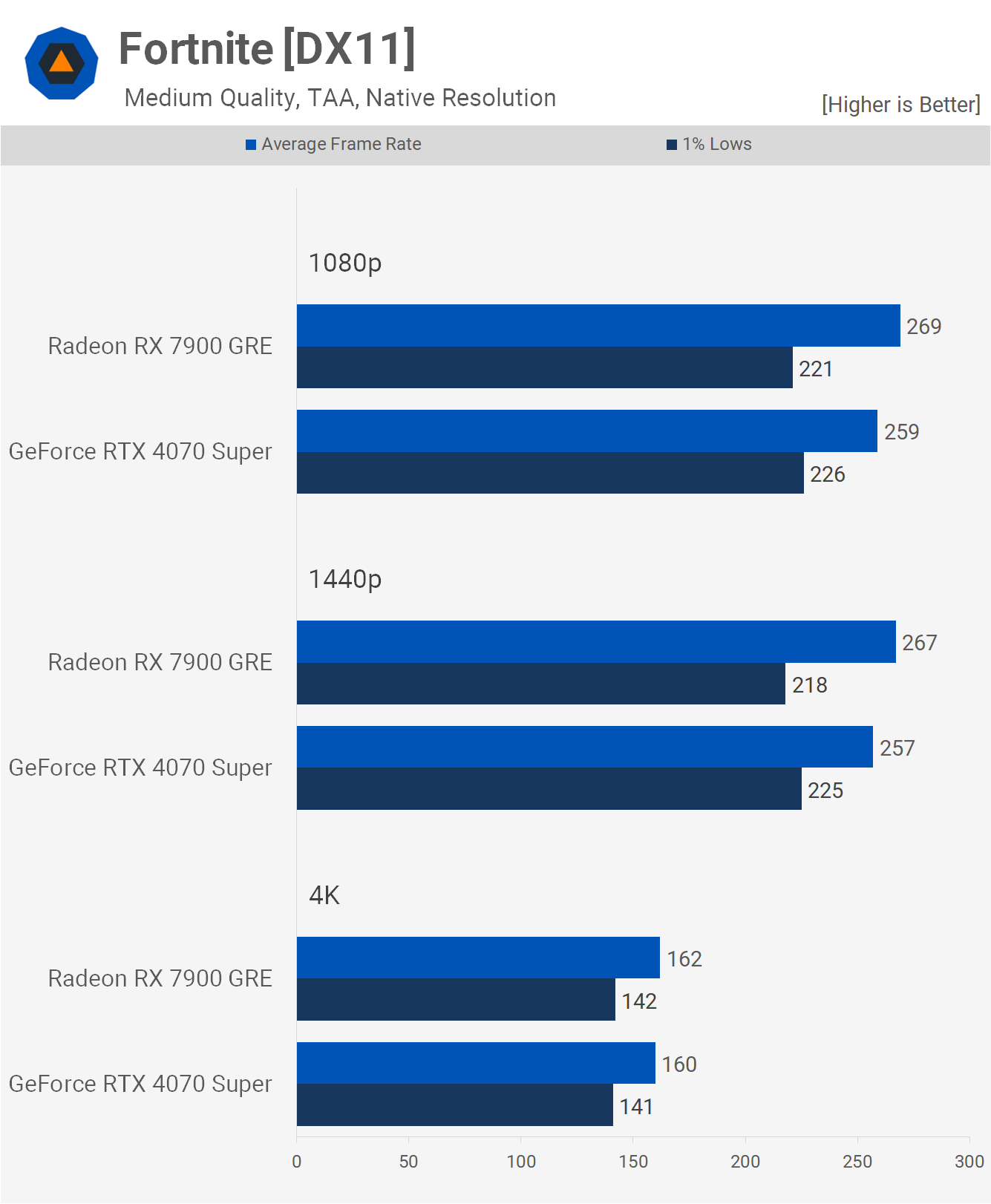

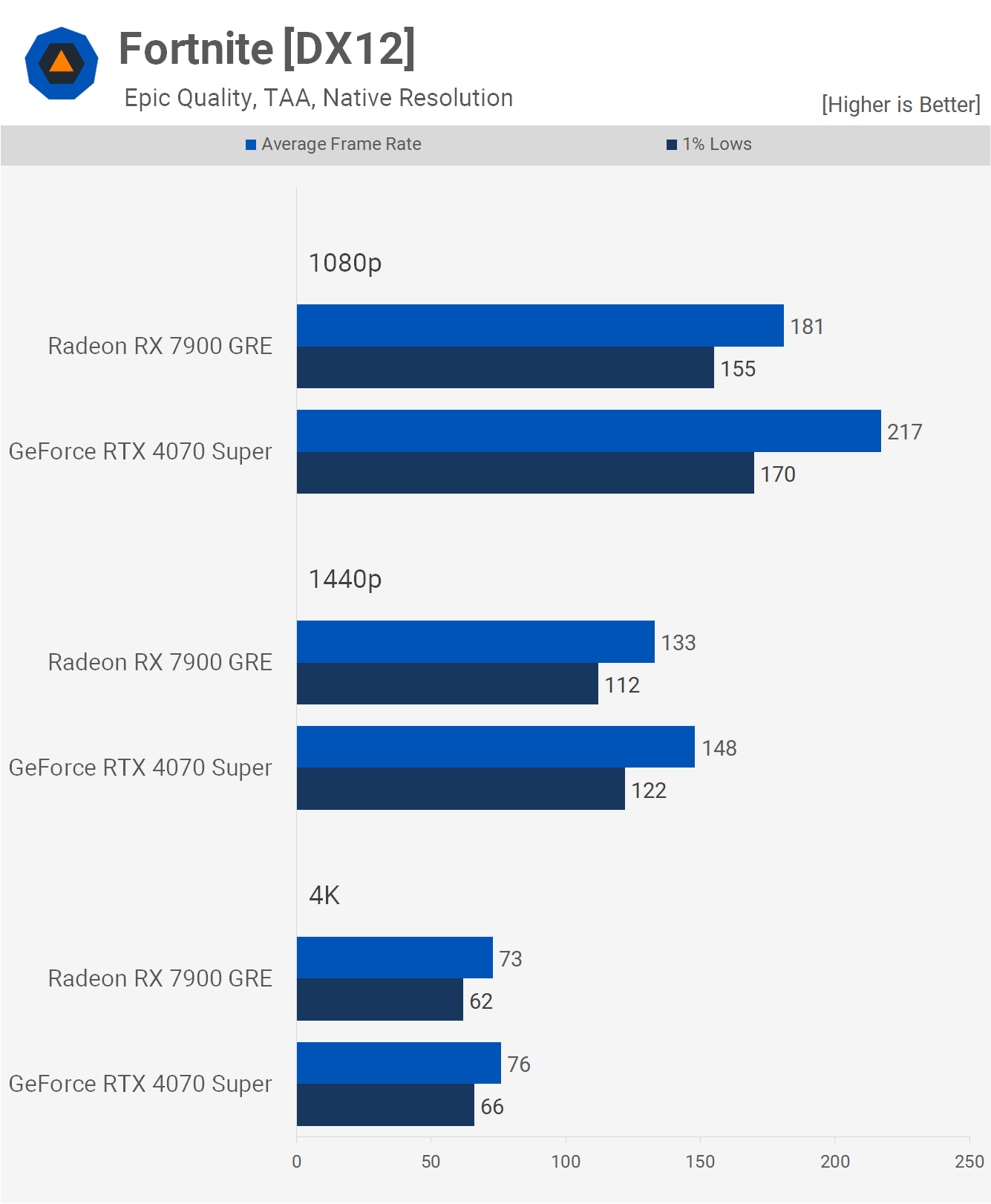

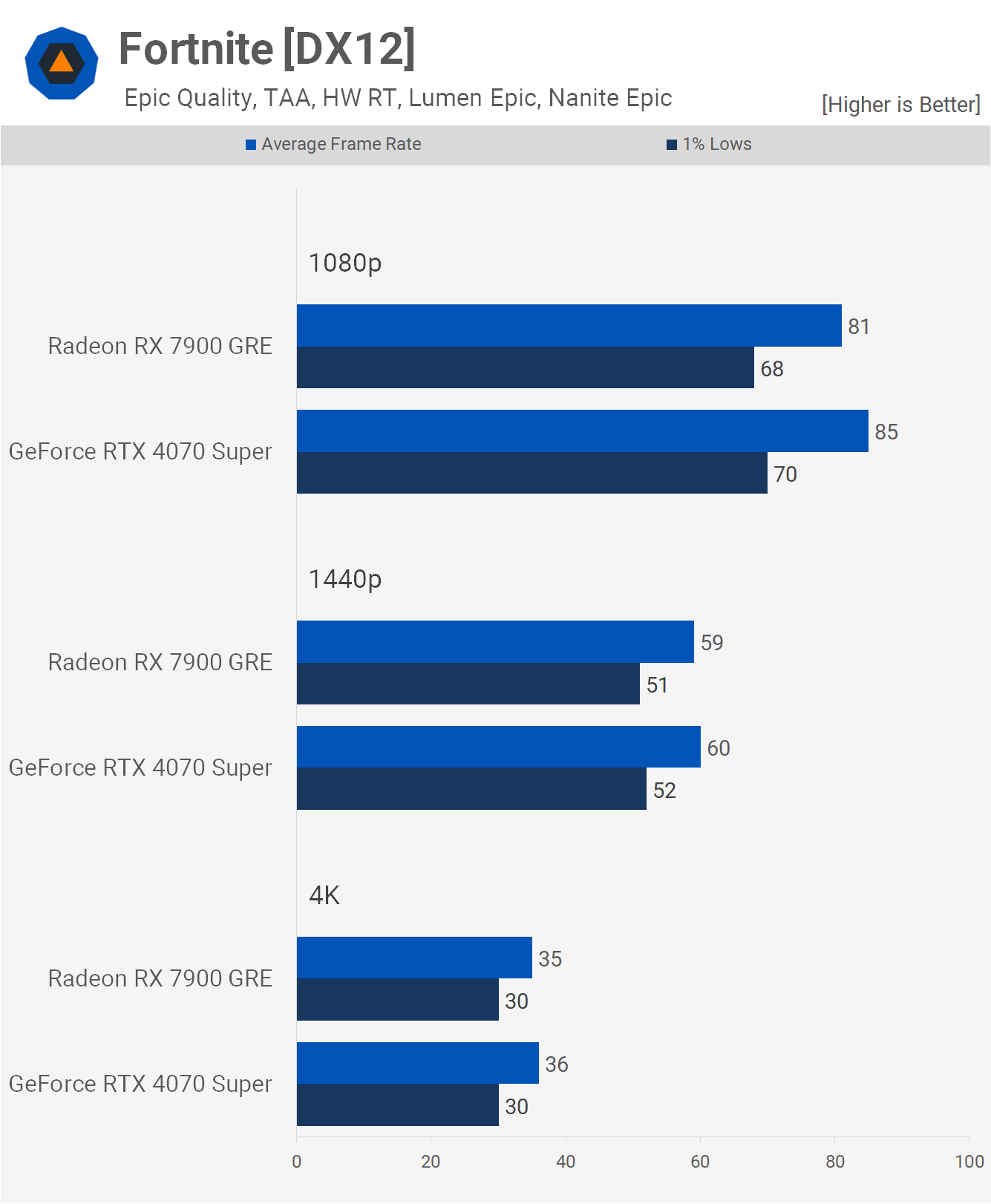

Next, we turn to Fortnite, starting with the more competitive type quality settings by using the medium preset. Just a few percent separate the GRE and 4070 Super at 1080p and 1440p, with the Radeon GPU slightly faster when comparing the average frame rate, while the 4070 Super had the edge for 1% lows. Then, at the 4K resolution, we’re looking at comparable performance.

But if we enable the epic quality settings using the DirectX 12 mode, the 4070 Super pulls ahead, offering an impressive 20% performance gain at 1080p and then an 11% boost at 1440p, before the results become nearly identical at 4K.

Then, quite surprisingly, when we enable hardware ray tracing, the results become very competitive at all three resolutions.

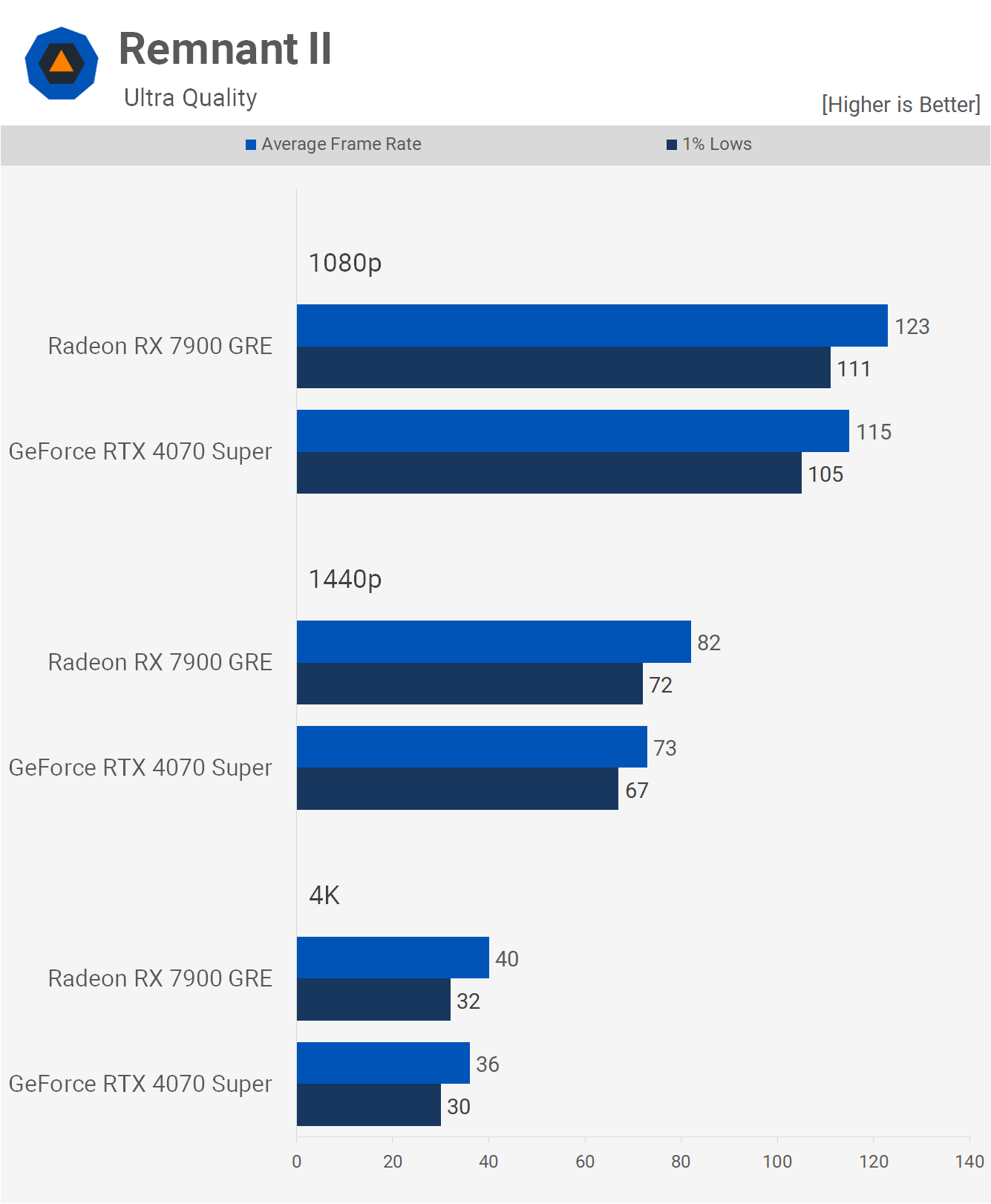

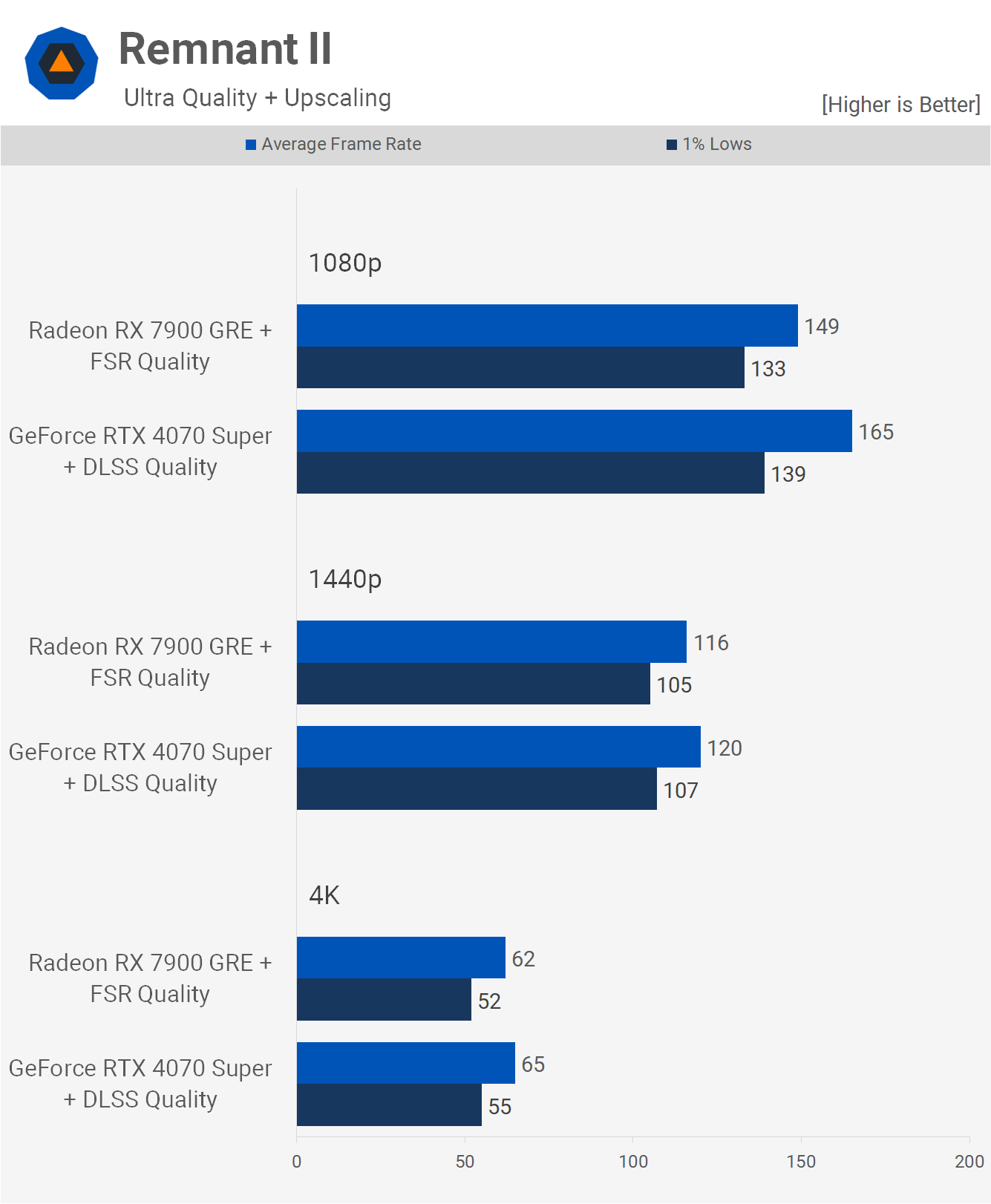

Remnant II seems to perform best with the 7900 GRE, though the margins aren’t substantial, with a mere 7% performance advantage at 1080p, 12% at 1440p, and 11% at 4K. The extra frames at 1440p and 4K will be noticeable, making this a significant win for the GRE.

However, enabling upscaling changes the scenario, and now performance is very similar at 1440p and 4K, with a very slight advantage shifting towards the 4070 Super. The GeForce GPU was also 11% faster at 1080p, though upscaling doesn’t work nearly as well at this resolution, especially when compared to its effectiveness at 1440p, and particularly at 4K.

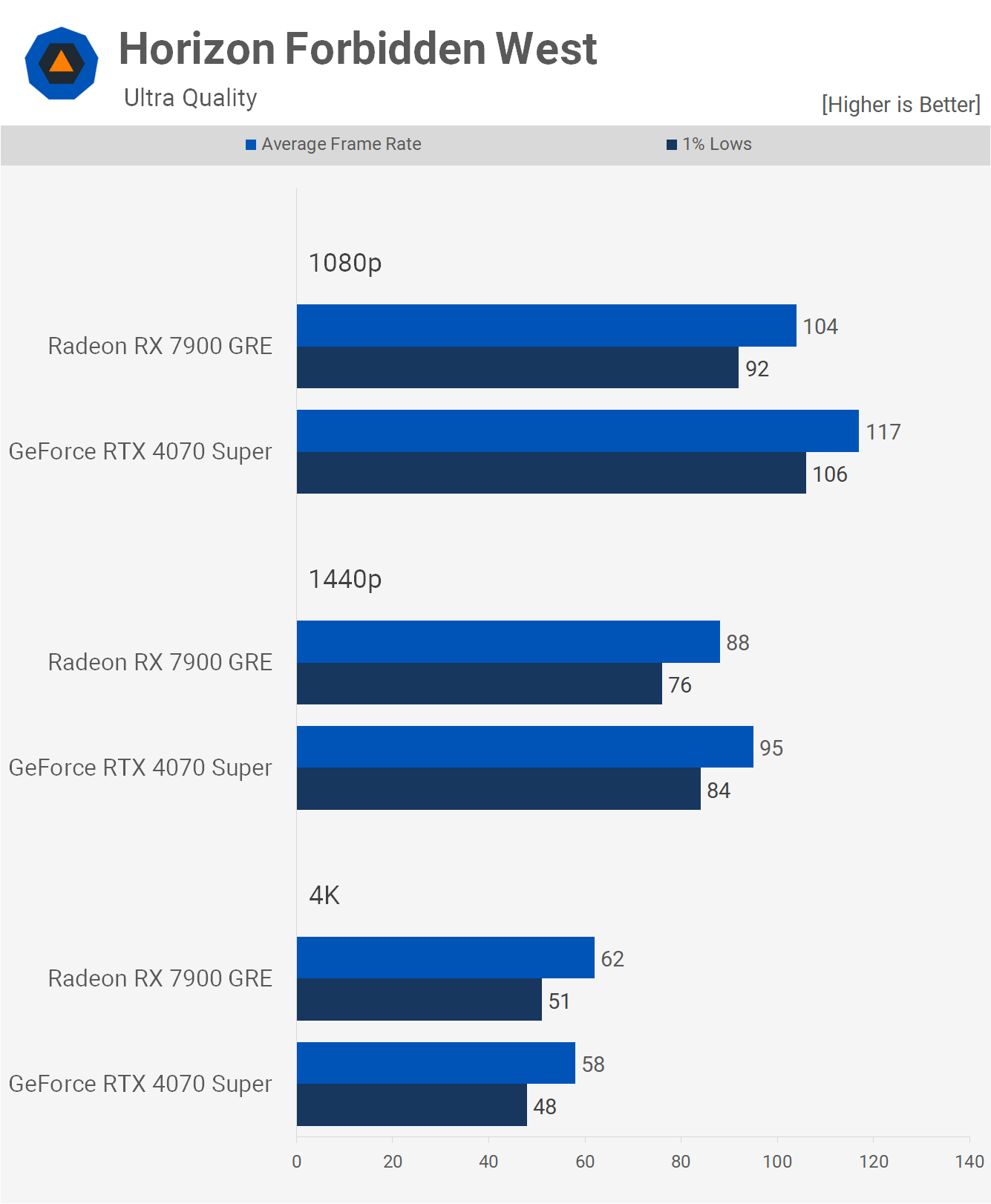

Horizon Forbidden West presents some mixed results for us. At 1080p, the 4070 Super delivered 13% more frames than the 7900 GRE, with an average of 117 fps. That margin was reduced to 8% at 1440p before flipping in favor of the GRE at 4K, handing it a small 7% win.

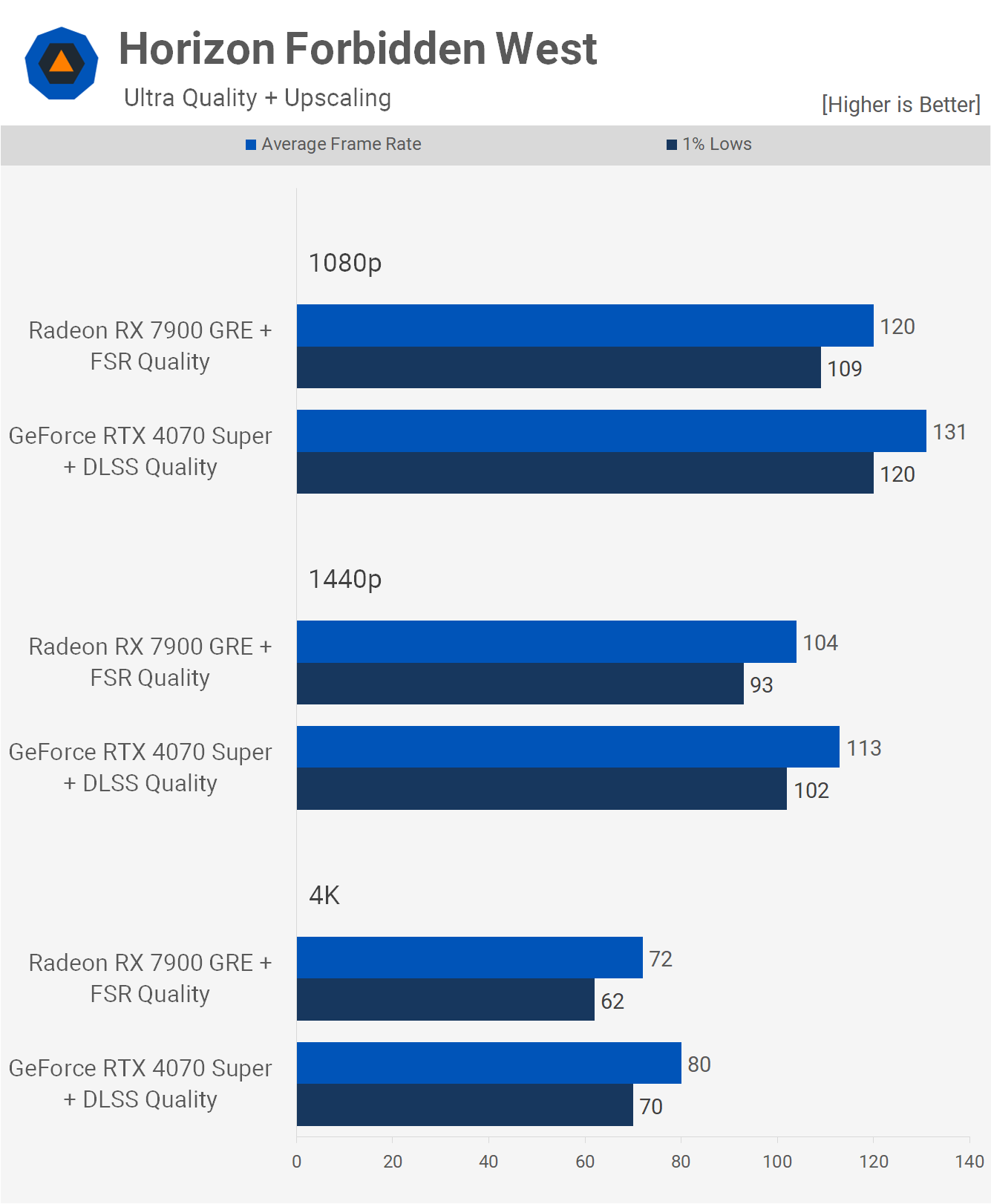

Once again, enabling upscaling benefits the 4070 Super and its limited memory bandwidth more, and now the GeForce GPU is seen to be 9% faster at 1080p and 1440p, with an 11% advantage at 4K. Essentially, the 4070 Super saw a 38% uplift with upscaling enabled, whereas the 7900 GRE saw just a 16% boost.
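Those uplift figures can be tied back to the margins. As a rough check, and assuming the 38% and 16% uplifts refer to the 4K results (the text does not say which resolution), the sketch below applies both uplifts to the native 4K gap, where the GRE was 7% ahead. The helper name is hypothetical.

```python
def ratio_after_uplift(native_ratio: float, uplift_a: float, uplift_b: float) -> float:
    """Scale an A/B performance ratio by each card's own upscaling uplift."""
    return native_ratio * (1 + uplift_a) / (1 + uplift_b)


# Native 4K: GRE 7% faster, so 4070 Super / GRE = 1 / 1.07
native_super_over_gre = 1 / 1.07

# Assumed 4K uplifts from upscaling: 4070 Super +38%, 7900 GRE +16%
upscaled = ratio_after_uplift(native_super_over_gre, 0.38, 0.16)

print(f"4070 Super lead with upscaling: {(upscaled - 1) * 100:.0f}%")  # 11%
```

Under that assumption the numbers line up with the 11% advantage quoted for 4K with upscaling.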

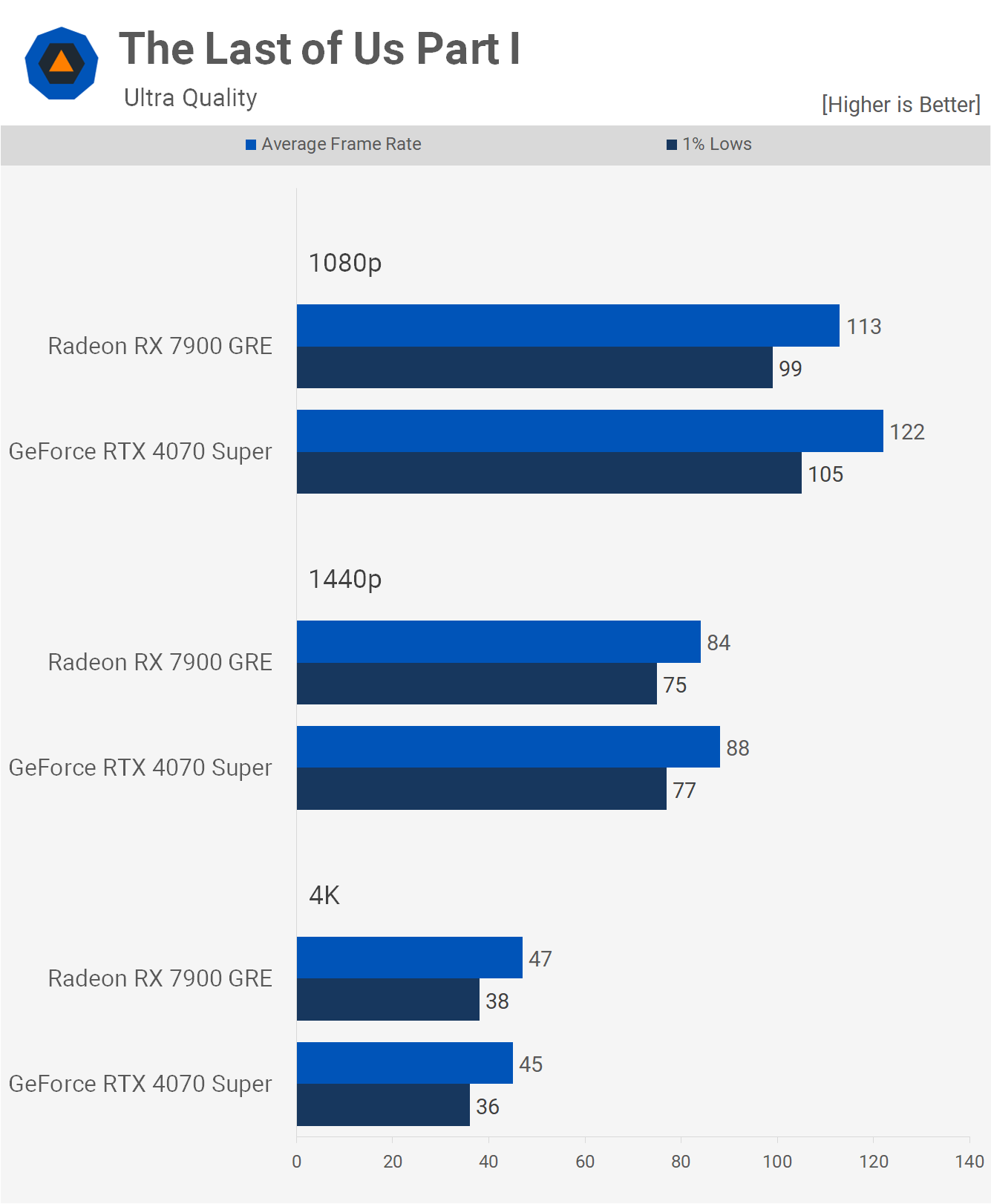

Moving on to The Last of Us Part I, we find relatively competitive performance at all three tested resolutions as the 4070 Super was just 8% faster at 1080p, 5% faster at 1440p, and then 4% slower at 4K.

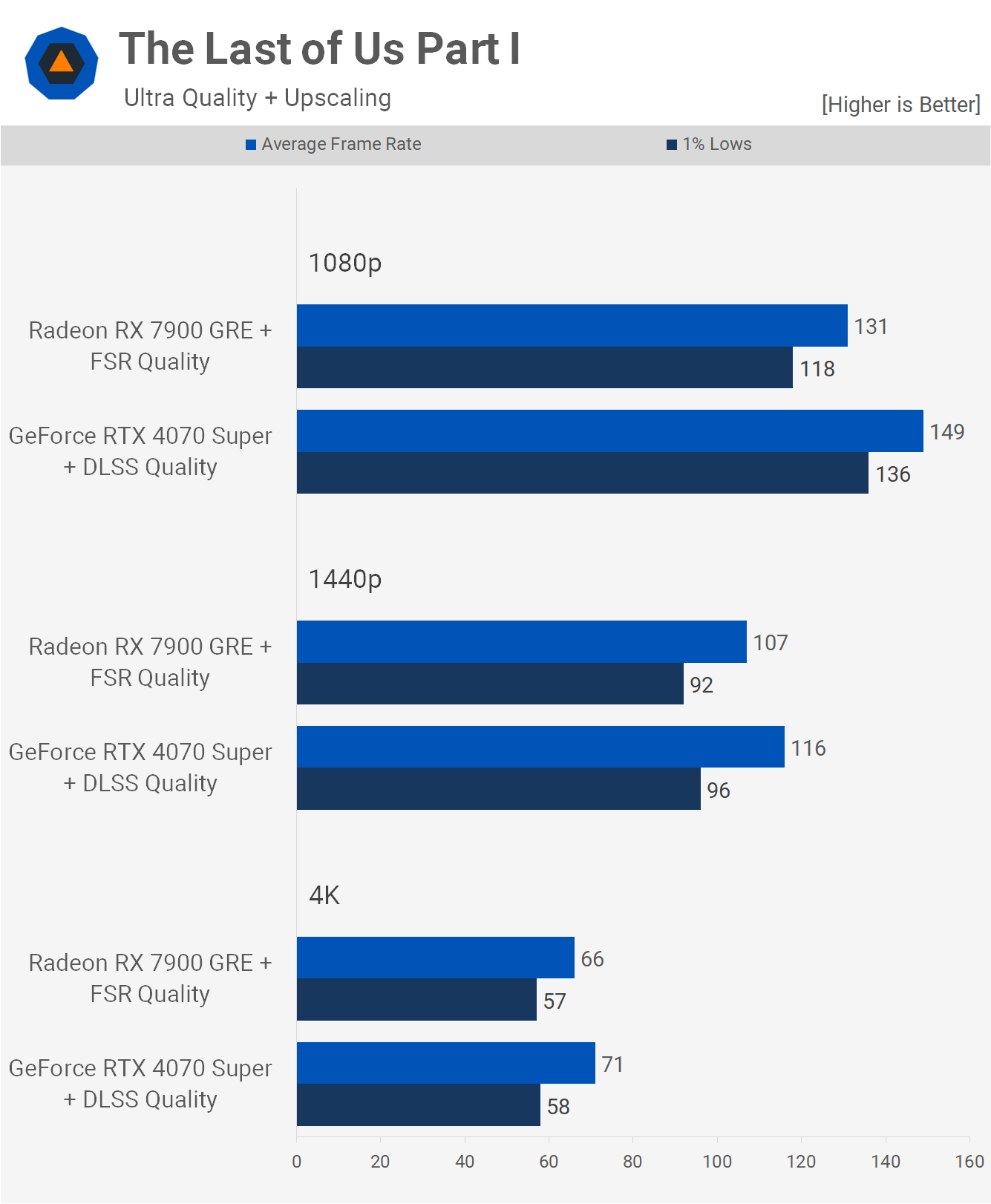

Again, enabling upscaling does further benefit Nvidia in this matchup, as the 4070 Super is now 14% faster at 1080p and 8% faster at both 1440p and 4K. So, the margins at the higher resolutions are not vast, but they are improved for Nvidia compared to the native performance.

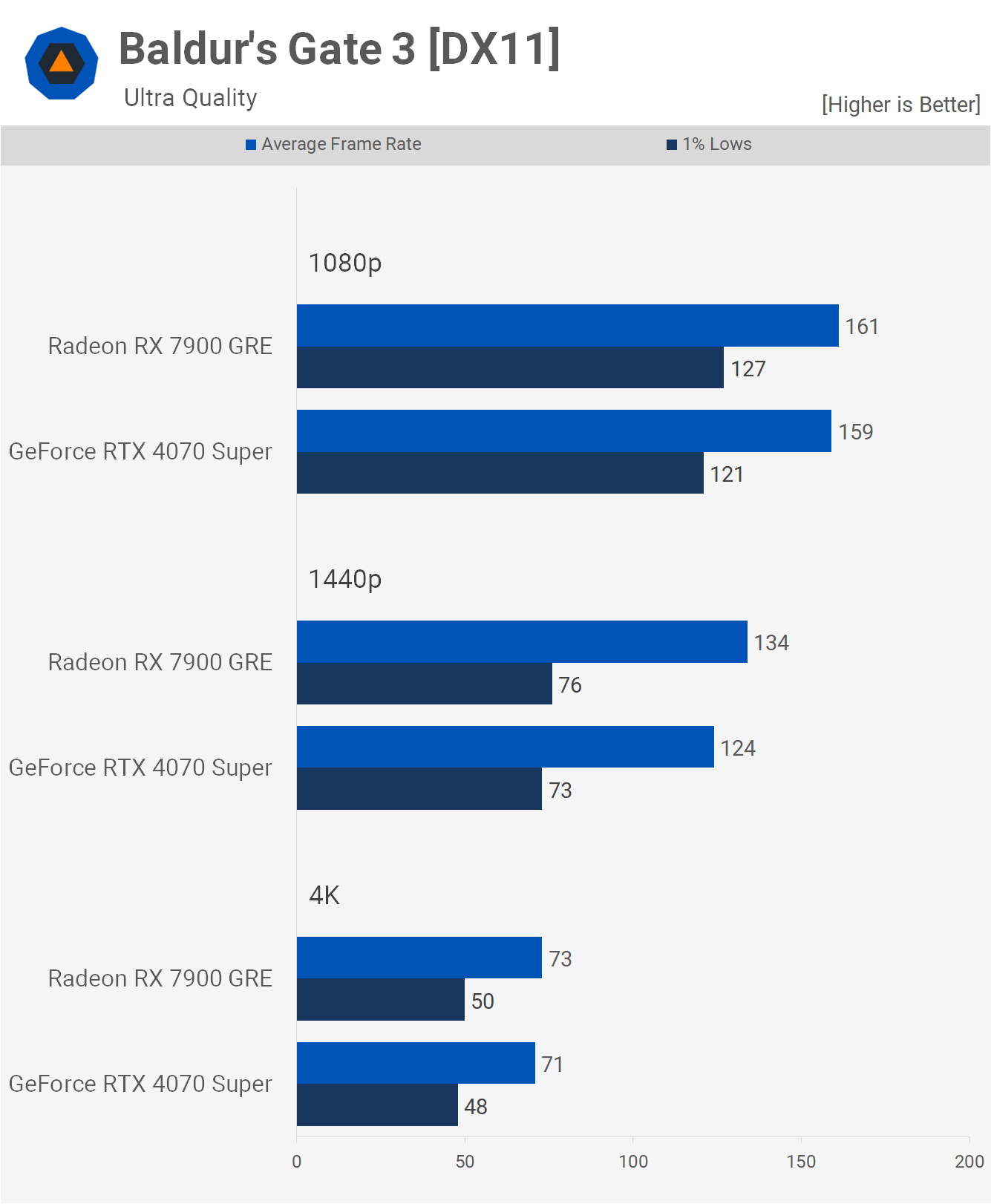

Baldur’s Gate 3 provides us with competitive results, especially at 1080p and 4K, as the margin at 1440p extended to 8%. It’s likely the 7900 GRE could offer greater performance at 1080p as well, had it not been limited by CPU performance.

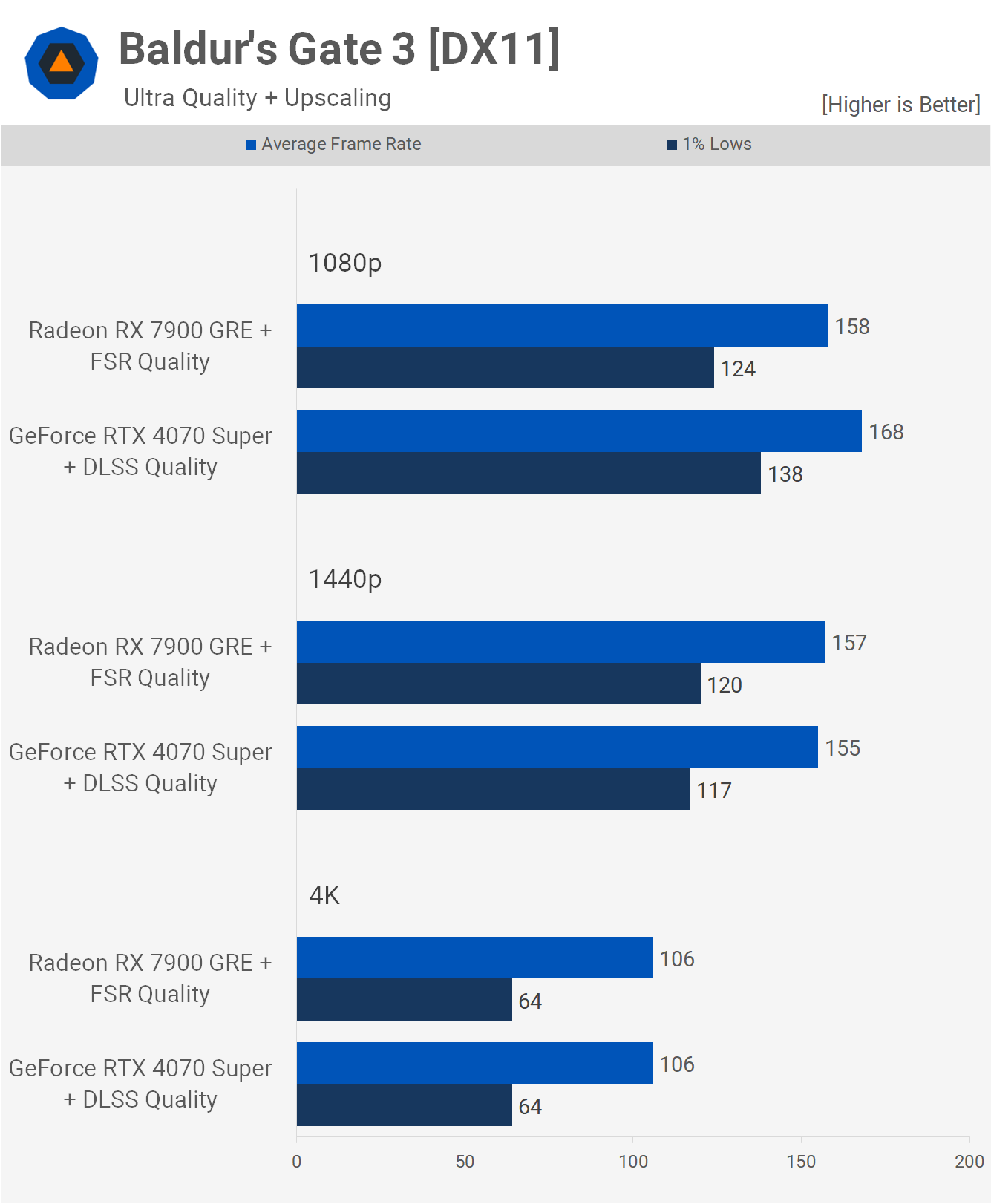

However, if we enable upscaling, it’s actually the 4070 Super that performs best at 1080p, though we are still clearly CPU limited here, as evidenced by the fact that the 1080p and 1440p frame rates are very similar. Then, at 4K, performance is identical, so there’s no real winner in this title.

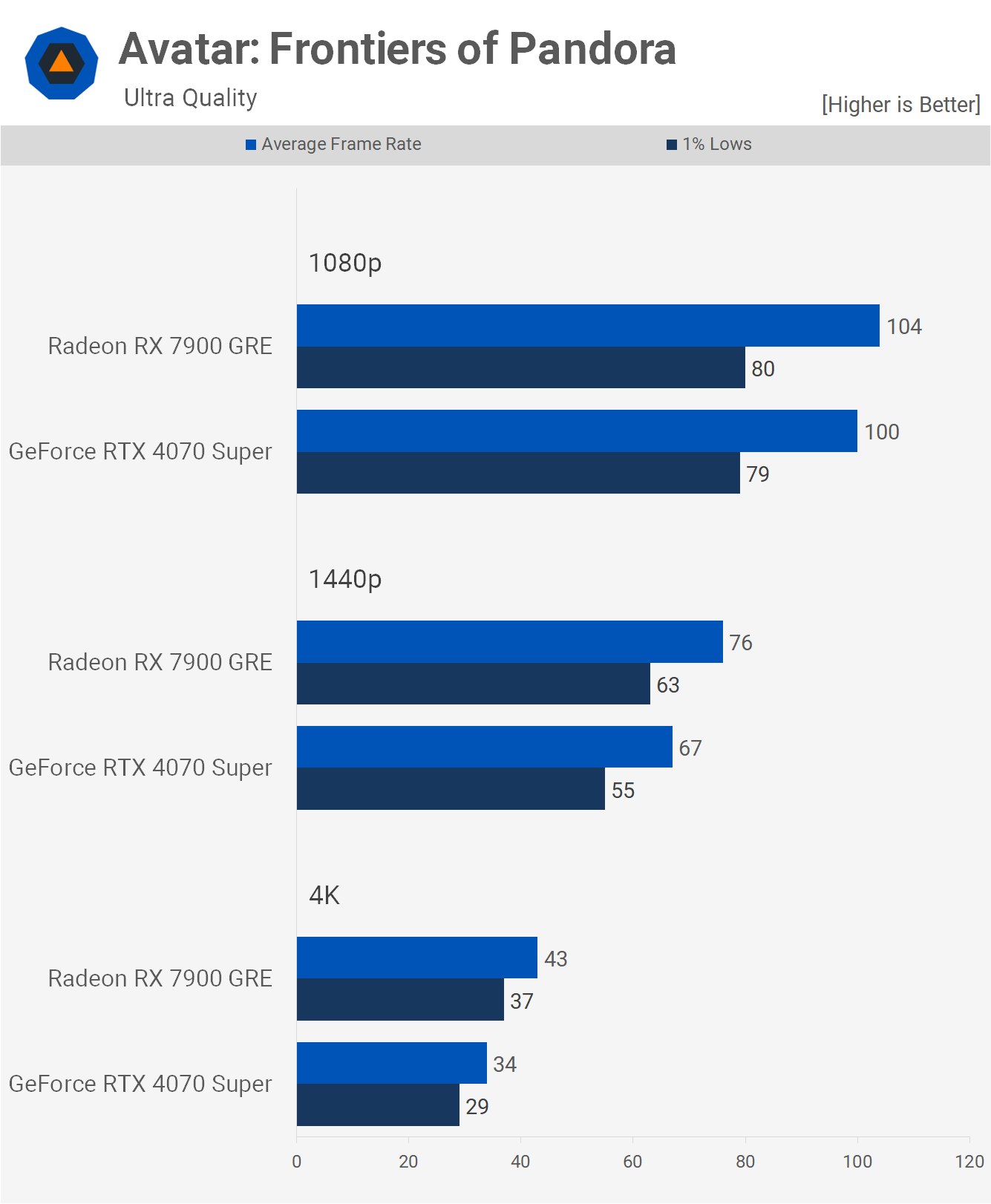

Next, we have Avatar, which enables some level of ray tracing by default. Even so, the 7900 GRE does exceptionally well here, just nudging out the 4070 Super by a 4% margin at 1080p, 13% at 1440p, and a rather substantial 26% margin at 4K.

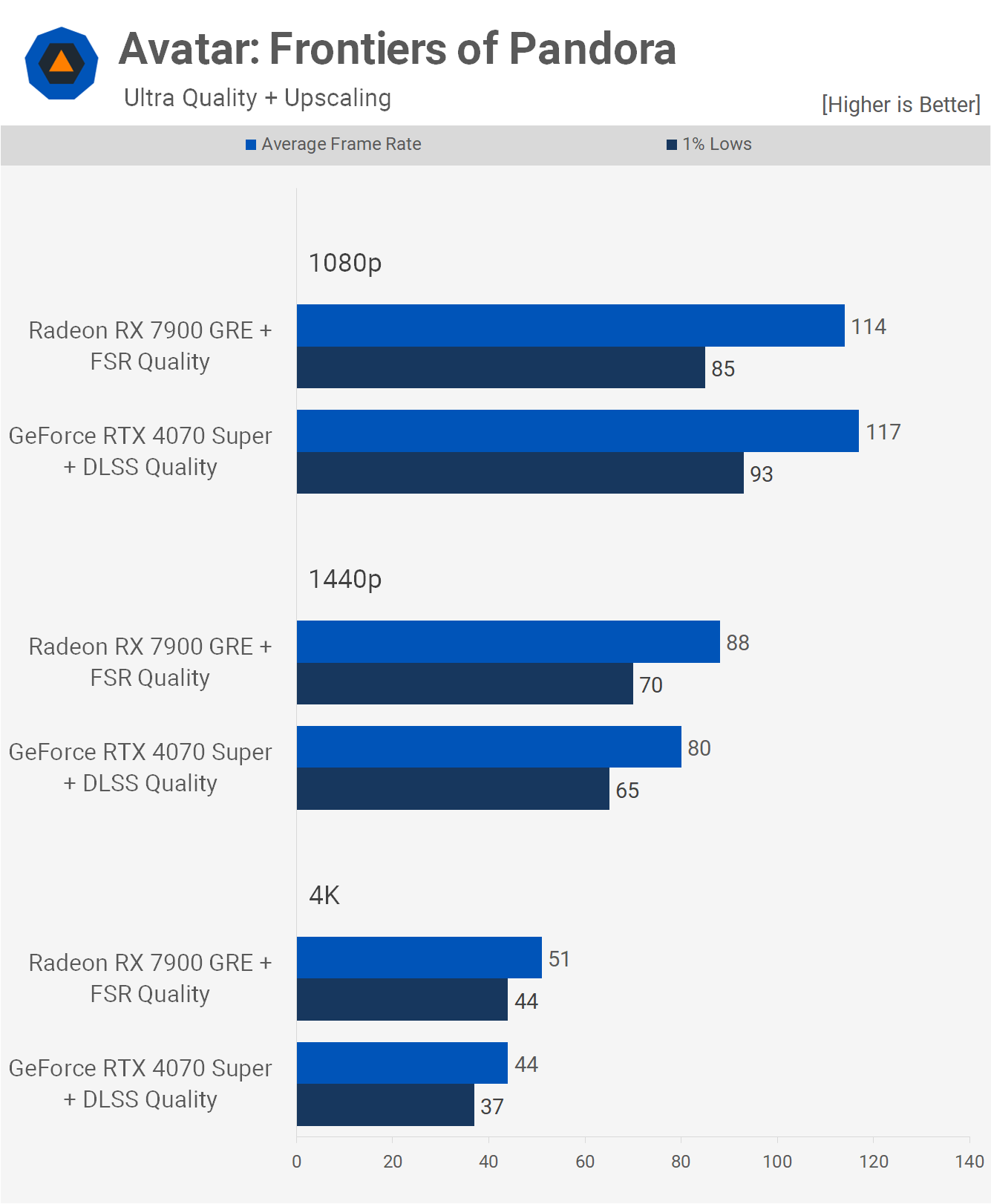

Enabling upscaling doesn’t change much; the 4070 Super ends up being a mere 3% faster at 1080p, but beyond that, the 7900 GRE regains the lead at 1440p, offering 10% more performance and 16% more at 4K.

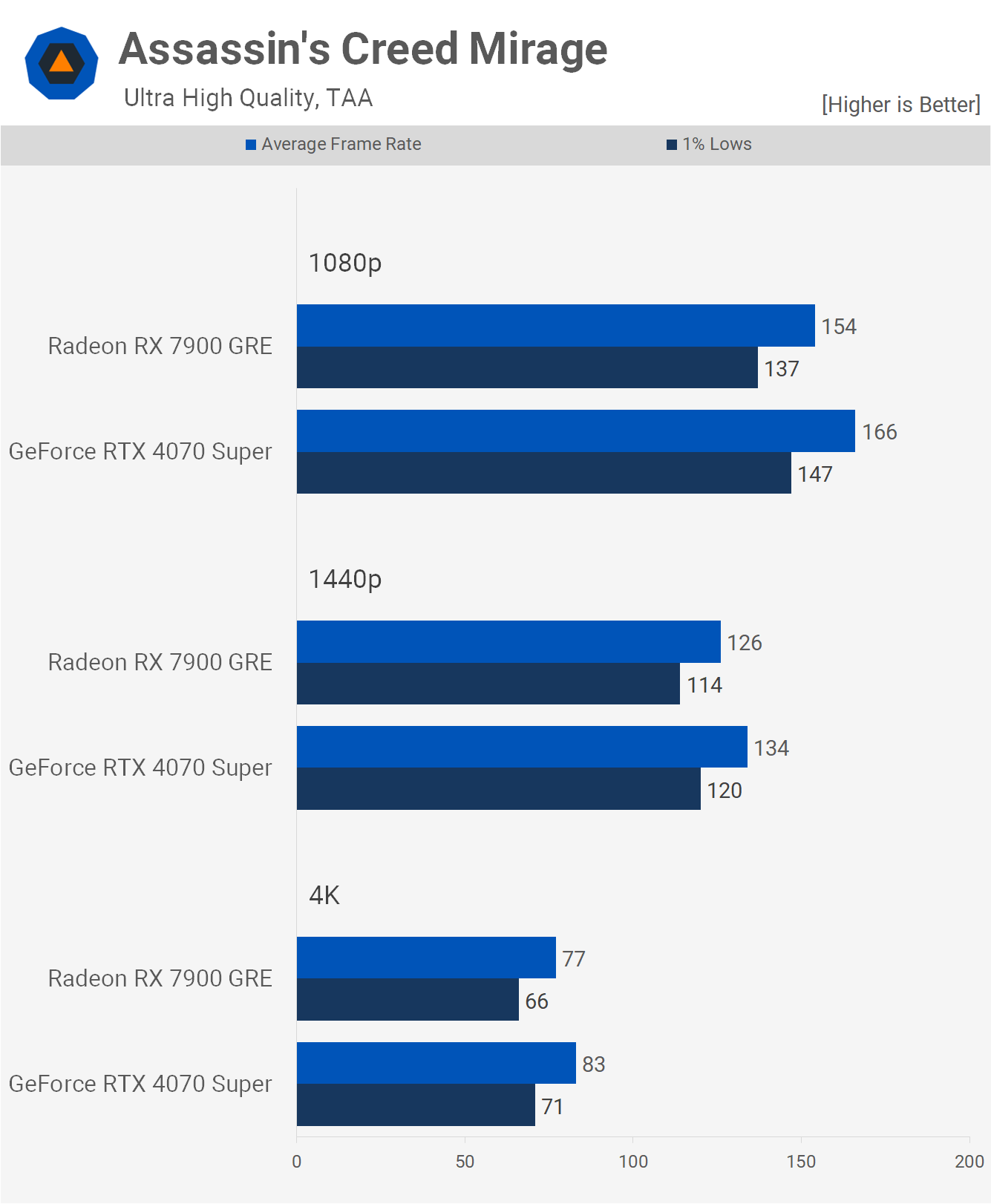

In Assassin’s Creed Mirage, the GeForce GPU holds a minor performance advantage, delivering around 8% more frames. The difference is probably not significant enough to be noticeable, even at the 4K resolution.

Enabling upscaling doesn’t change the situation significantly. Both GPUs encounter a hard CPU limit at 1080p, while the 4070 Super is faster at 1440p and 4K, by up to a 9% margin, which is again not substantial.

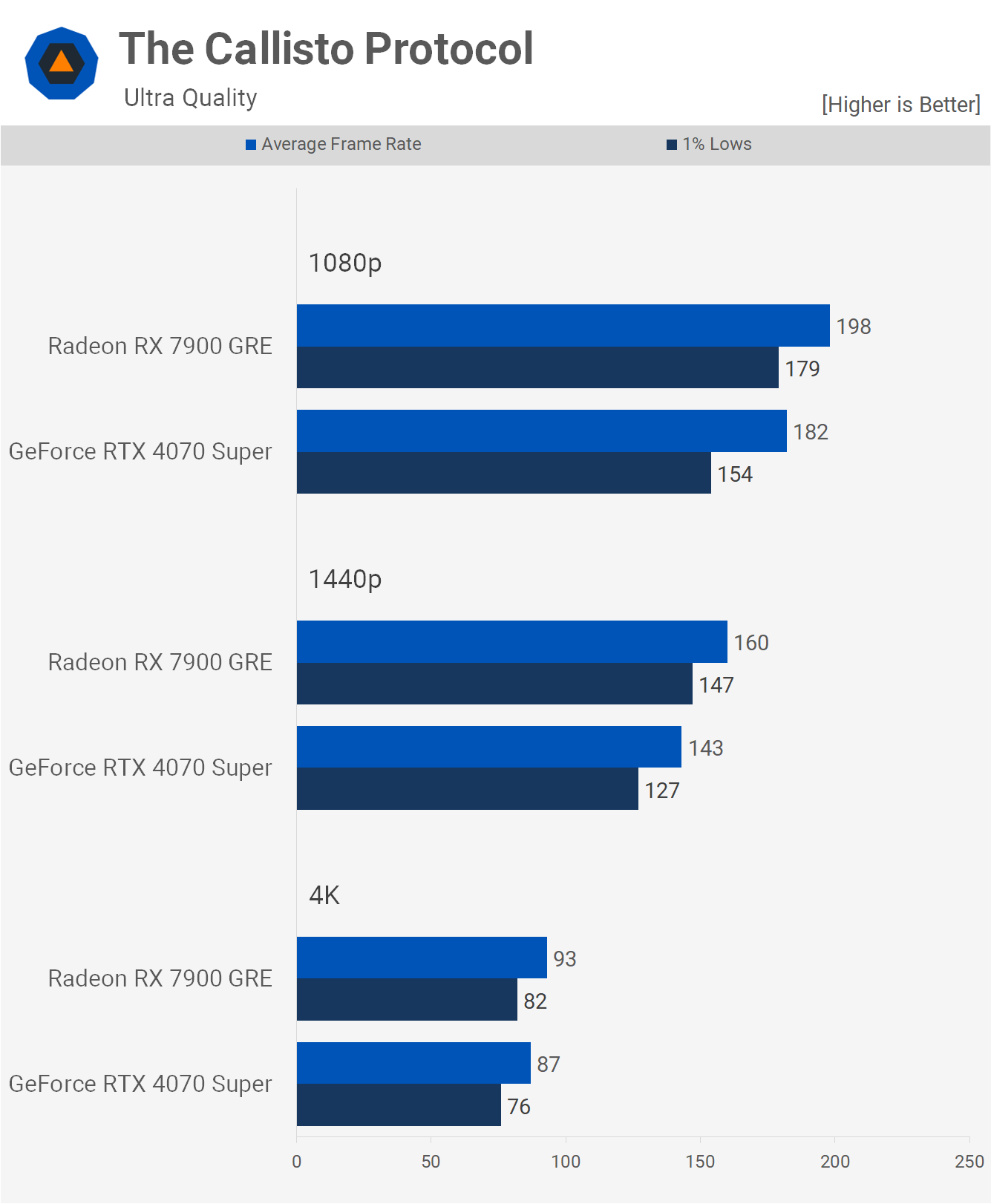

We’re now examining the rasterization and ray tracing performance in several titles, starting with The Callisto Protocol. Here, the 7900 GRE holds a performance advantage, delivering 9% better performance at 1080p, 12% at 1440p, and 7% at 4K.

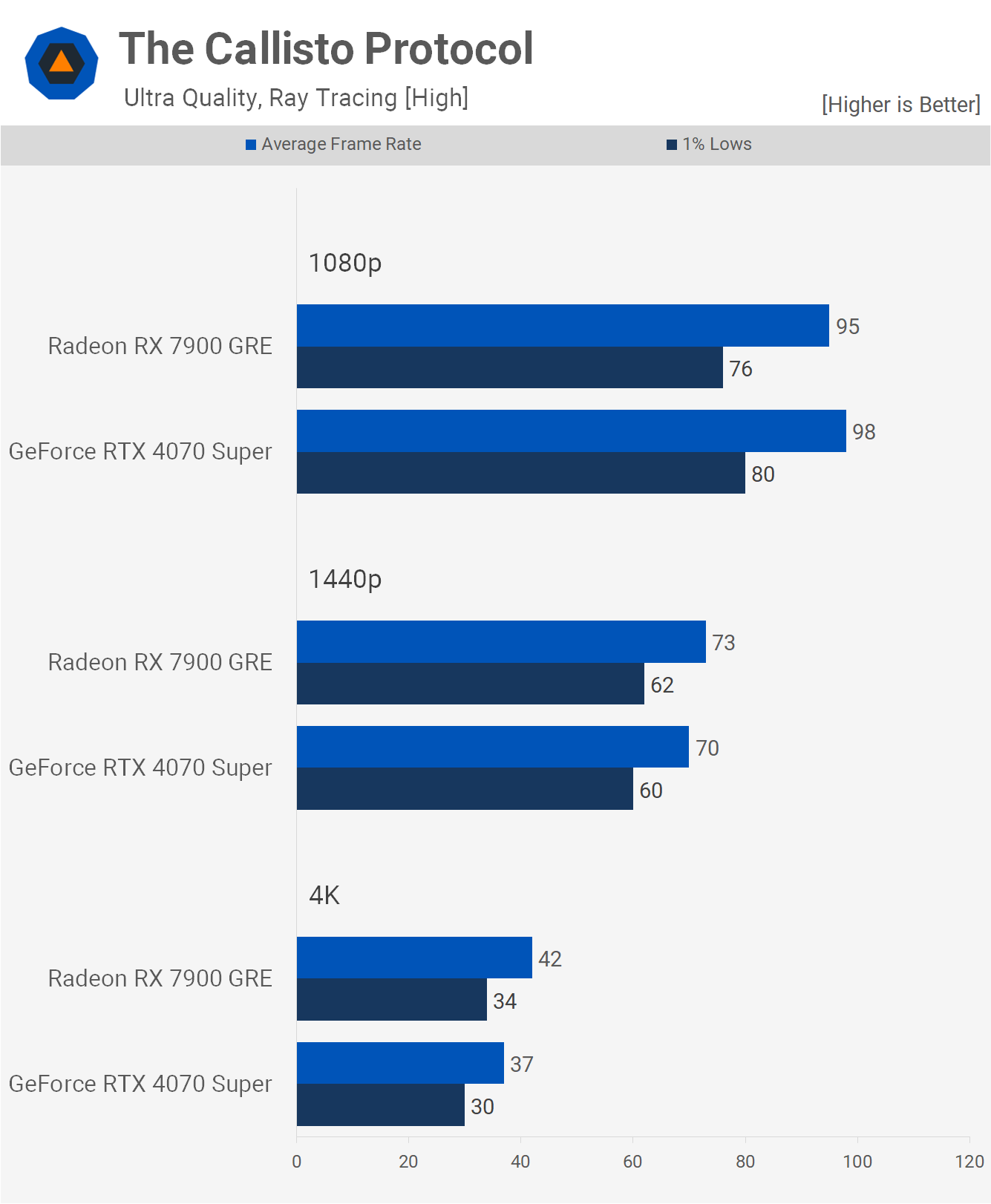

When ray tracing is enabled, both GPUs deliver similar performance, which is unexpected. The difference is no more than 3 fps at 1080p and 1440p, while the GRE was 14% faster at 4K. However, performance for both GPUs wasn’t stellar in this scenario.

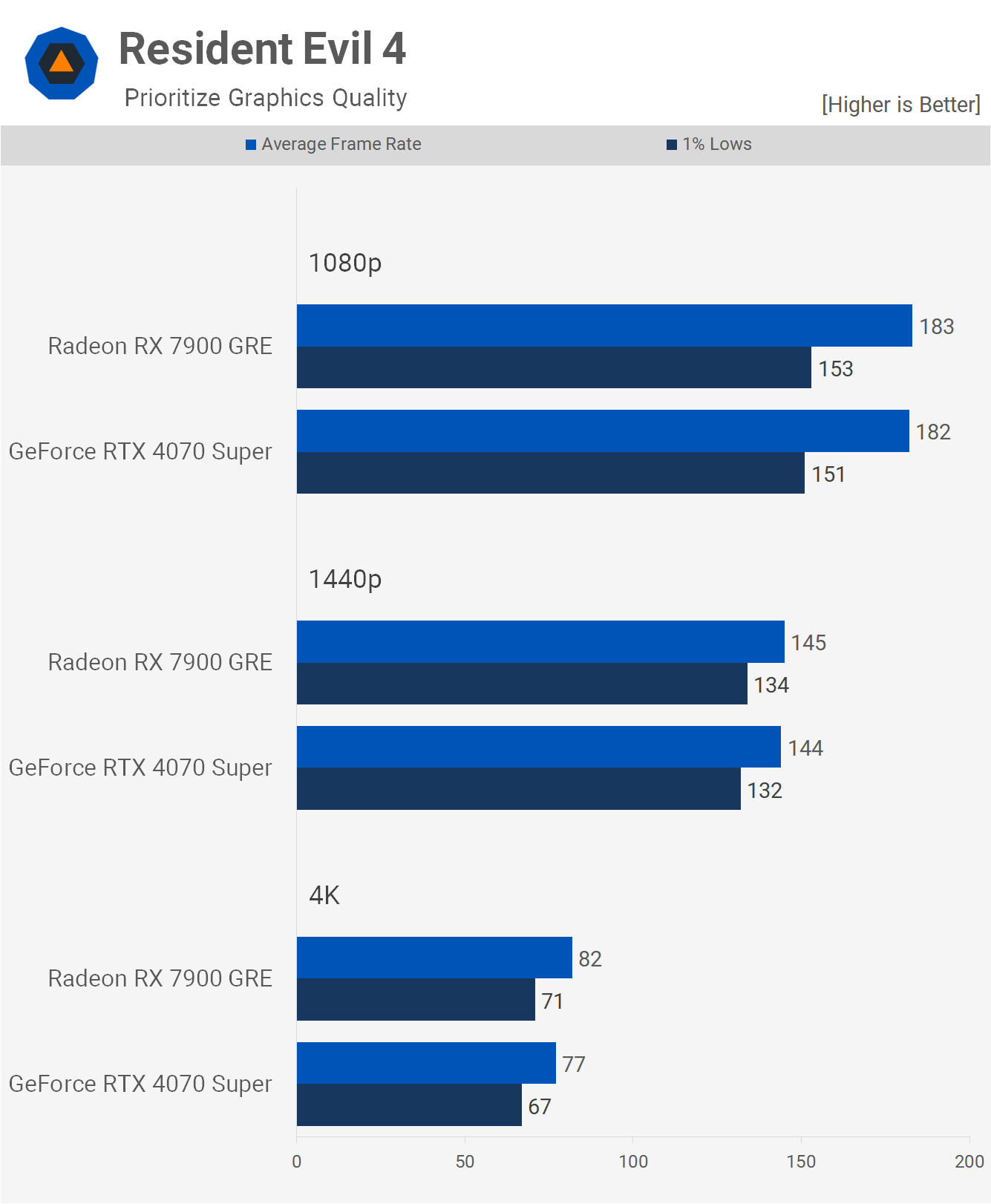

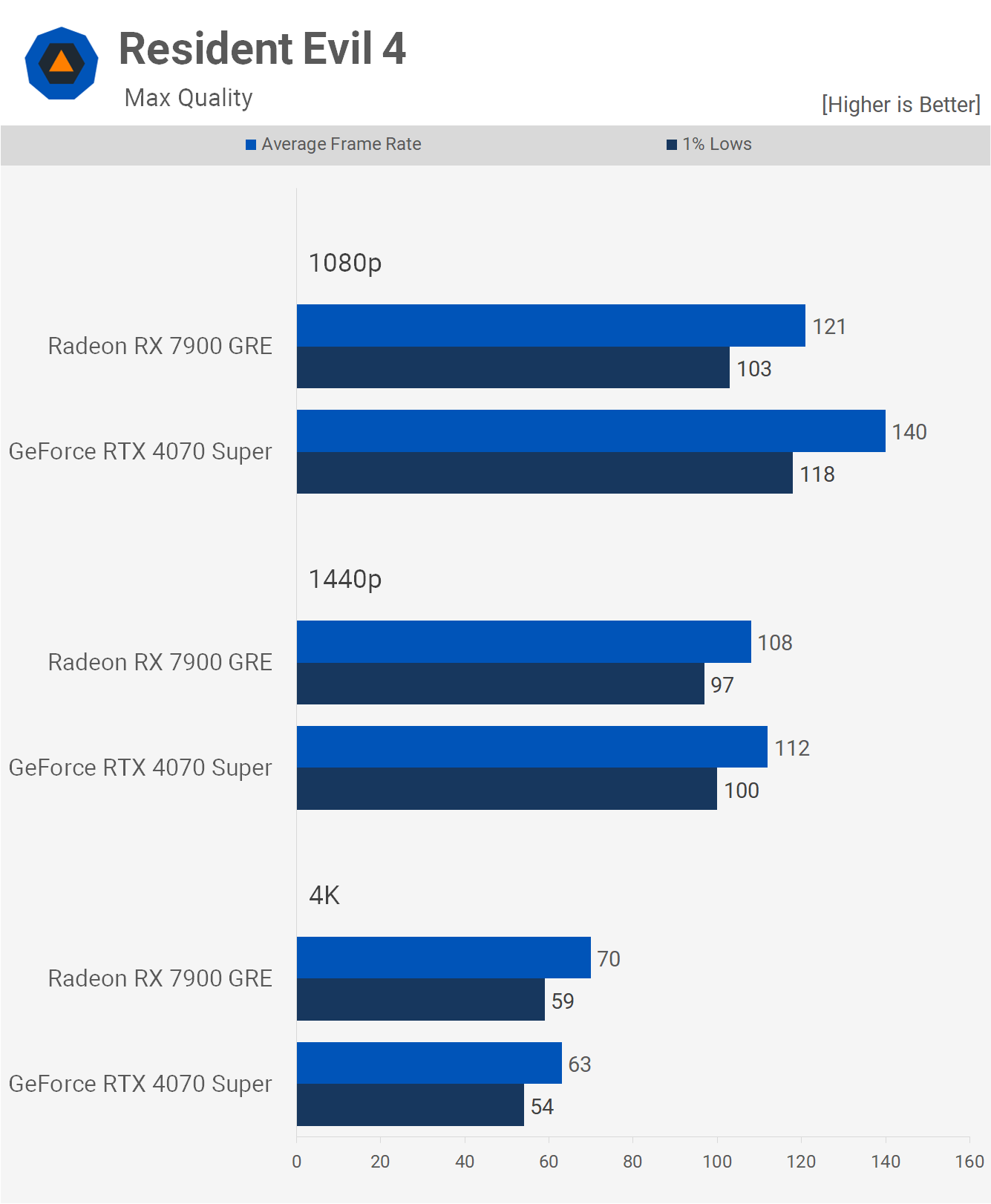

The results for Resident Evil 4, using the prioritize graphics quality preset, are very close, leaving little else to discuss.

As expected, enabling ray tracing gives the 4070 Super an edge, at least at 1080p and 1440p. The significant difference is only at 1080p, where the GeForce GPU is 16% faster. However, by 1440p, that margin decreases to just 4% before reversing at 4K, where the 7900 GRE was 11% faster. It’s worth noting that the RT effects in this title are not particularly impressive, a sentiment applicable to most games currently supporting the technology.

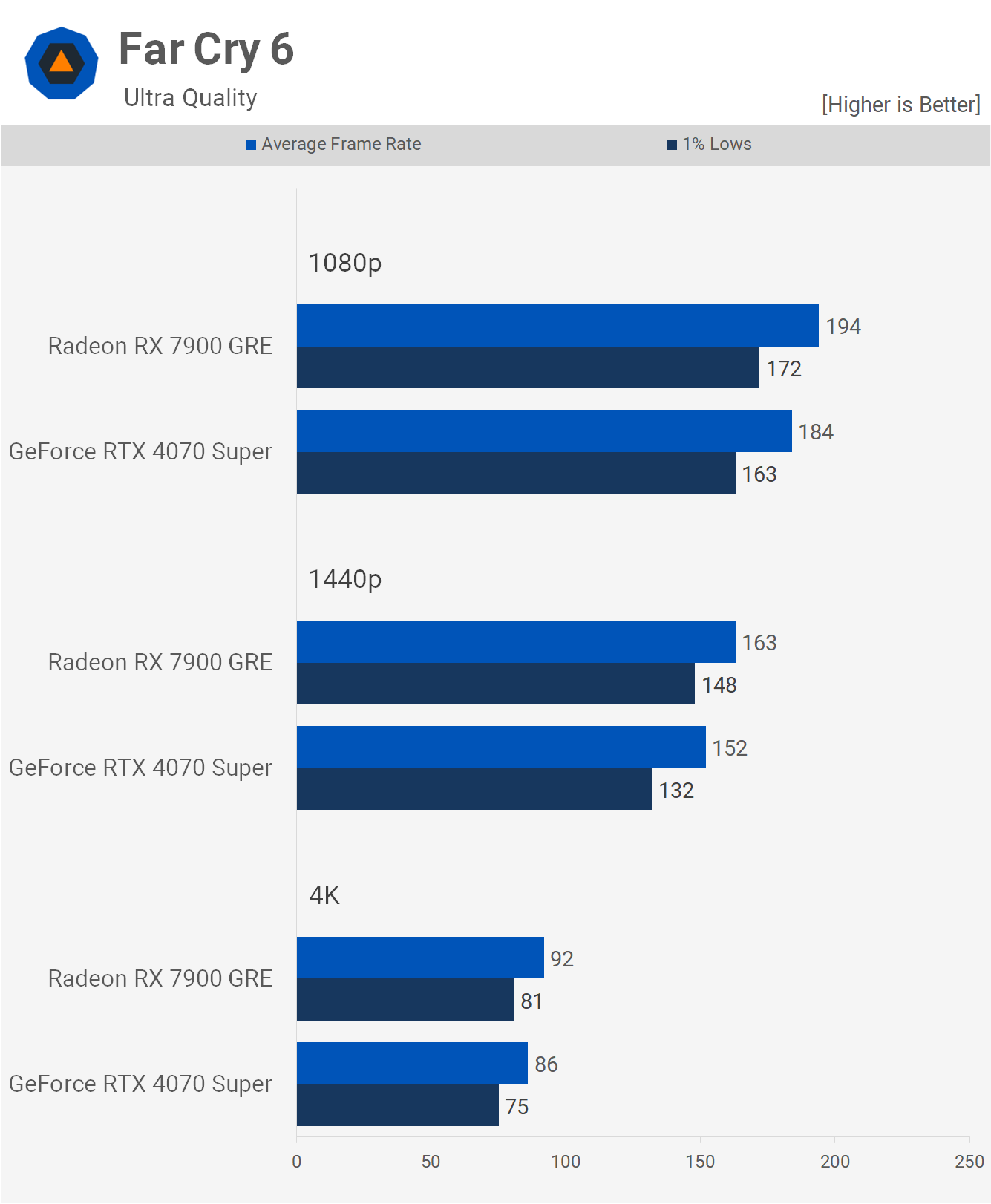

Far Cry 6 doesn’t pose a significant challenge to these modern GPUs, even at 4K, where frame rates exceed 60 fps. The 7900 GRE is up to 7% faster, which isn’t a considerable margin. Given the strong overall performance, the difference between the two products is unlikely to be noticeable.

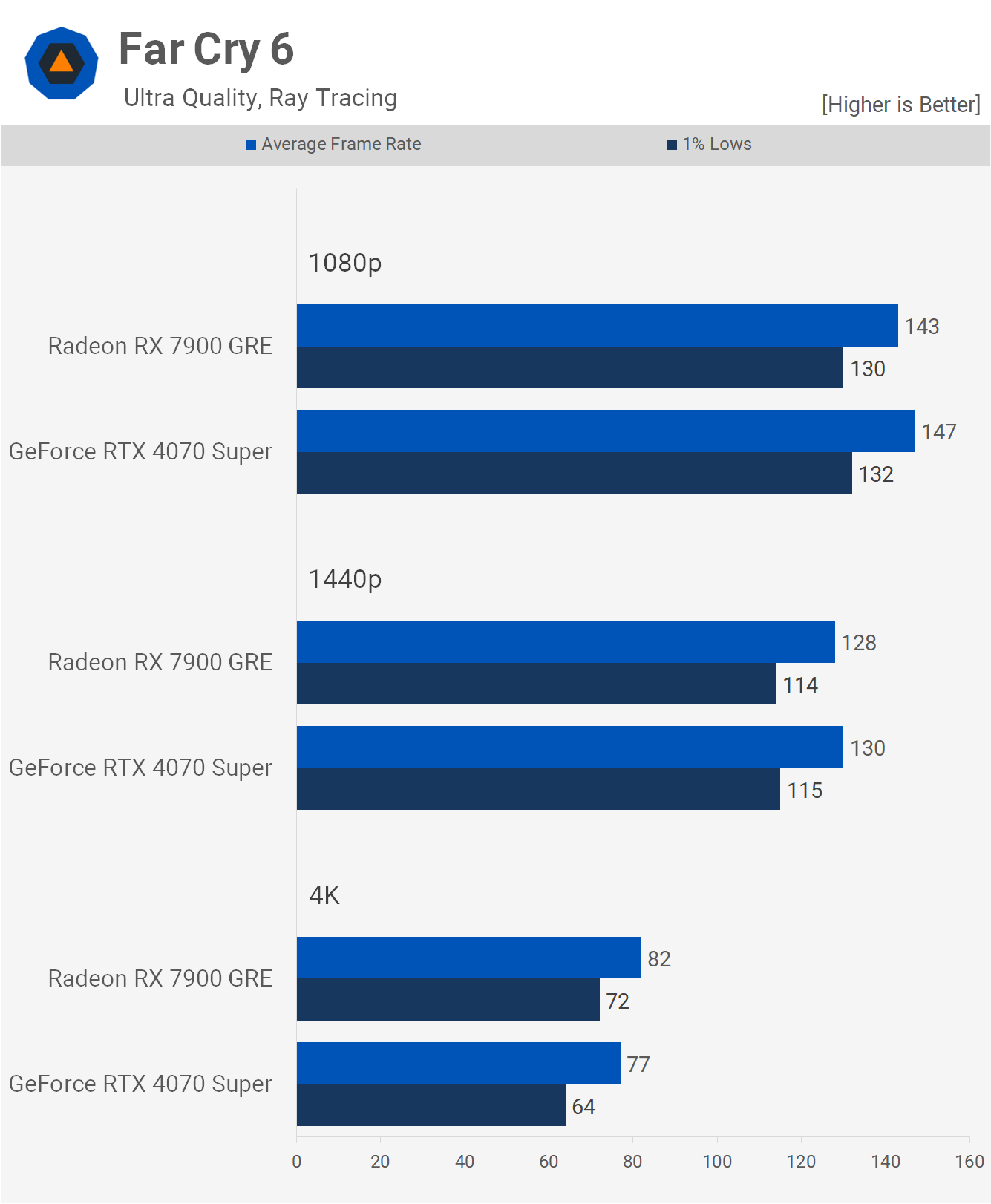

Even with ray tracing enabled, performance remains excellent, and again, the 7900 GRE manages to outperform the 4070 Super at 4K.

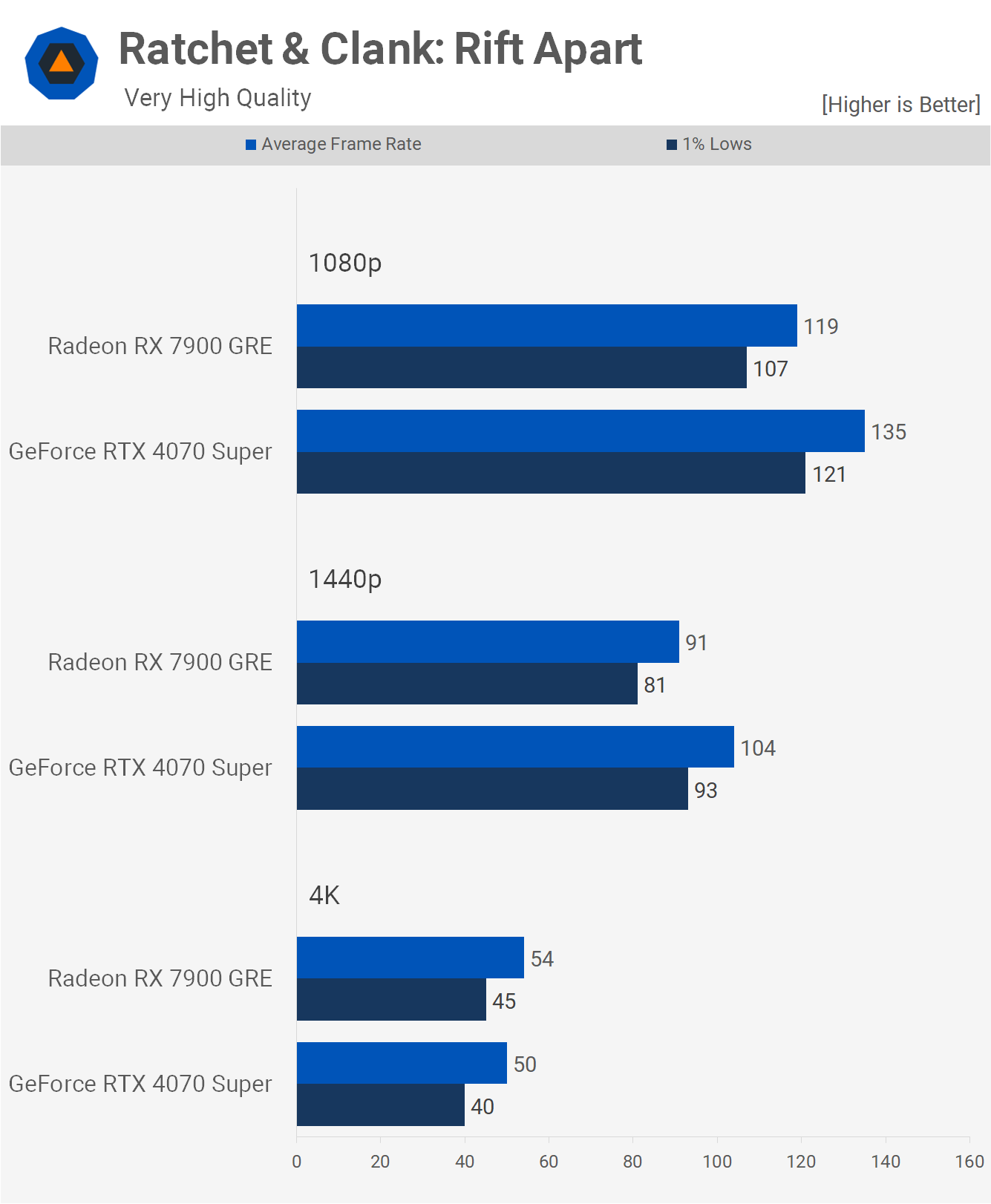

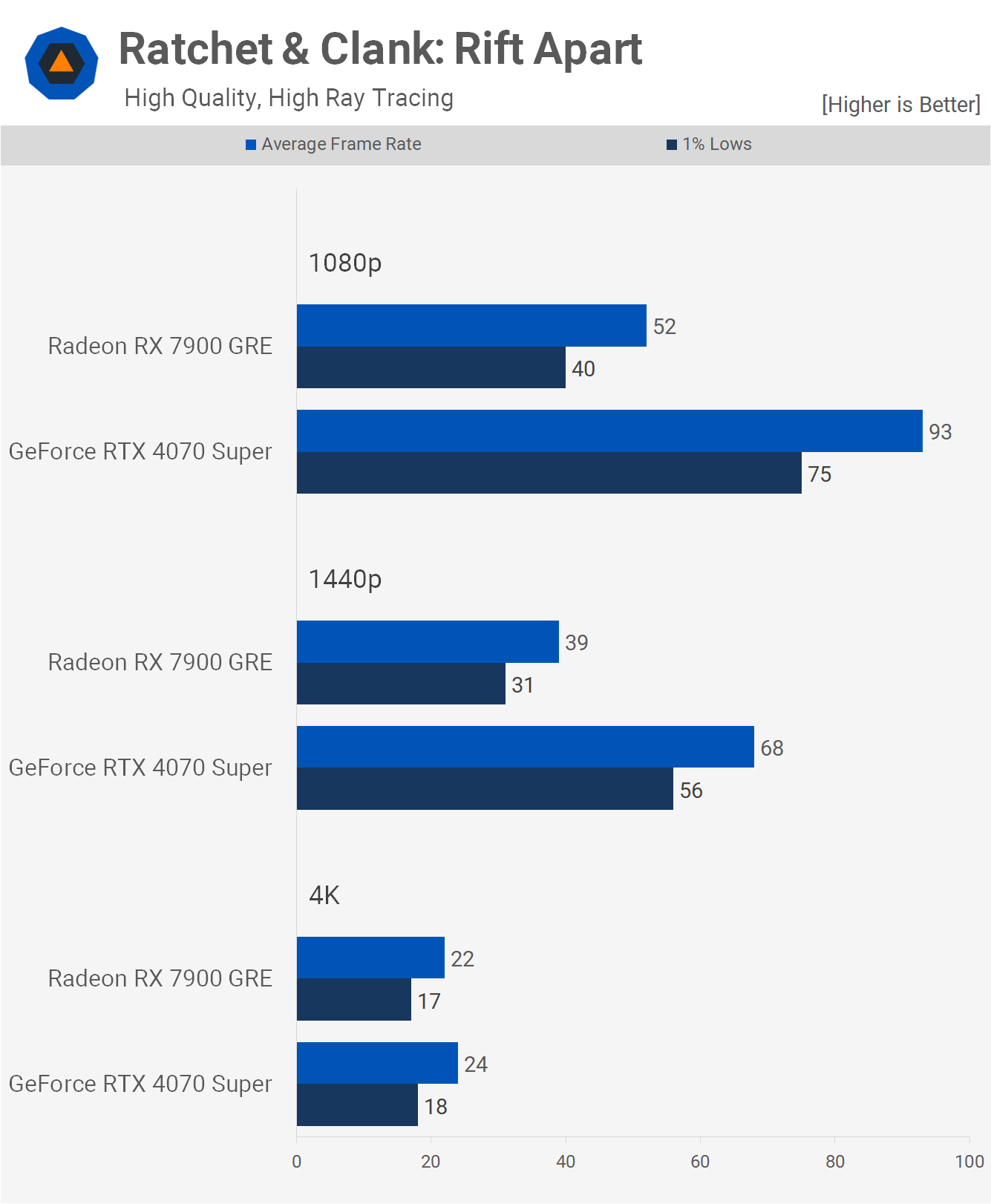

Next up is Ratchet & Clank: Rift Apart, one of several titles tested for rasterization, ray tracing, and ray tracing with upscaling. For the rasterization results using the very high preset, the 4070 Super is 13% faster at 1080p, 14% faster at 1440p, and then 7% slower at 4K. Once again, the Radeon GPU shows more strength at 4K.

However, AMD struggles with ray tracing performance in this title, as demonstrated by the 4070 Super significantly outperforming the 7900 GRE, with almost 80% greater performance at 1080p and 74% at 1440p. The results even out at 4K, though neither GPU delivers acceptable performance, rendering these results somewhat moot.

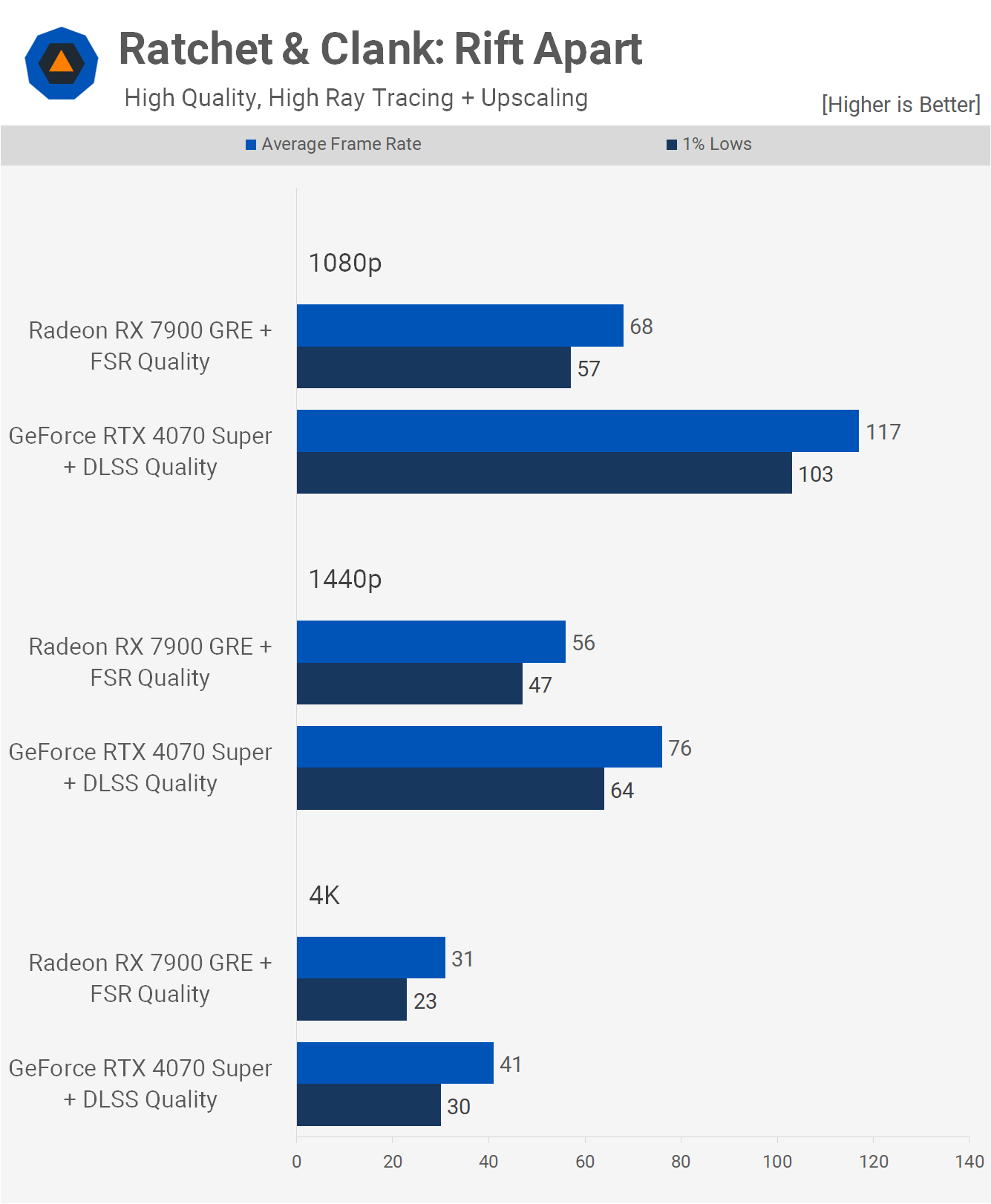

Enabling upscaling allows the 7900 GRE to achieve decent performance at 1440p, with an average of 56 fps. While not outstanding, it’s certainly sufficient for most players to enjoy. Nevertheless, the 4070 Super still provides a substantial 36% performance increase, with a much smoother 76 fps on average.
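For reference, the percentage margins quoted throughout follow from the average frame rates. A short sketch using the two 1440p figures above (function names are illustrative) also shows why “X% faster” and “X% slower” are not interchangeable:

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster A is than B, as a percentage of B's frame rate."""
    return (fps_a / fps_b - 1) * 100


def percent_slower(fps_a: float, fps_b: float) -> float:
    """How much slower A is than B, as a percentage of B's frame rate."""
    return (1 - fps_a / fps_b) * 100


SUPER_FPS = 76  # RTX 4070 Super, 1440p RT + upscaling (from the text)
GRE_FPS = 56    # 7900 GRE, same settings (from the text)

print(f"4070 Super is {percent_faster(SUPER_FPS, GRE_FPS):.0f}% faster")  # 36%
print(f"7900 GRE is {percent_slower(GRE_FPS, SUPER_FPS):.0f}% slower")    # 26%
```

The same 20 fps gap is a 36% lead for the GeForce GPU but a 26% deficit for the Radeon GPU, because the baseline changes.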

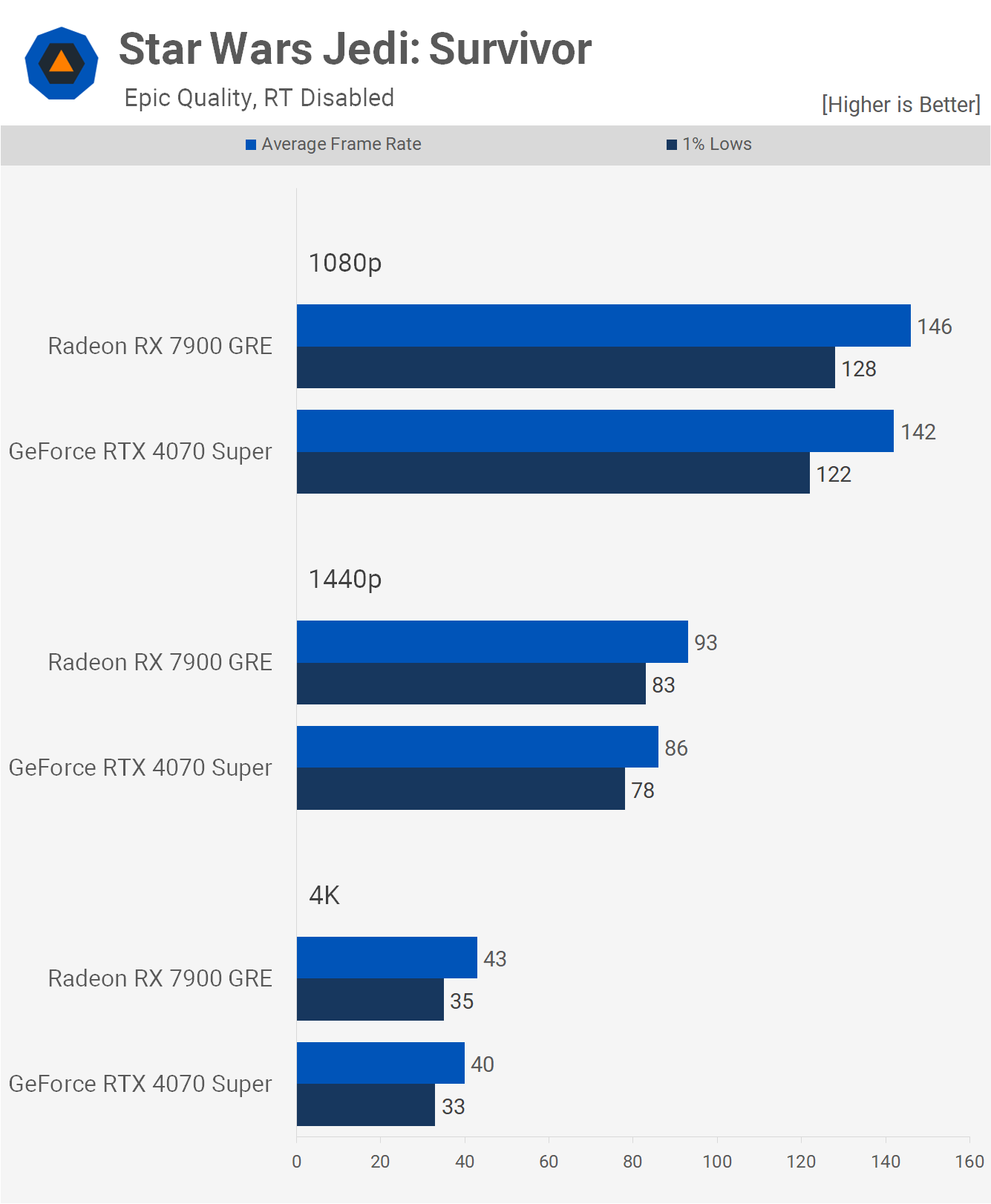

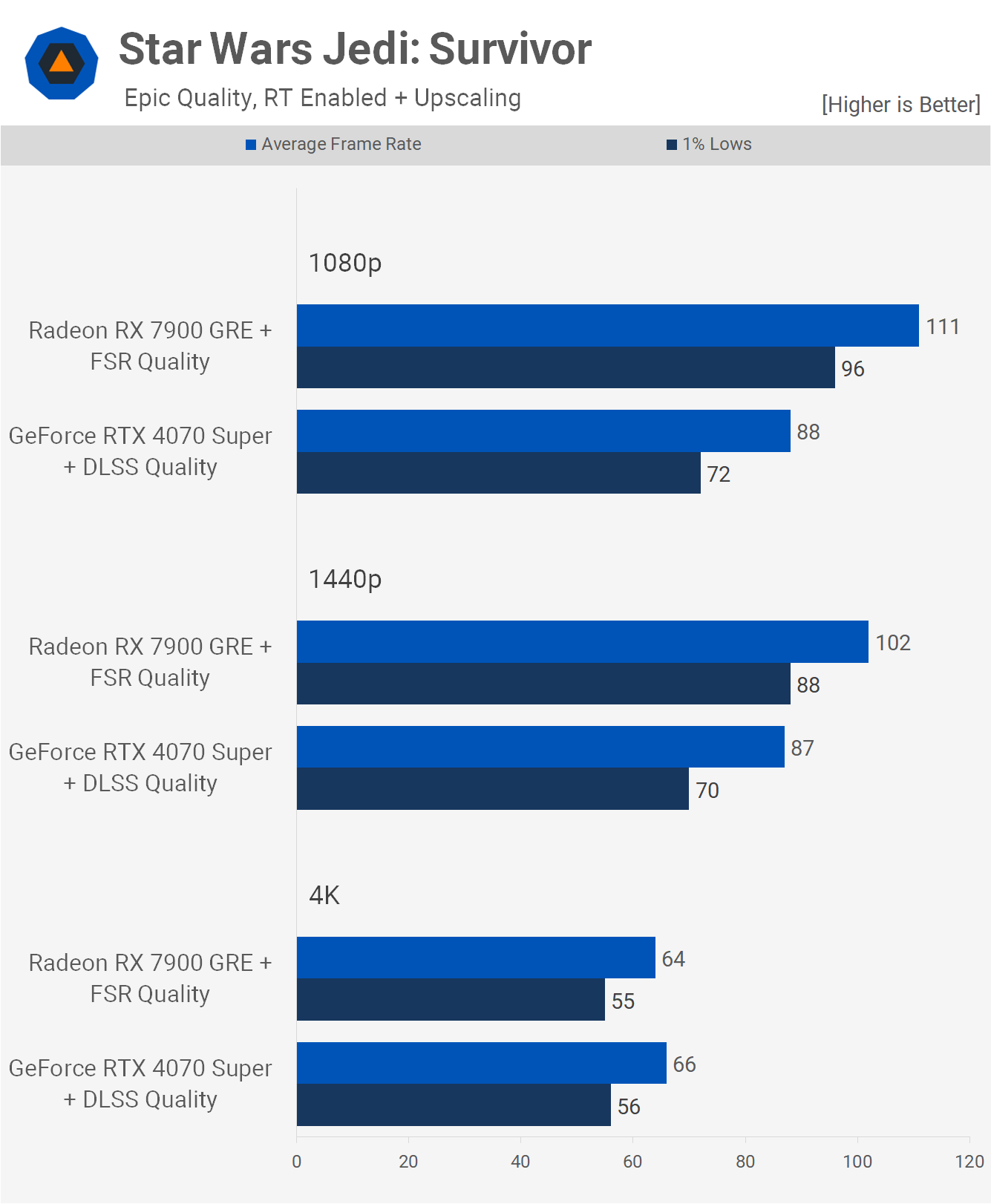

In Star Wars Jedi: Survivor, using the ‘epic’ quality preset gives the Radeon GPU a very slight performance advantage, though it proves to be too demanding for 4K gaming.

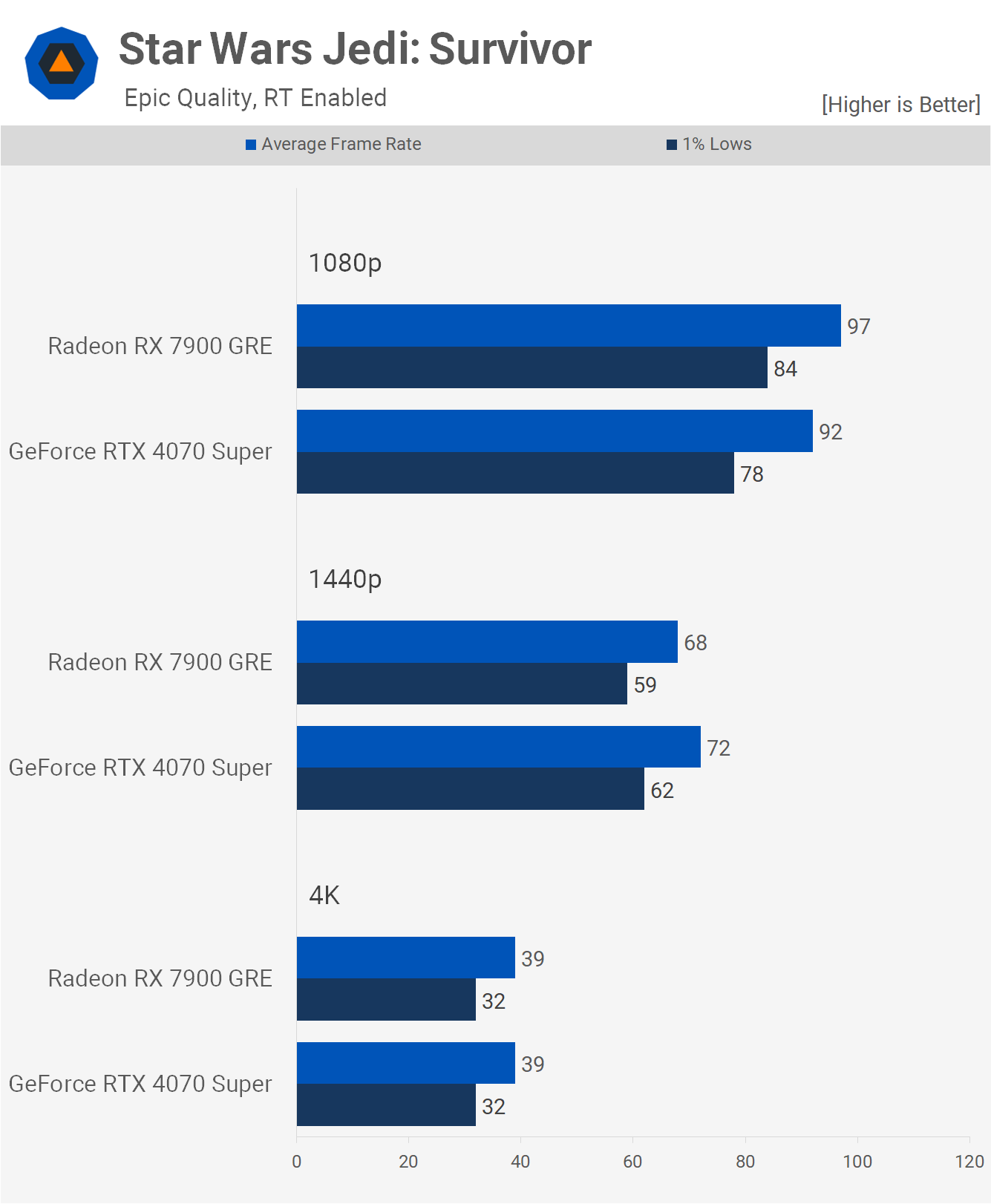

In this case, enabling ray tracing doesn’t significantly impact performance, so the results at 1440p remain acceptable, with minimal differences between the two GPUs.

Ideally, when using ray tracing, you’ll want to enable upscaling, which grants the 7900 GRE a substantial performance advantage at 1080p and 1440p, while frame rates at 4K are similar. Of course, FSR image quality might not be as high as DLSS at those lower resolutions, but how much that matters is subjective, and it’s a trade-off you accept if you prioritize upscaled performance.

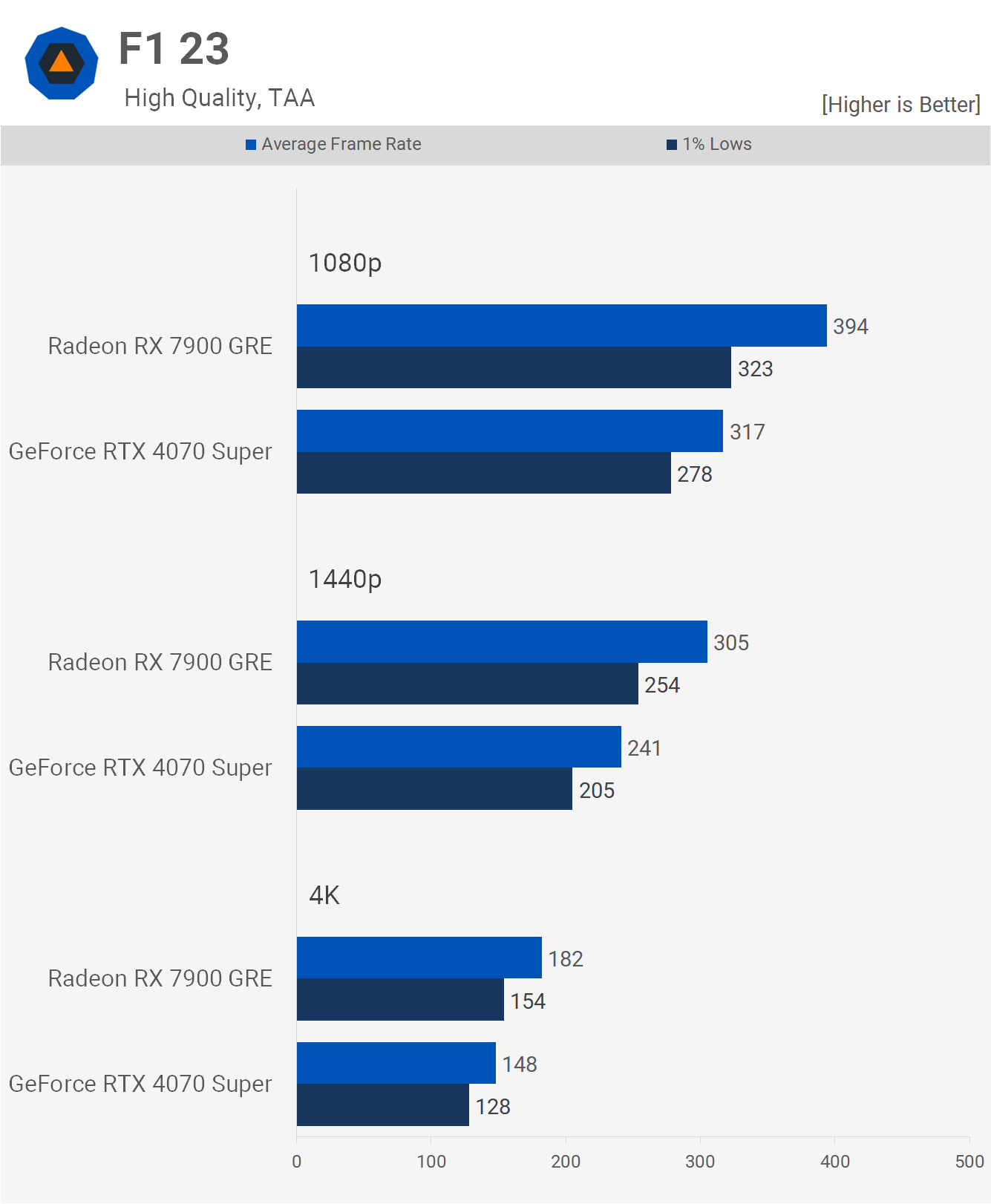

F1 23, tested using the ‘high preset’ for rasterization results, sees the 7900 GRE dominating, delivering 24% greater performance at 1080p, 27% at 1440p, and 23% at 4K.

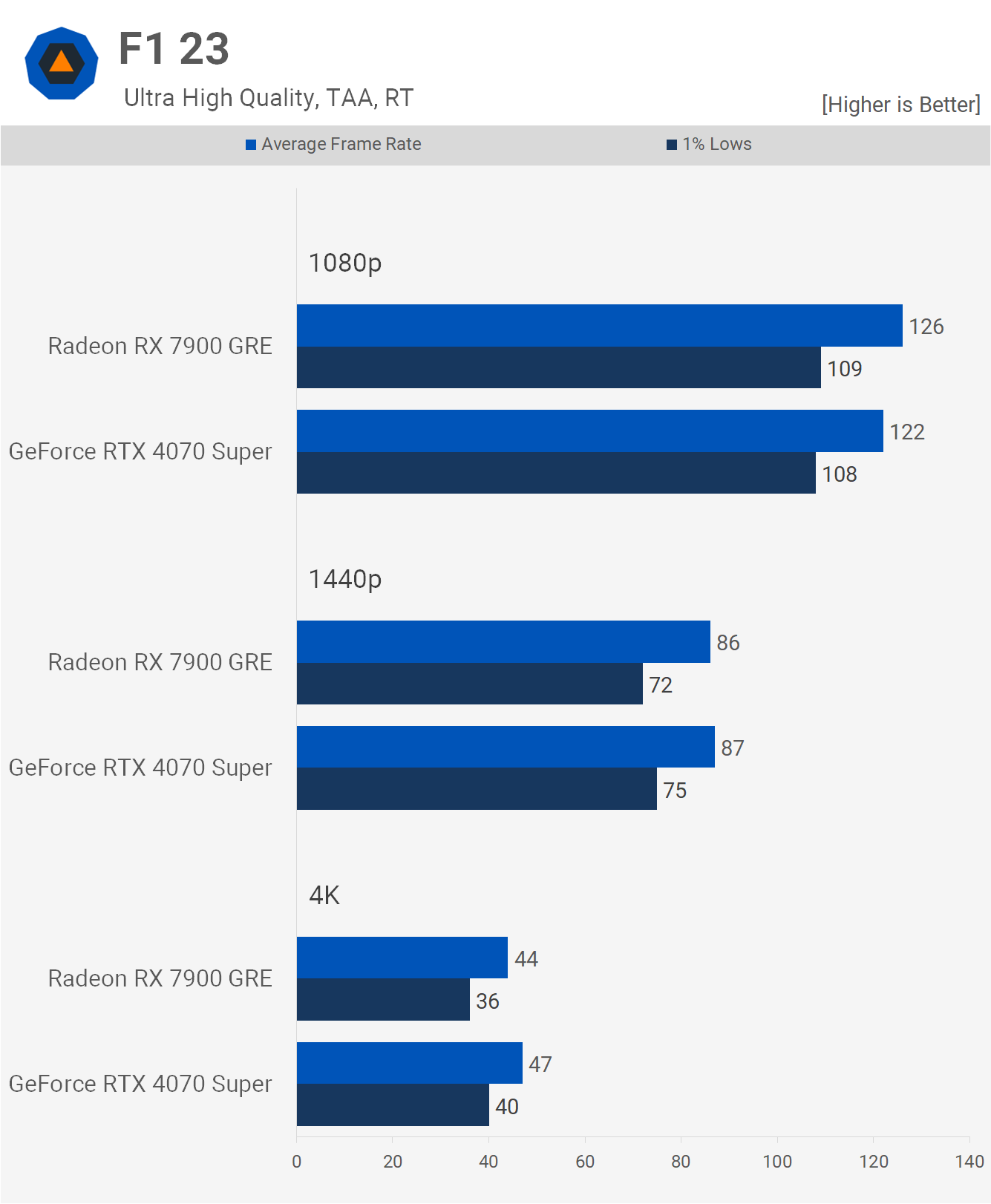

Then, enabling the ‘ultra high preset,’ which activates ray tracing, results in nearly identical performance between the GPUs. Importantly, frame rates are more than halved for what is perceived as an extremely minor visual upgrade, suggesting that RT effects may not be worthwhile in this title.

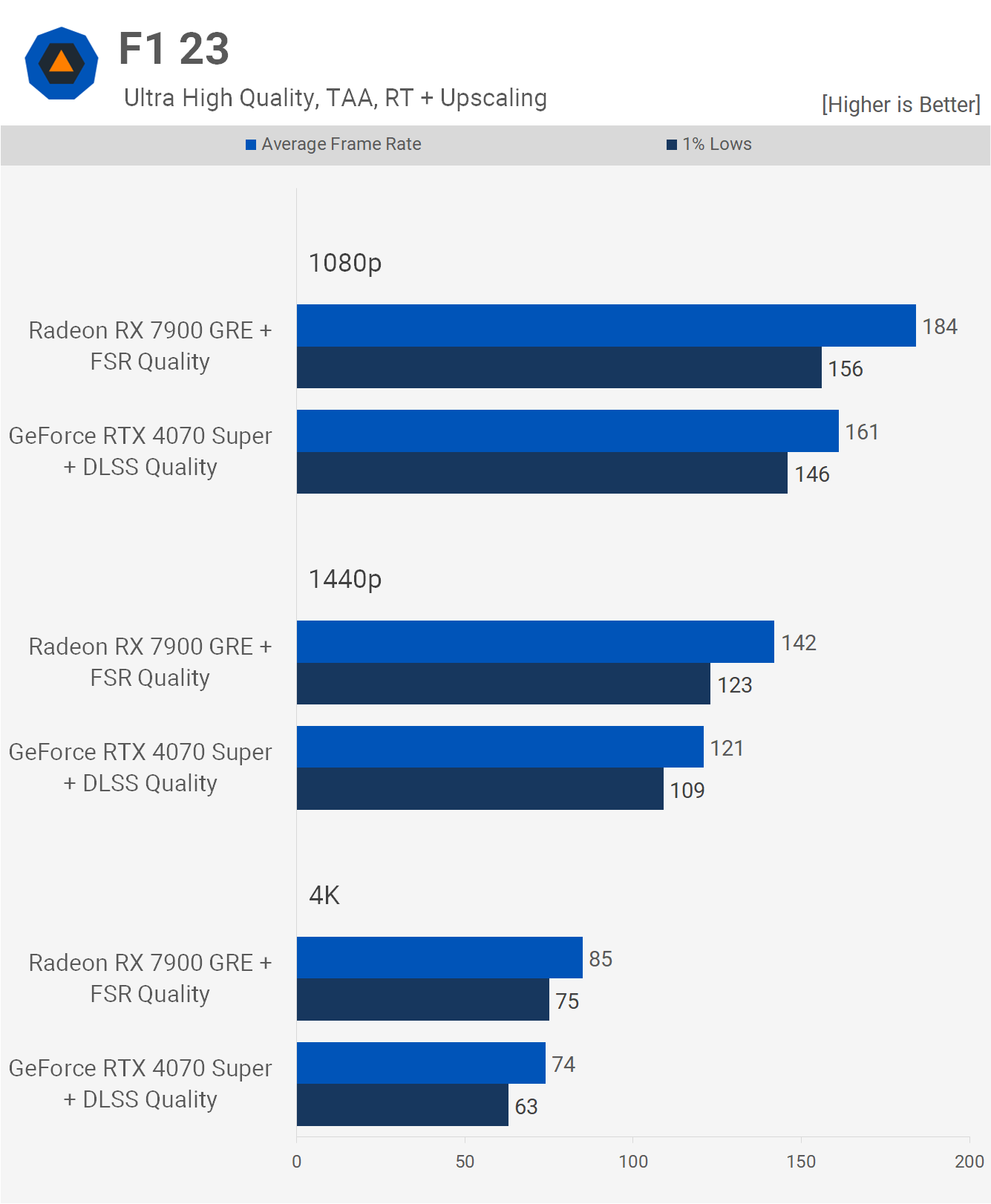

Once more, ray tracing greatly benefits from upscaling. With quality upscaling modes enabled, the Radeon GPU becomes quite dominant, offering up to 17% better performance.

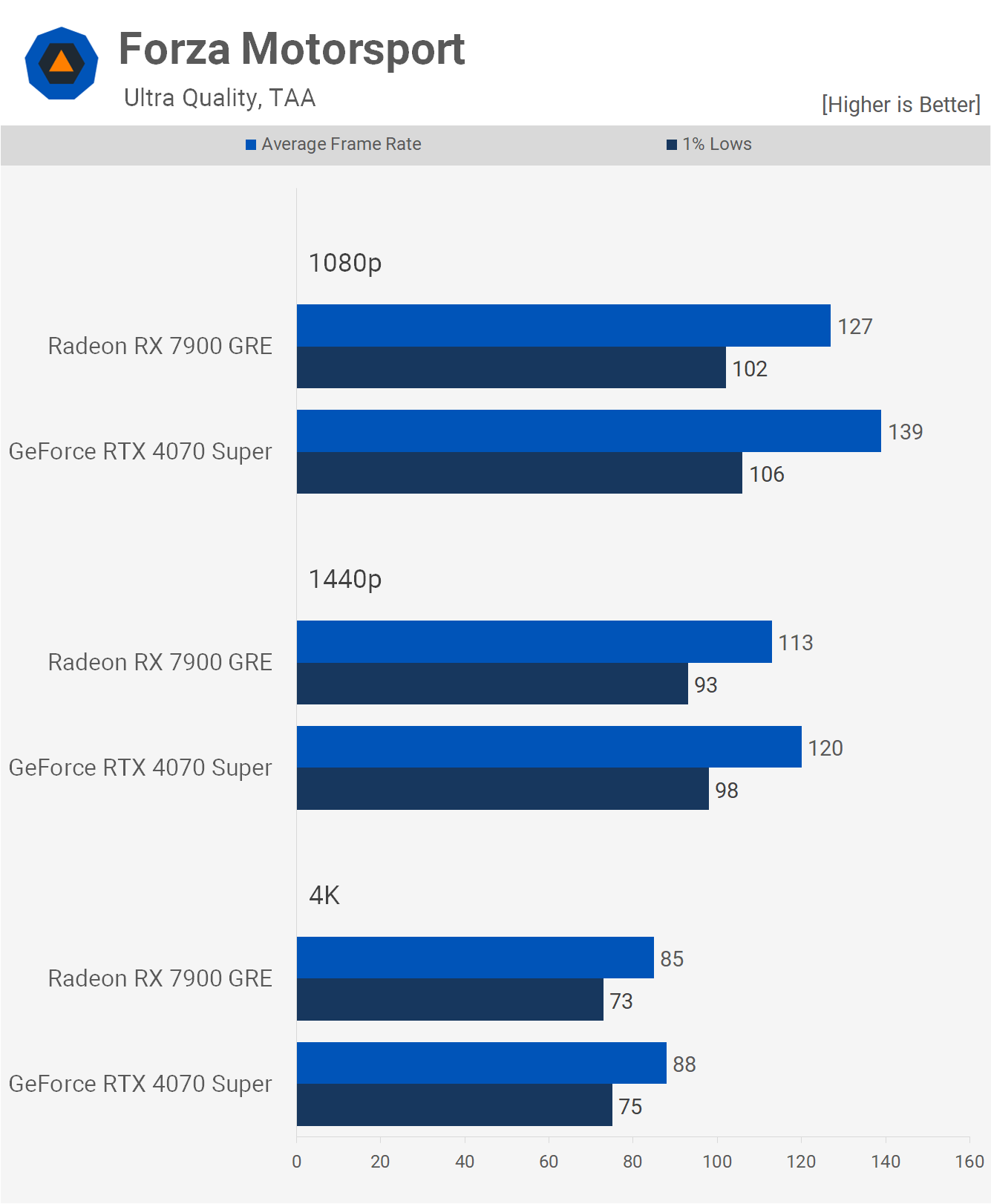

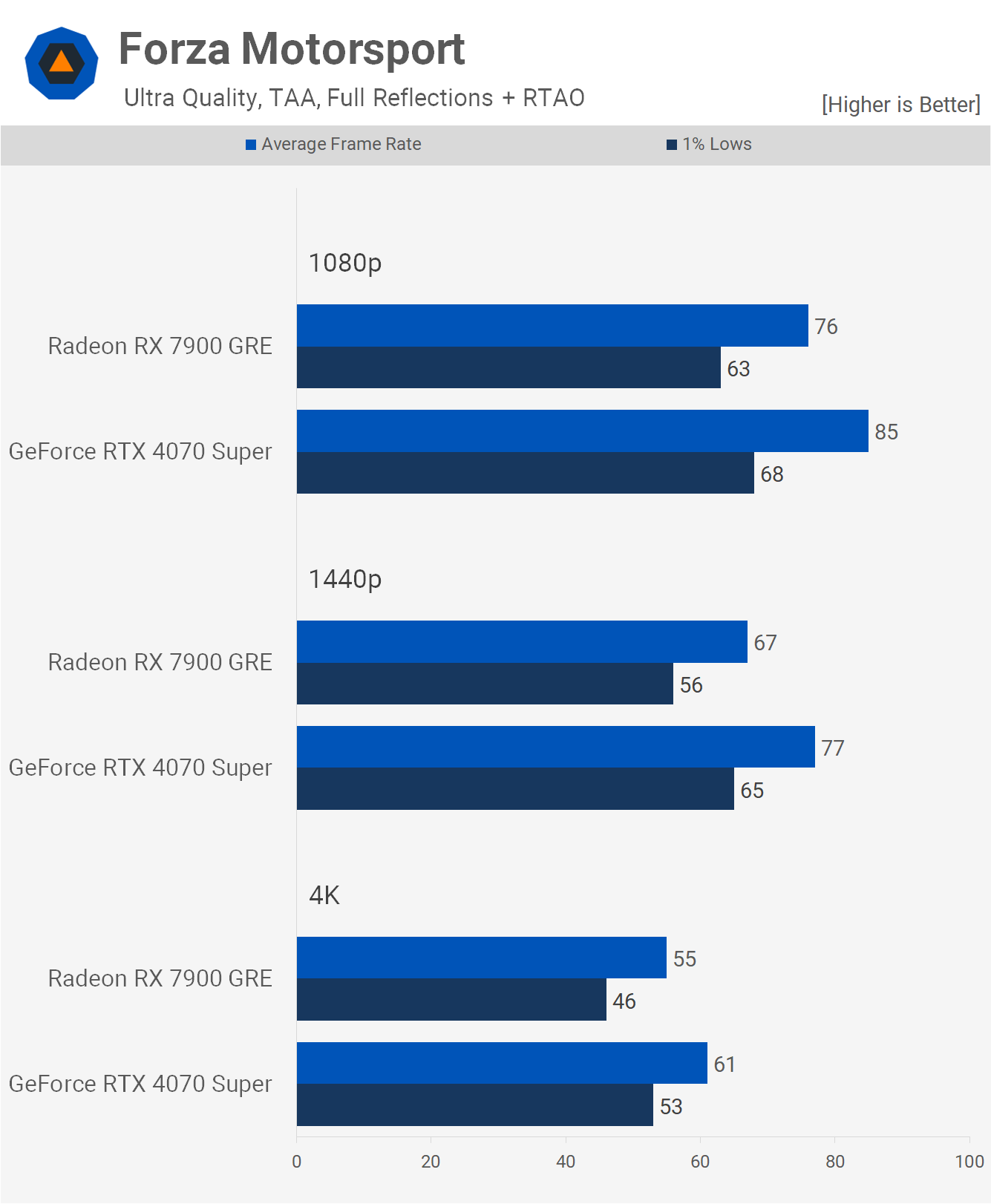

Moving to Forza Motorsport and starting with rasterization results, the GeForce GPU leads, at least at 1080p and 1440p, where it’s up to 9% faster. However, the margin at 4K is negligible, with the 4070 Super just 4% faster.

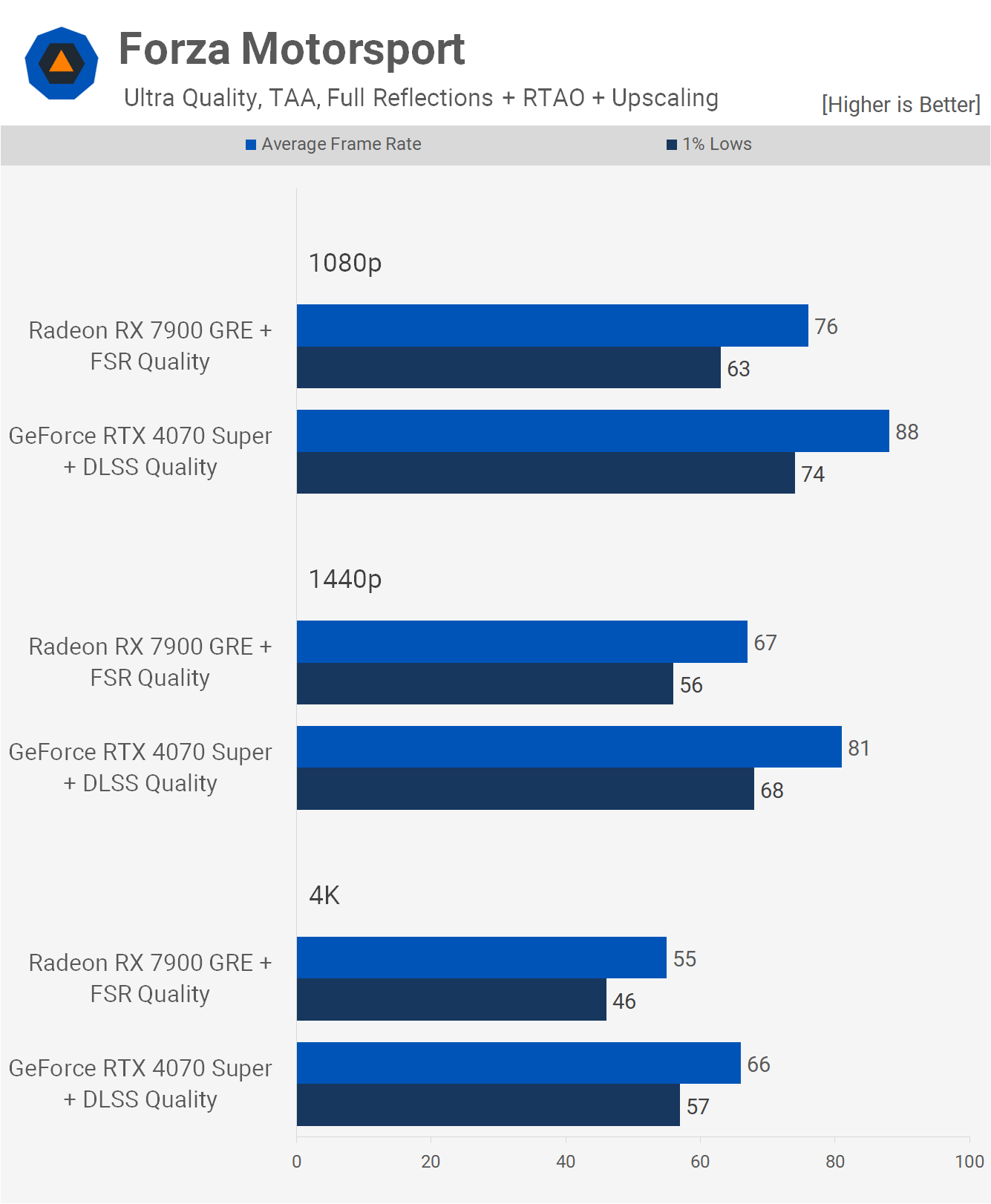

Enabling ray tracing gives the 4070 Super an advantage, allowing it to render 12% more frames at 1080p, 15% more at 1440p, and 11% more at 4K.

As previously discovered, although Forza Motorsport supports FSR, it’s non-functional, a significant frustration since game owners have reported this issue for months without resolution. Consequently, the 4070 Super is now 16% faster at 1080p, 21% faster at 1440p, and 20% faster at 4K.

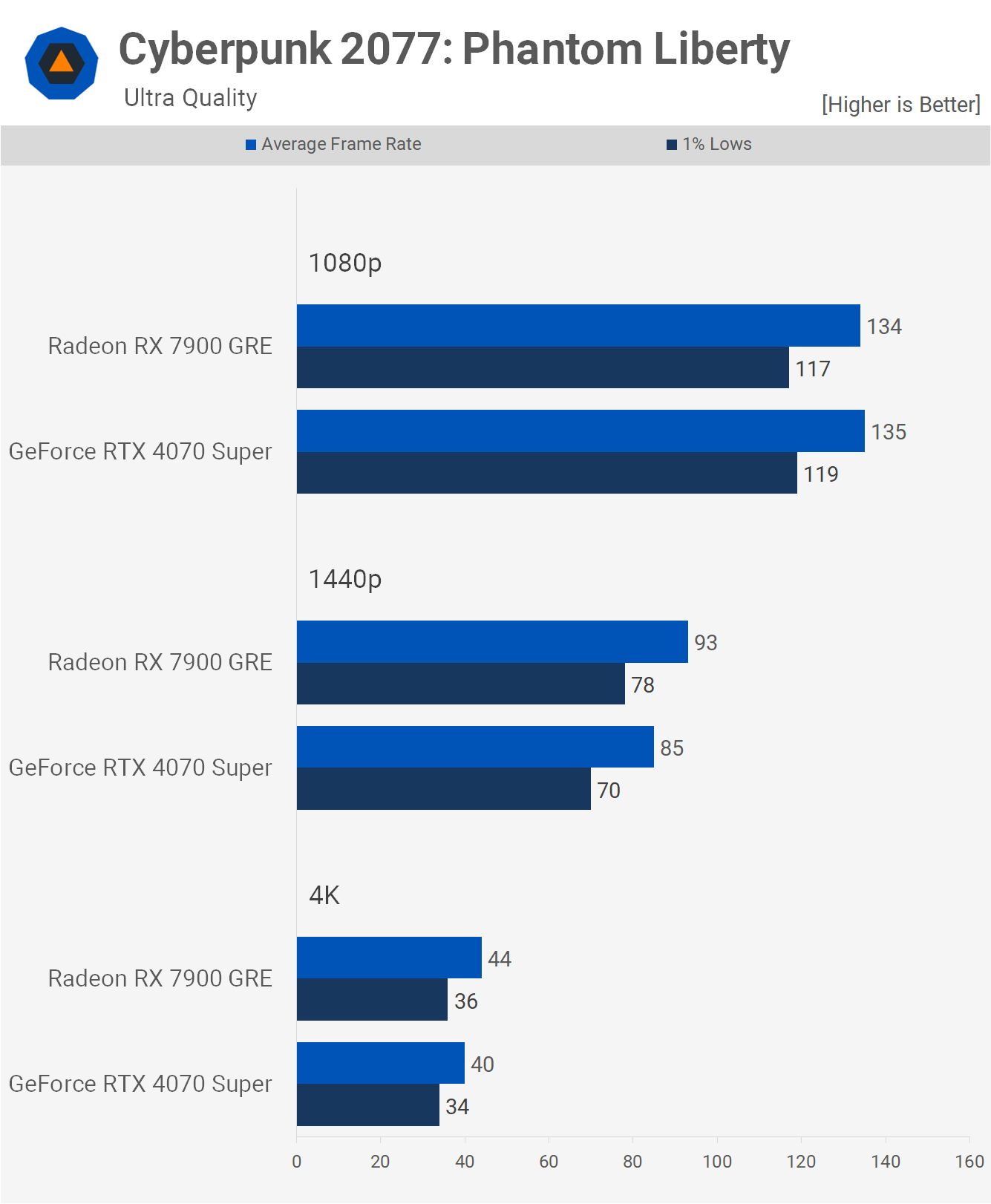

Cyberpunk 2077: Phantom Liberty is well-optimized for both AMD and Nvidia GPUs, resulting in similar performance using the ultra-quality preset. Both GPUs deliver acceptable performance at 1440p, but 4K likely necessitates quality setting adjustments.

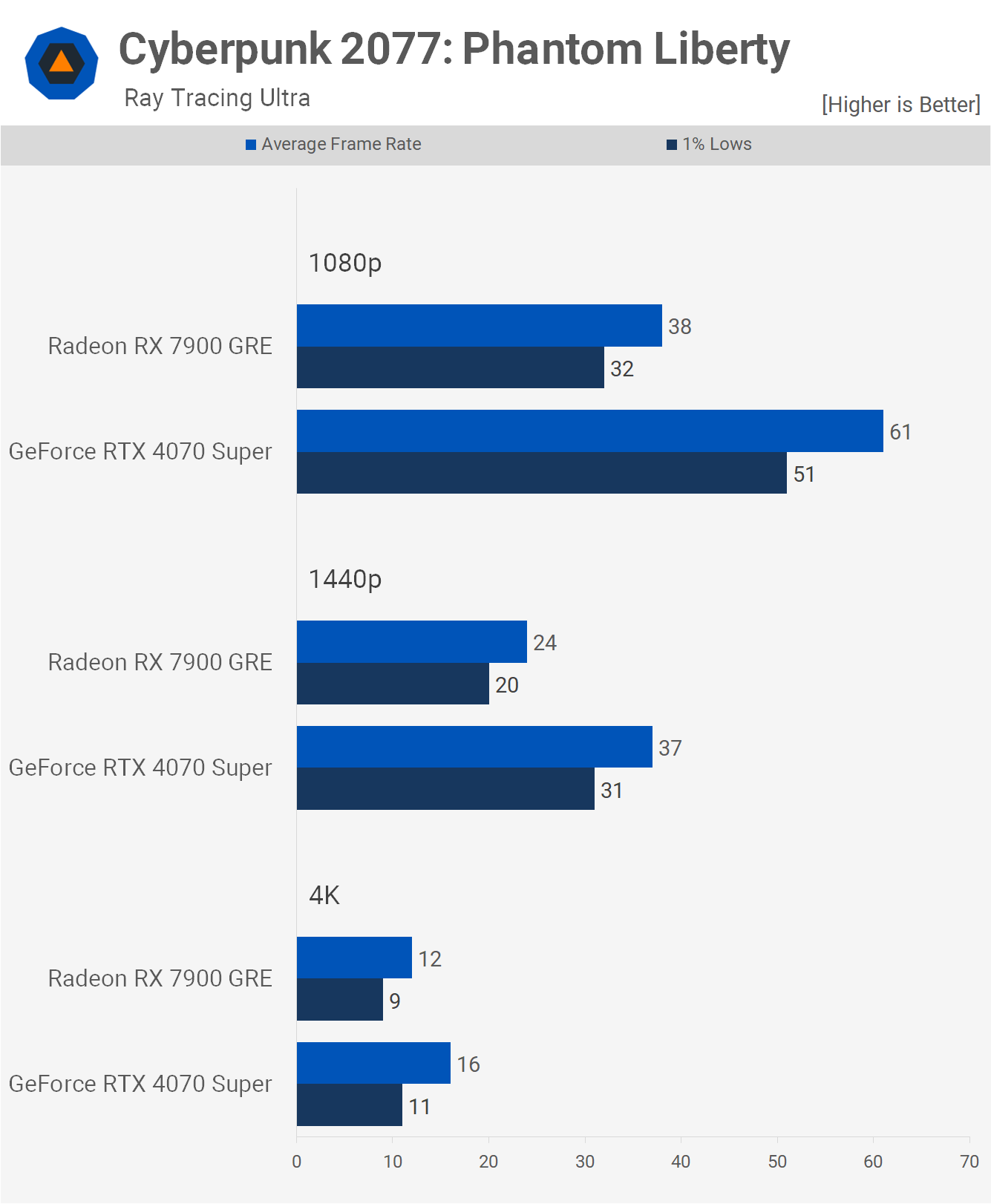

With the Ray Tracing Ultra preset enabled, performance is poor with the 4070 Super and downright terrible for the 7900 GRE, which could only achieve 38 fps at 1080p, making the GeForce GPU 61% faster.

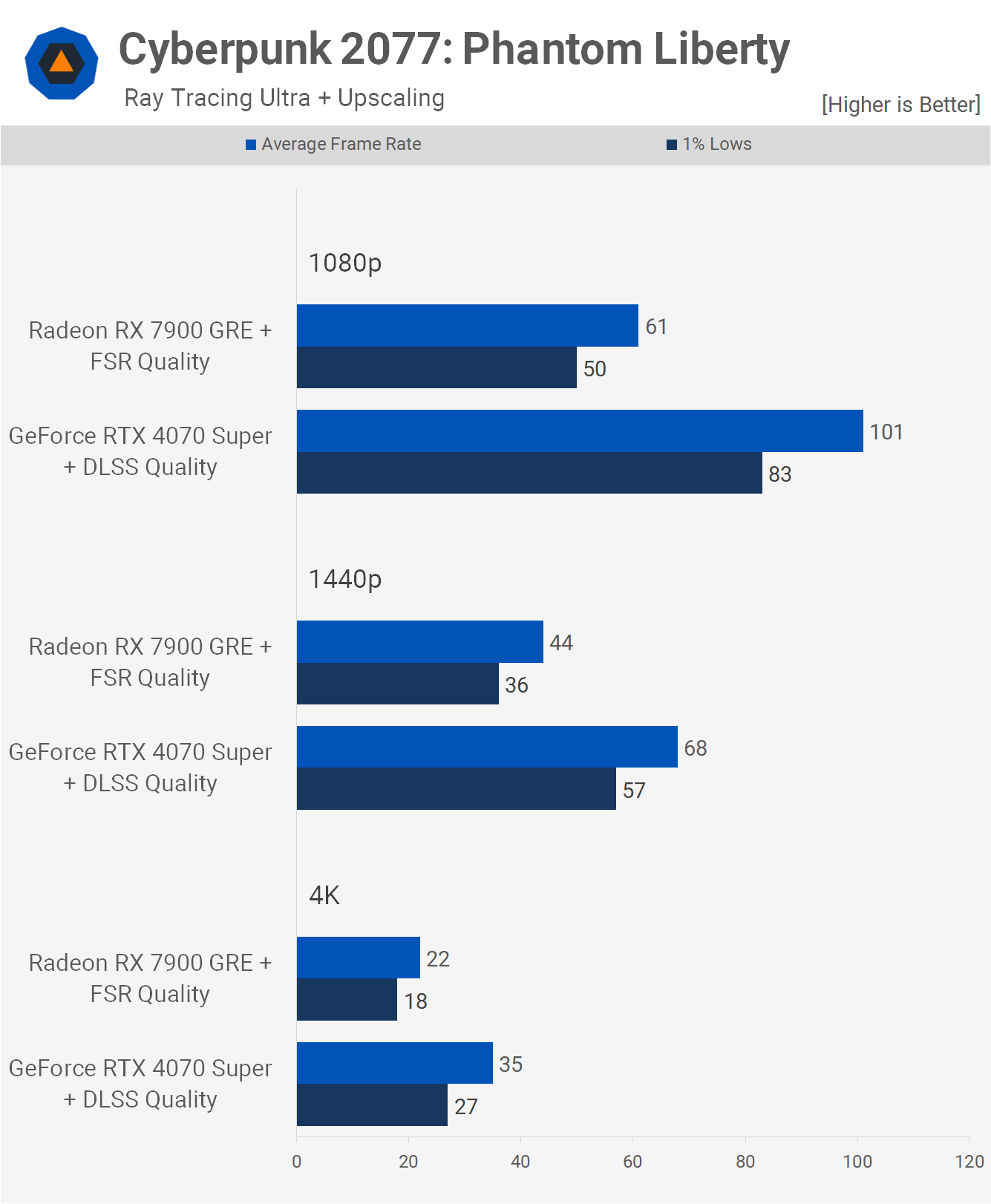

As is typical, upscaling is necessary, with FSR enabling the 7900 GRE to reach an average of 61 fps at 1080p, making the 4070 Super 66% faster. The GeForce GPU also achieves 68 fps at 1440p, making it 55% faster, which is a respectable performance.

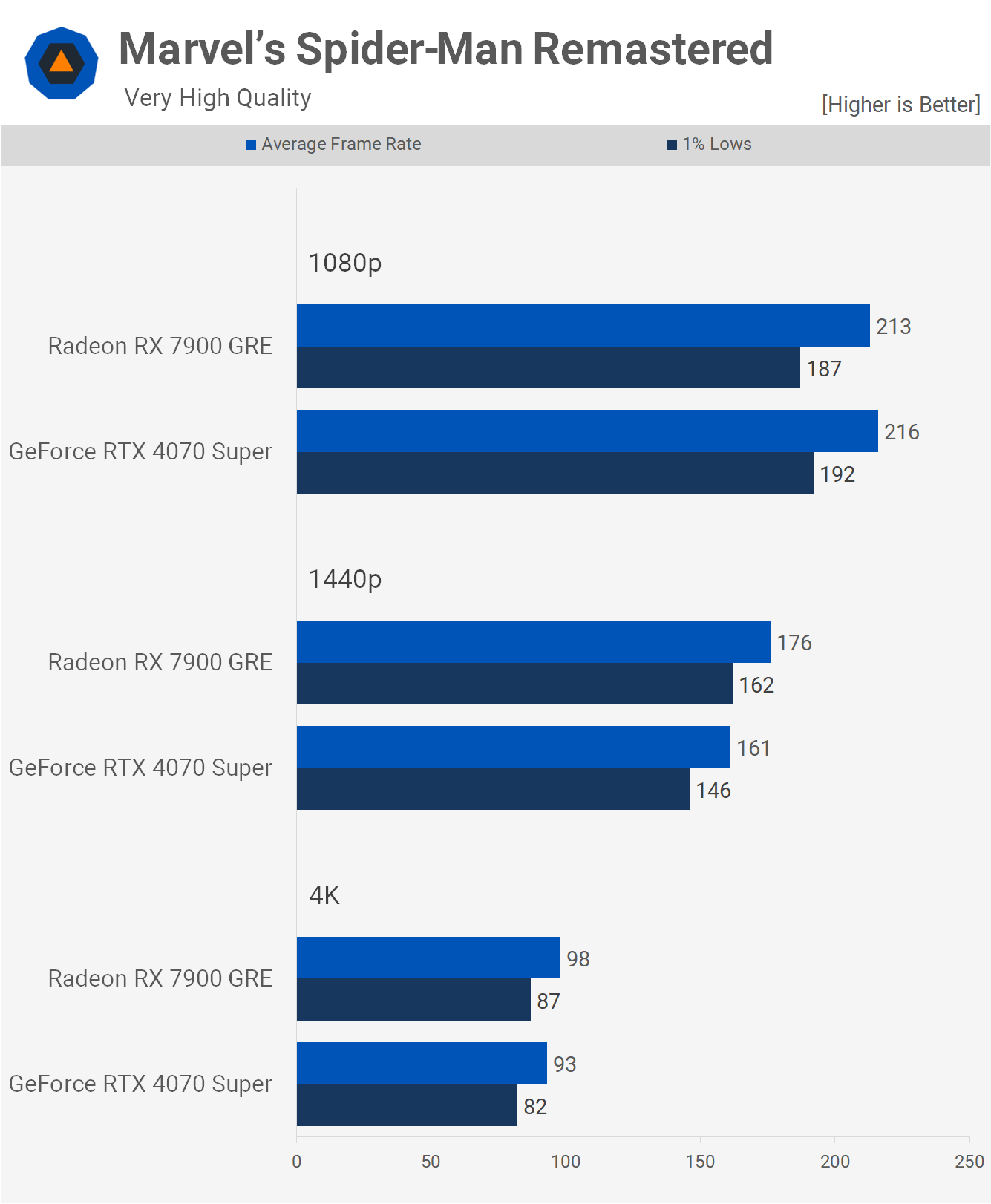

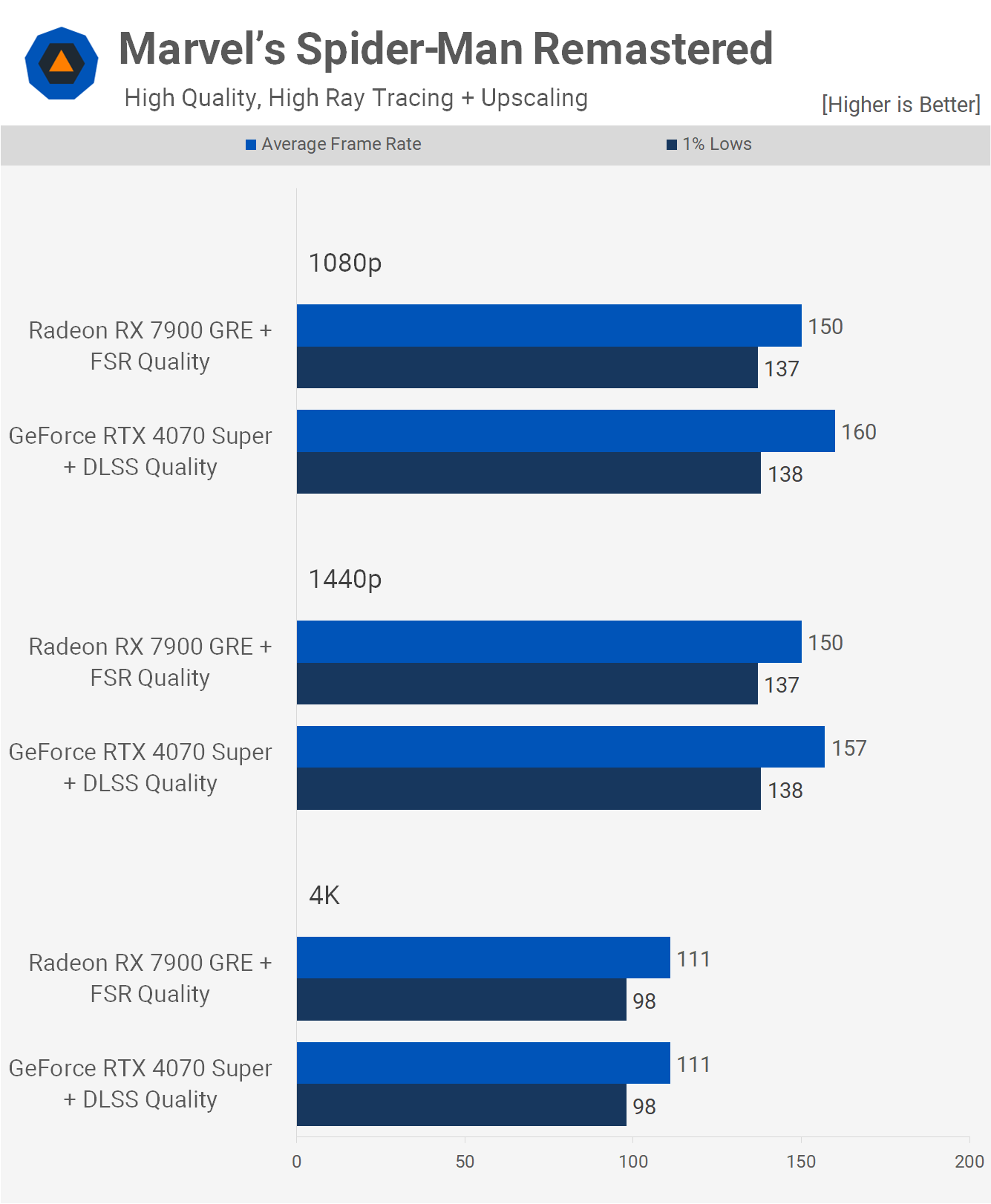

Marvel’s Spider-Man Remastered, another well-optimized title, saw both GPUs max out the 7800X3D at 1080p. At 1440p, the GRE took a 9% lead, and then just 5% at 4K. Performance at all three tested resolutions was excellent.

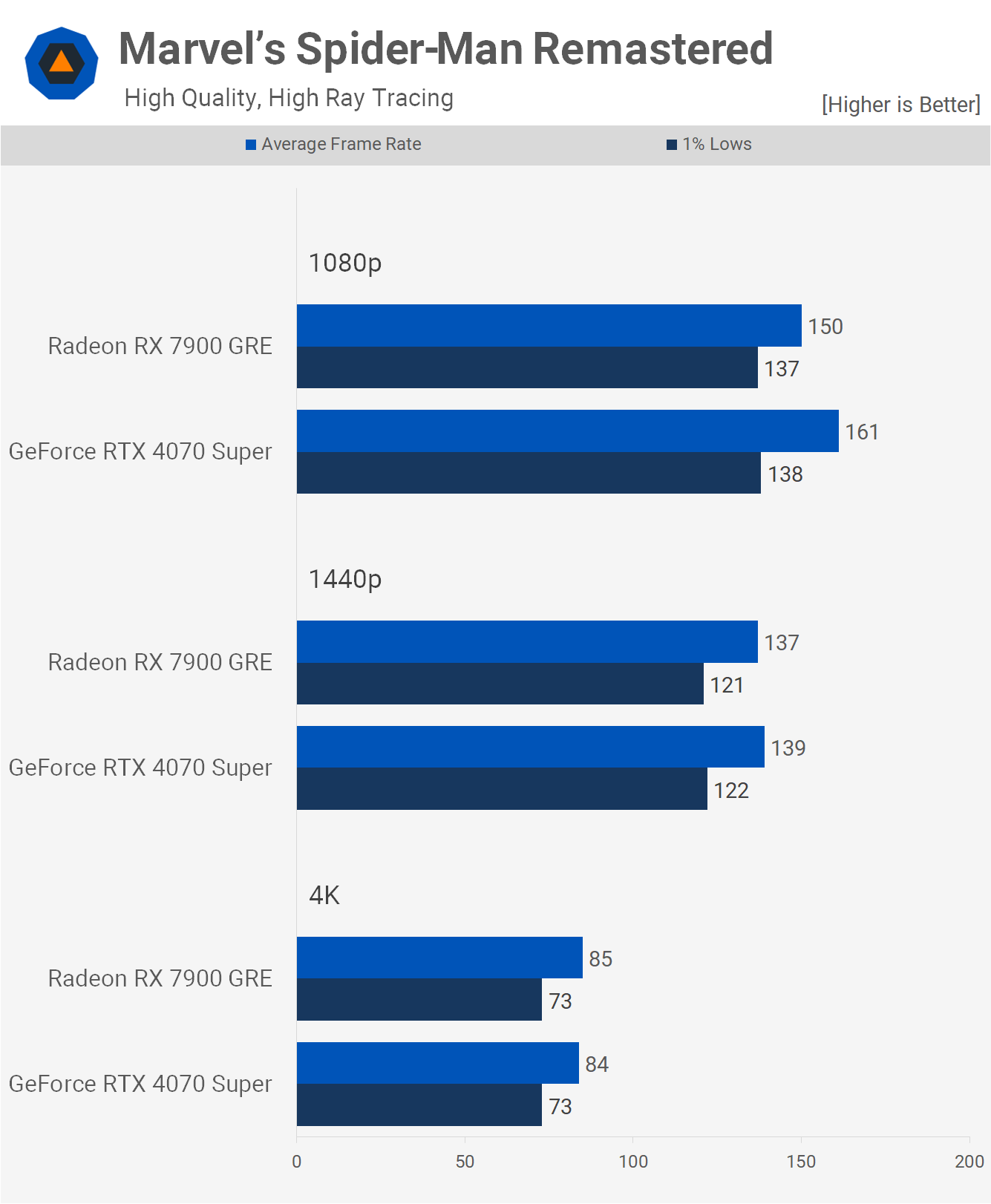

Even with ray tracing enabled, performance remained excellent and highly competitive, with nearly identical results at 1440p and 4K. The game was perfectly enjoyable at 4K with just over 80 fps.

With upscaling enabled, which seems unnecessary for these GPUs at 1080p and 1440p, performance remains competitive. Both products achieved the same 111 fps at 4K, indicating excellent overall performance in this title.

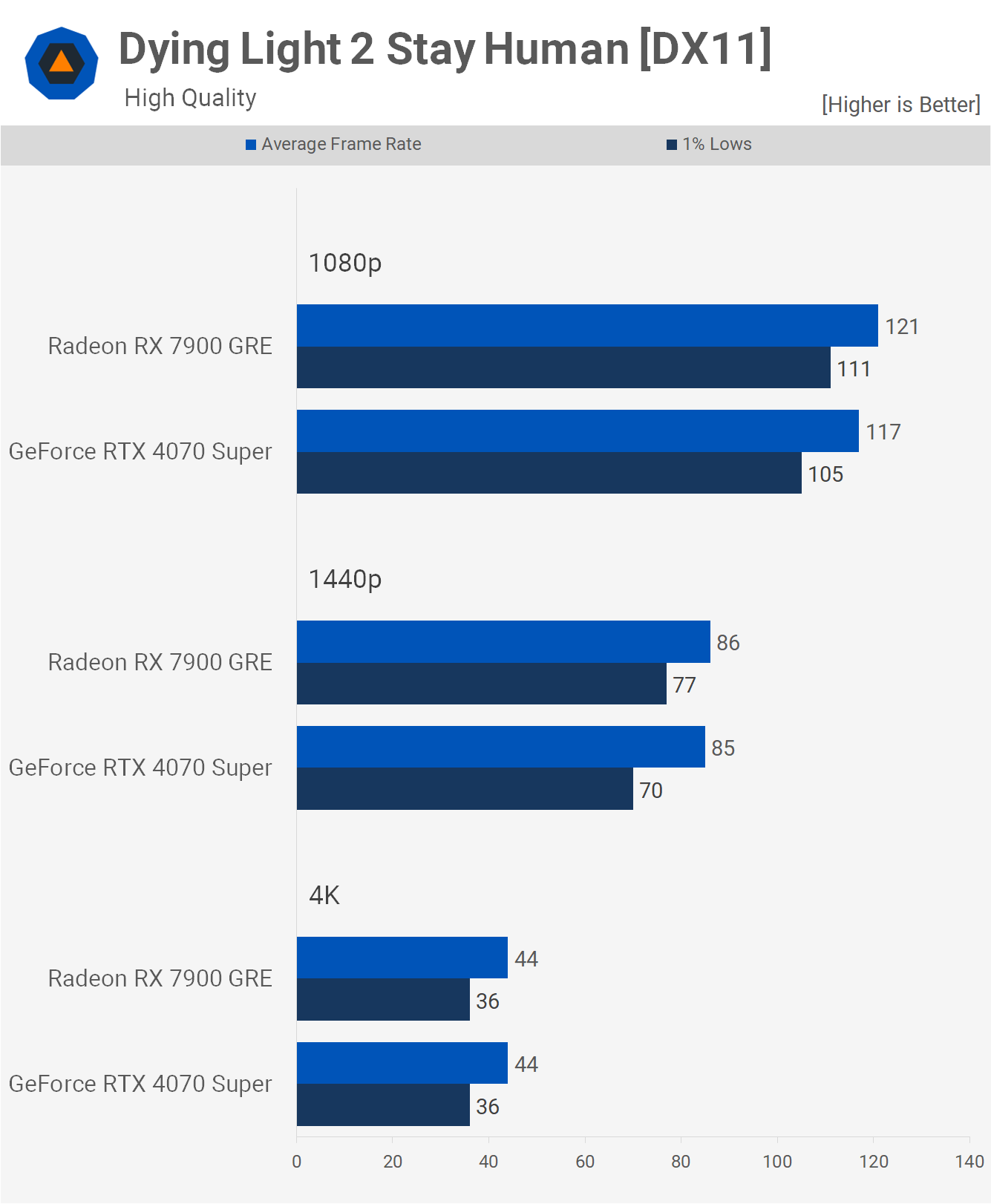

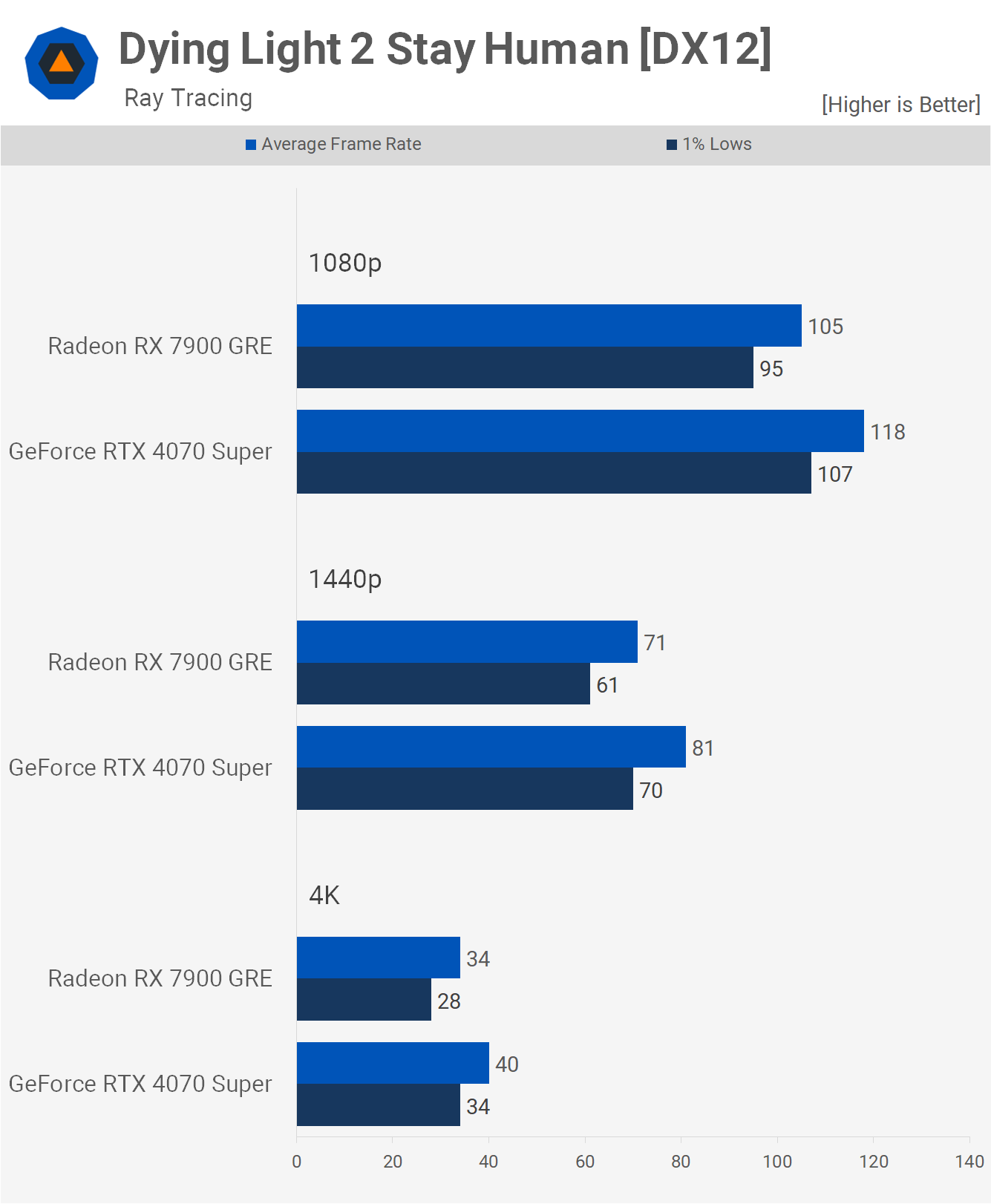

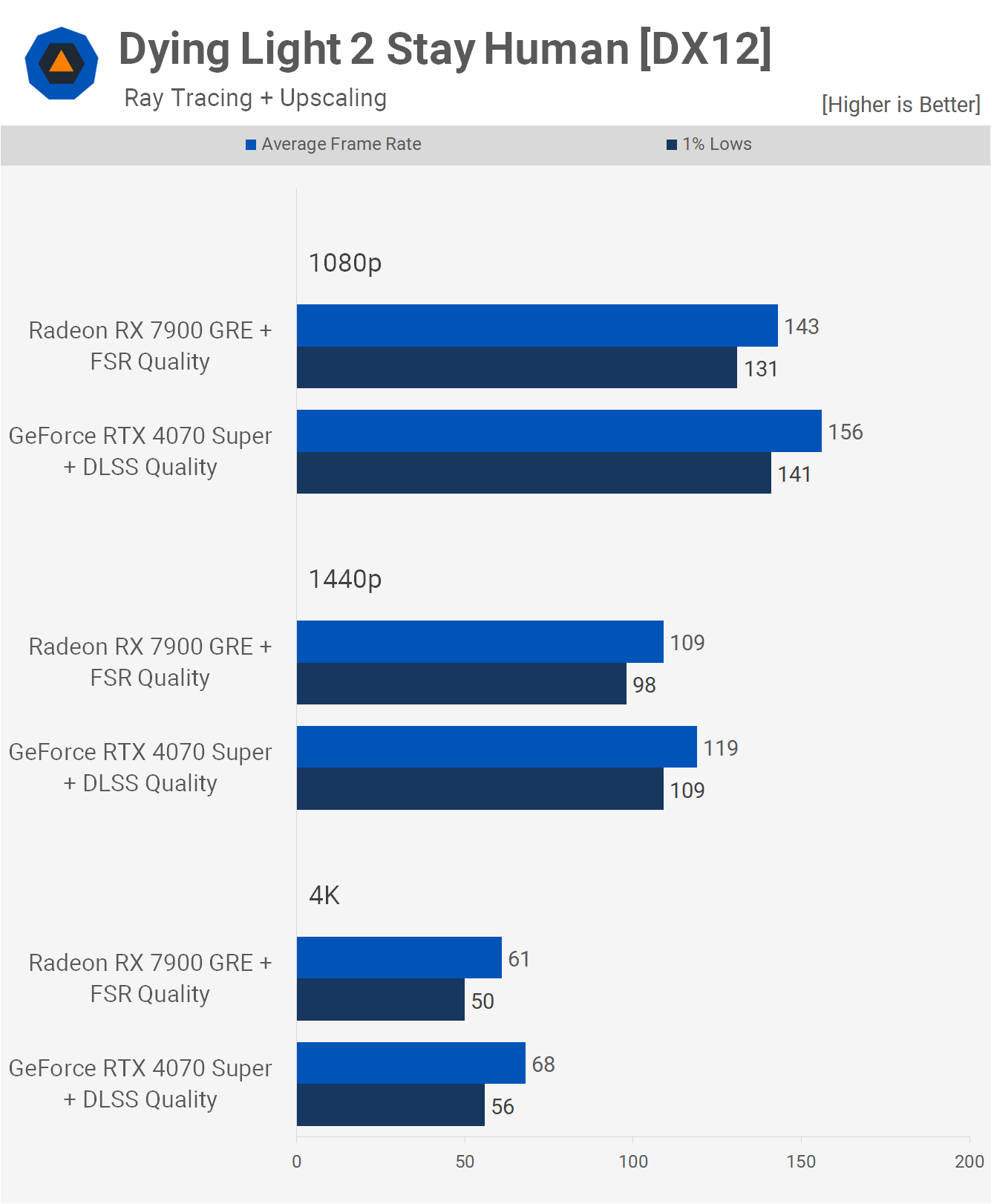

Dying Light 2, when using the high-quality preset, showed similar performance between both GPUs. The 7900 GRE offered slightly better 1% lows, but overall, the experience was comparable.

Enabling ray tracing favored the 4070 Super, which delivered 12% greater performance at 1080p, 14% at 1440p, and 18% at 4K. Frame rates at 1080p and 1440p were acceptable, though, so the Radeon GPU still provided a good experience.

With upscaling enabled, 60 fps or more at 4K is achievable. Although the 4070 Super was 11% faster here, playing with the 7900 GRE remained a viable option.

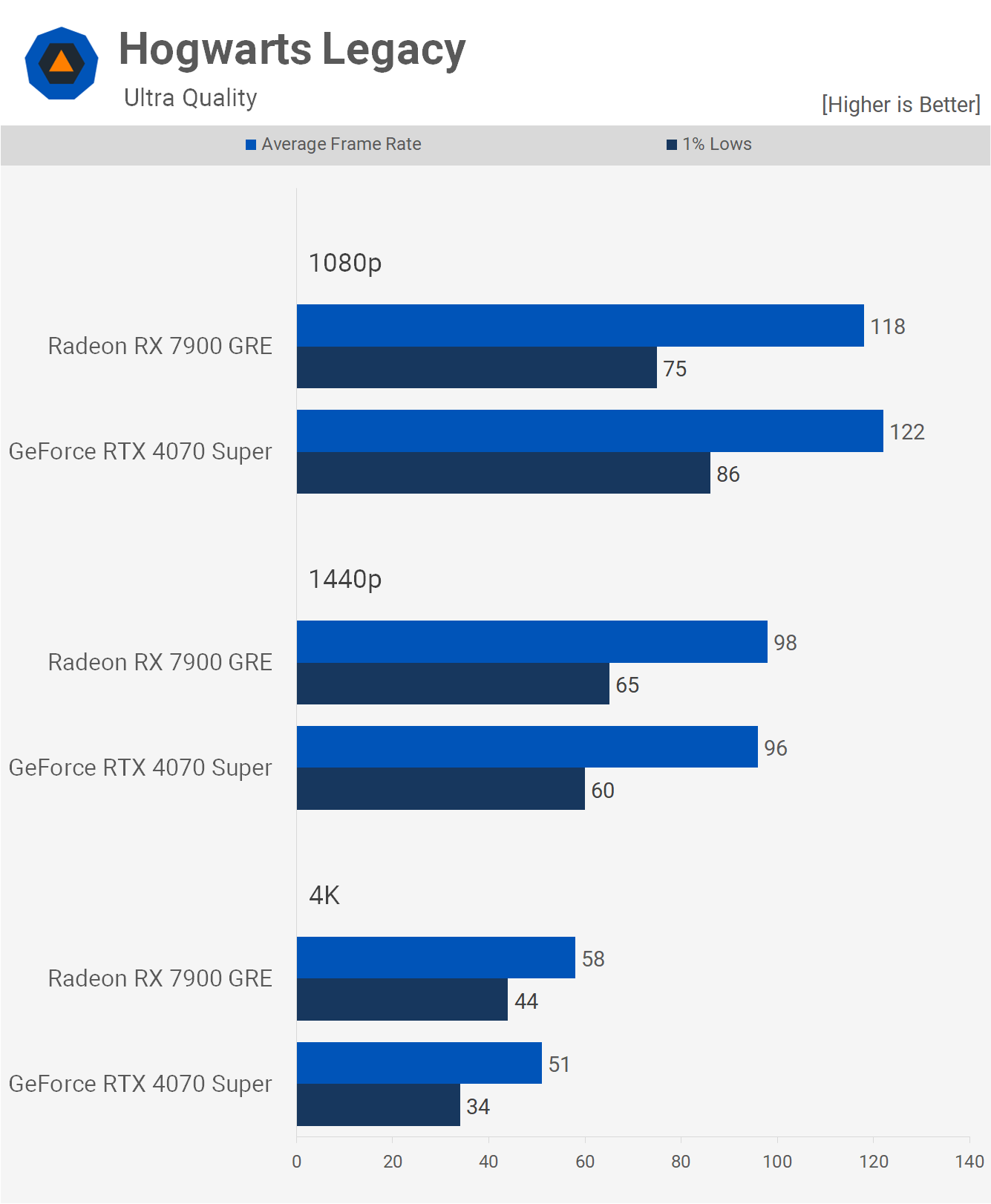

In Hogwarts Legacy, results were very similar at 1080p and 1440p. The 4070 Super delivered better 1% lows at 1080p, but the 7900 GRE showed slightly more power at 4K.

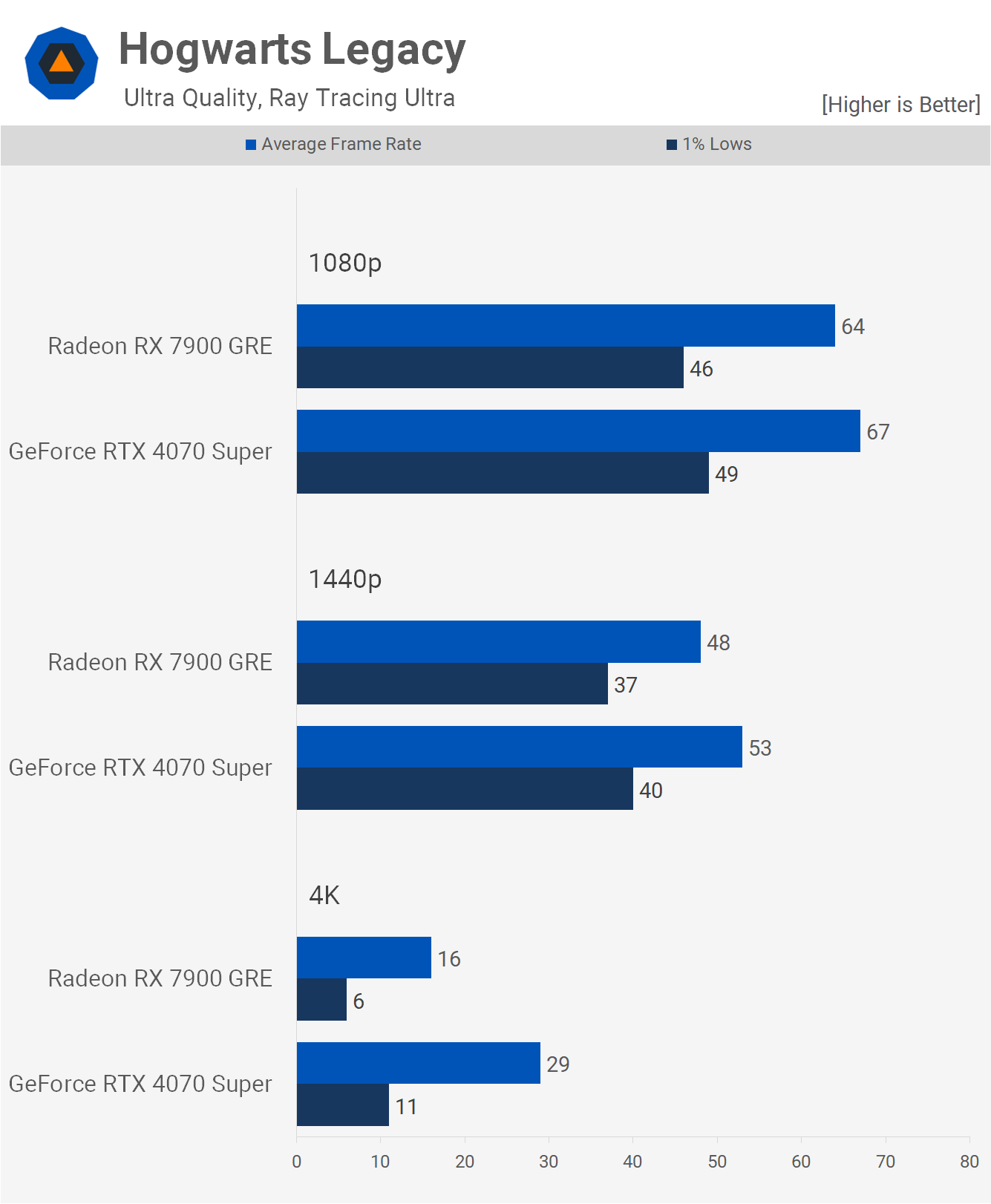

Enabling ray tracing negatively impacted the 7900 GRE in this title, and while the GeForce GPU was faster, the advantage was only about 5% at 1080p and 10% at 1440p. Performance at 4K was problematic for both models.

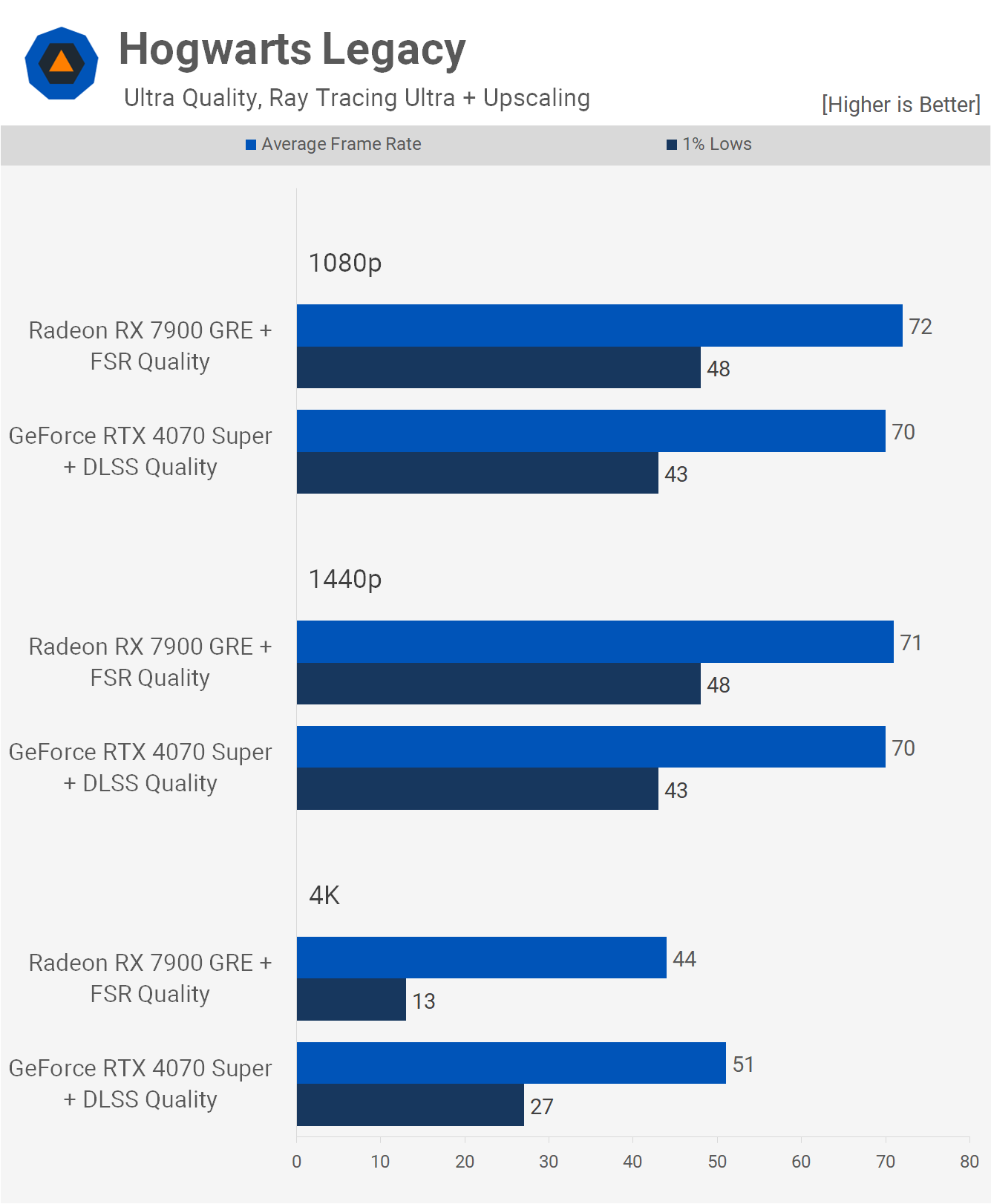

Upscaling significantly aids 4K performance, but even so, the frame time performance was poor, leading to a suboptimal experience. At 1080p and 1440p, both GPUs were CPU limited to around 70 fps.

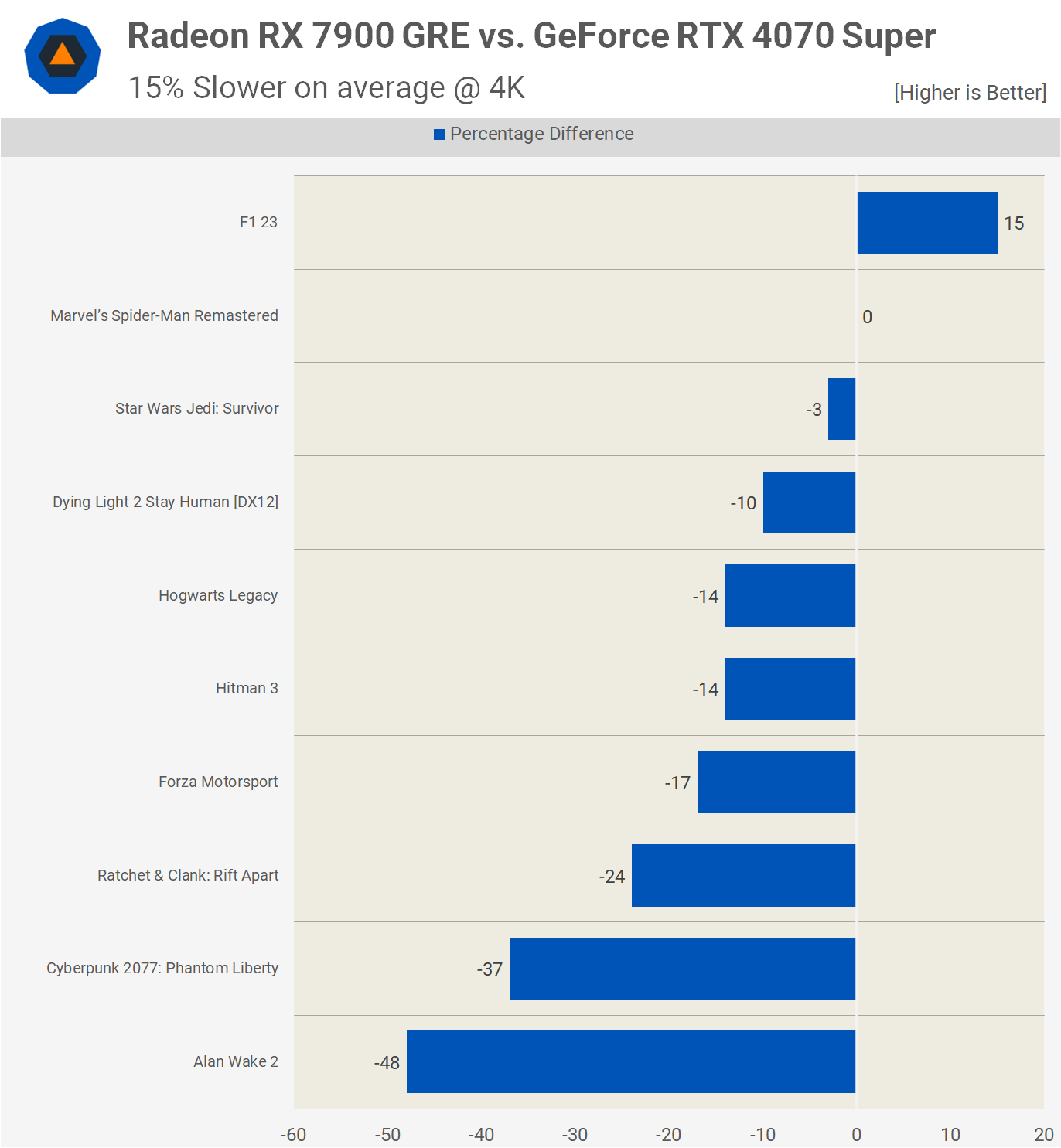

Putting It All Together: Rasterization Performance

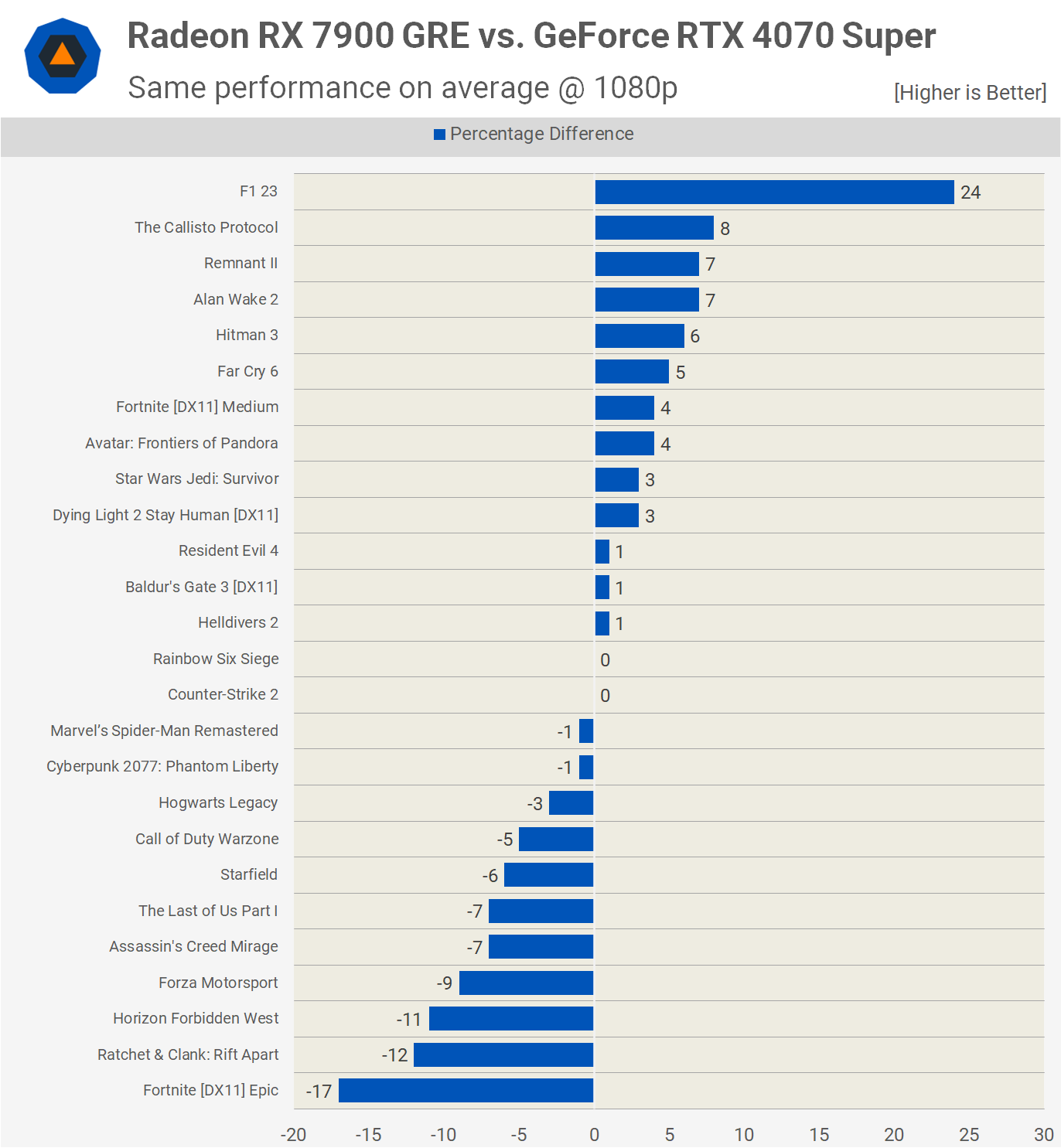

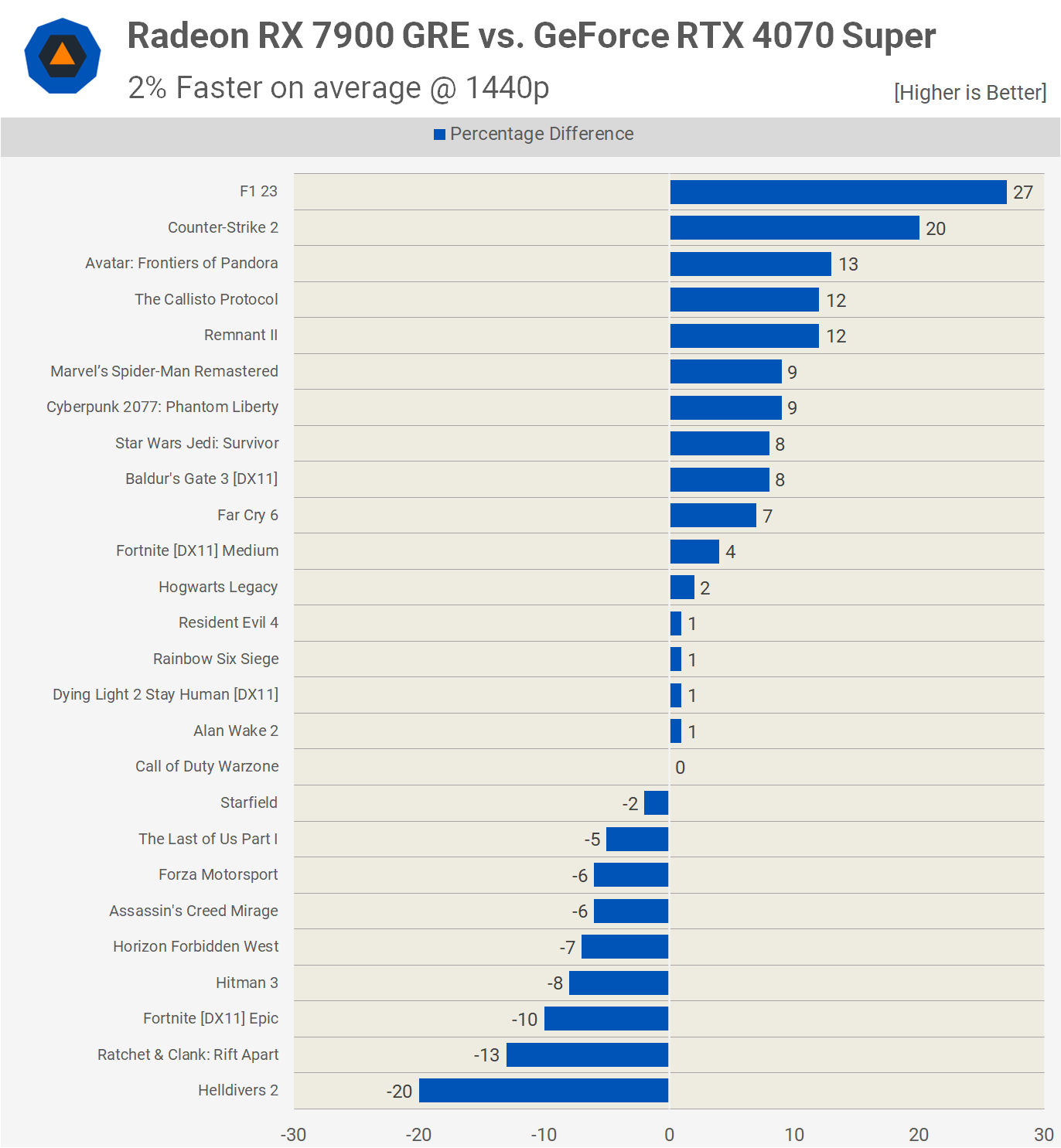

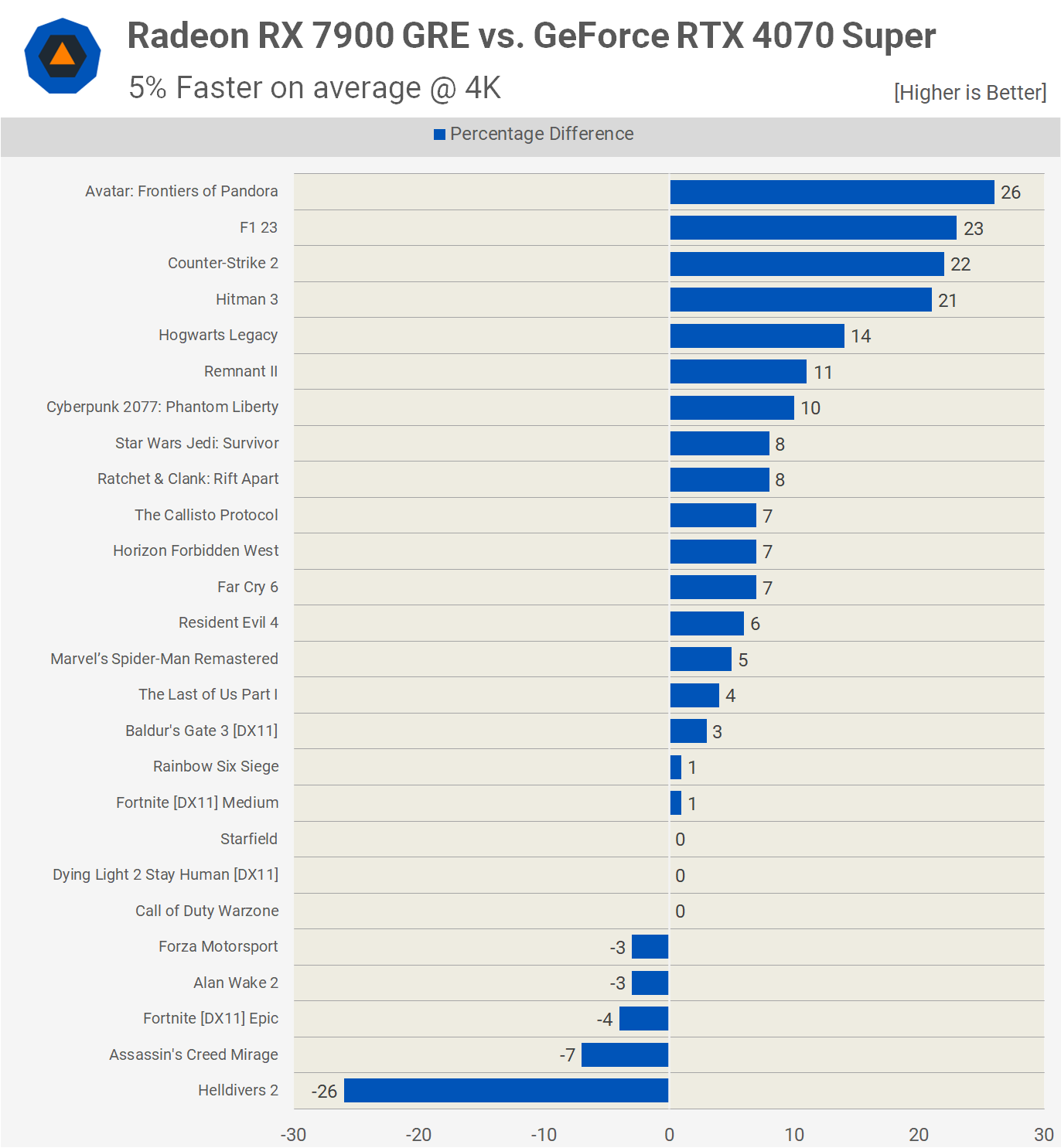

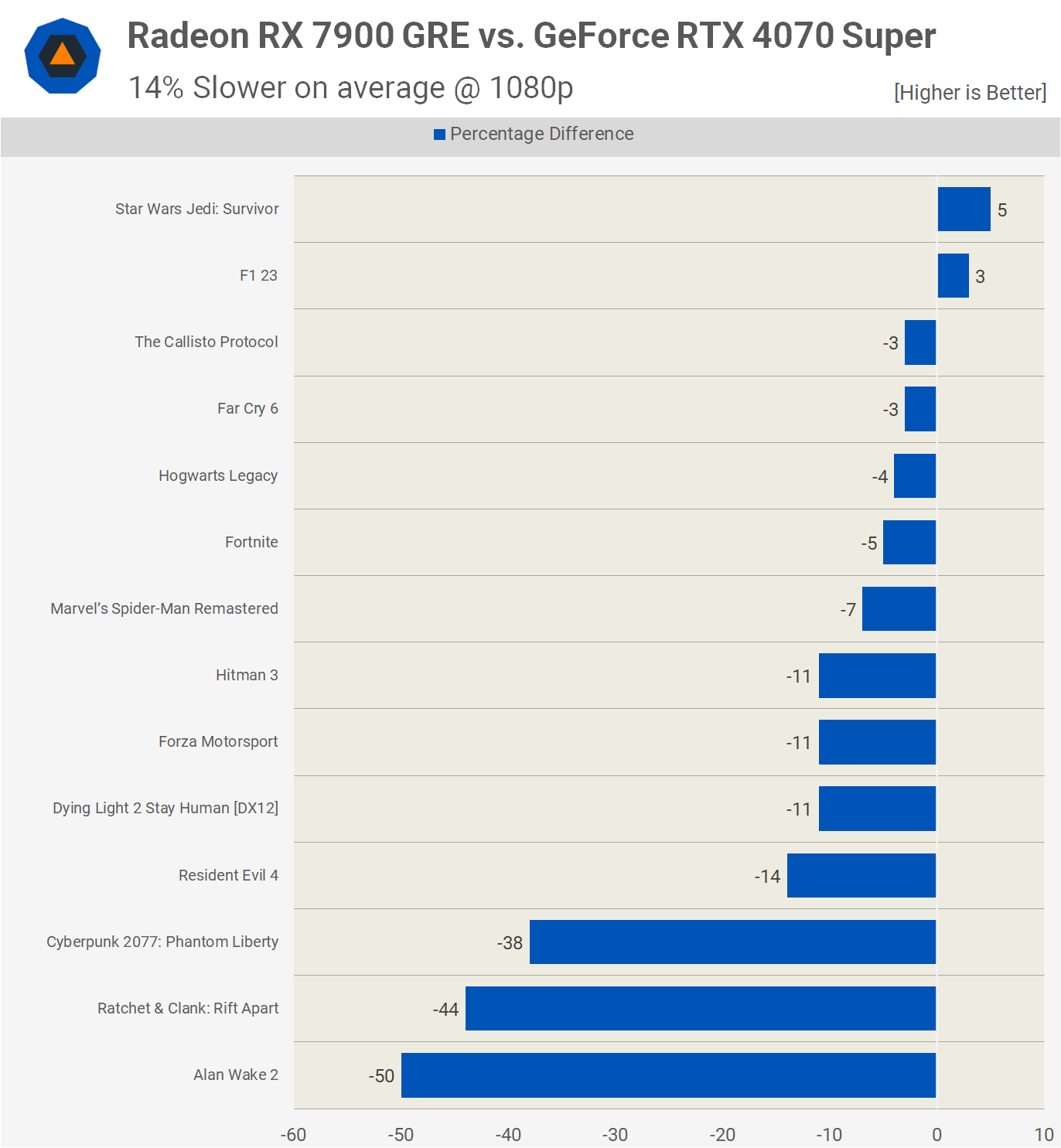

Now, it’s time to review the average results, starting with the rasterization benchmarks, which cover 25 games. At 1080p, the Radeon 7900 GRE was a match for the 4070 Super.

The Radeon GRE excelled in F1 23, while it lagged by double digits in Horizon Forbidden West, Ratchet & Clank, Helldivers 2, and Fortnite using the Epic quality settings.

At 1440p, the 7900 GRE was 2% faster on average, so once again, the performance overall was evenly matched. The Radeon GPU performed particularly well in F1 23, but also stood out in Counter-Strike 2 with a 20% margin.
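The text does not state how the per-game results are combined into an average. One common choice for relative performance is the geometric mean of per-game ratios, sketched below as an assumption rather than the method actually used. The inputs are only the 1440p rasterization margins quoted earlier in this article, so this subset will not reproduce the 25-game figure.

```python
from statistics import geometric_mean

# GRE / 4070 Super performance ratios at 1440p, built from margins in the text.
# Where the 4070 Super led by x%, the ratio is 1 / (1 + x).
gre_over_super_1440p = {
    "Counter-Strike 2": 1.20,
    "Helldivers 2": 1 / 1.25,
    "Remnant II": 1.12,
    "Horizon Forbidden West": 1 / 1.08,
    "The Last of Us Part I": 1 / 1.05,
    "Avatar": 1.13,
    "The Callisto Protocol": 1.12,
    "F1 23": 1.27,
}

# Geometric mean treats a 25% win and a 25% loss symmetrically as ratios.
avg_ratio = geometric_mean(gre_over_super_1440p.values())
print(f"GRE vs 4070 Super across this subset: {(avg_ratio - 1) * 100:+.1f}%")
```

Because the subset leans on games where a margin was called out, it skews toward the outliers; the full data set pulls the average back toward parity.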

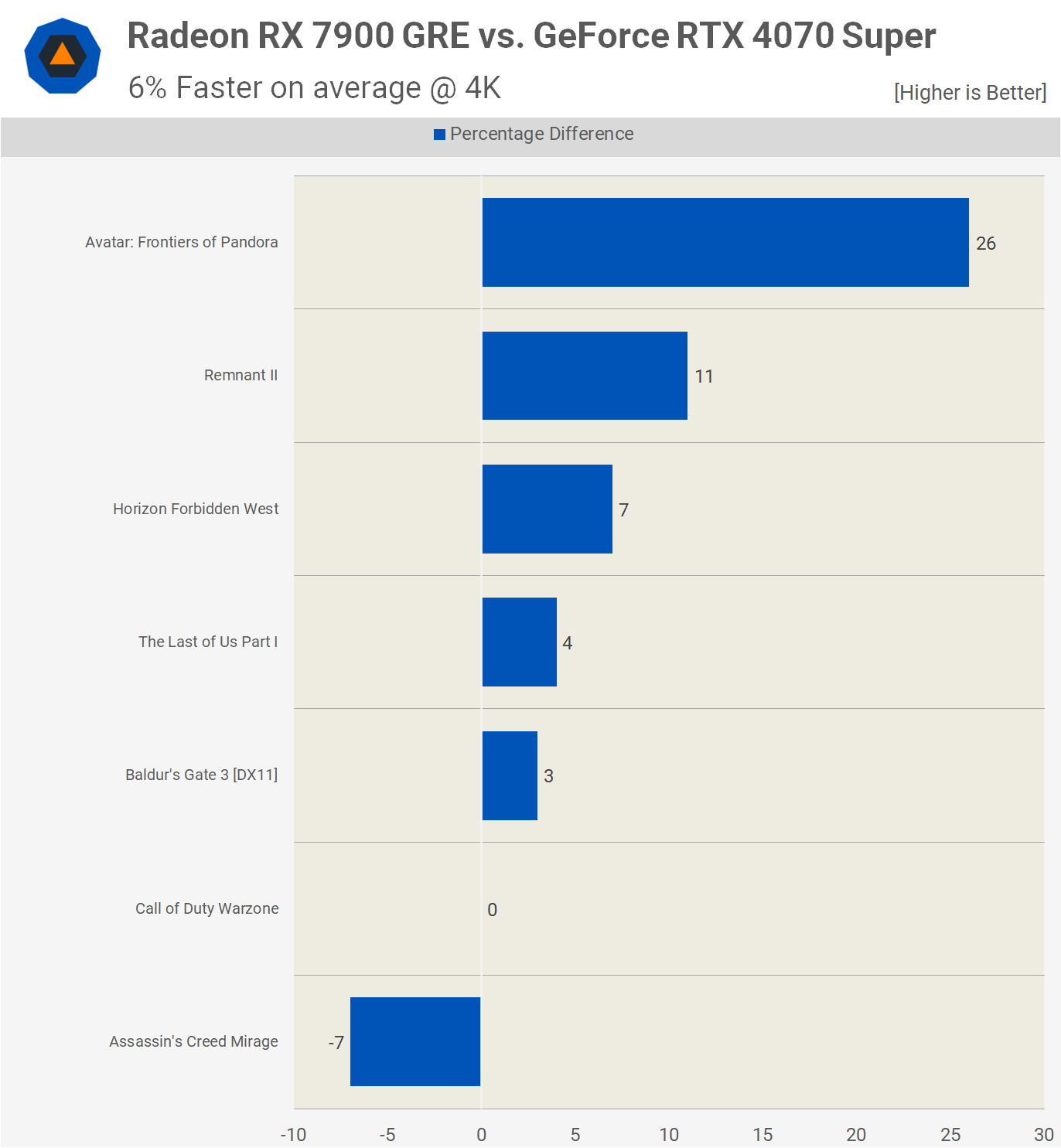

At the 4K resolution, the GRE was 5% faster on average, with Helldivers 2 being the only game where it showed a weaker performance. Apart from that, the Radeon GPU was dominant at this higher resolution.
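To put those averages in value terms, a cost-per-frame comparison can be sketched from the quoted prices ($550 and $600) and the average rasterization margins above. Treating “a match” at 1080p as a 0% difference is an assumption, and the function name is illustrative.

```python
GRE_PRICE, SUPER_PRICE = 550, 600  # USD, from the text

# Average rasterization lead of the 7900 GRE over the 4070 Super
gre_raster_lead = {"1080p": 0.00, "1440p": 0.02, "4K": 0.05}


def relative_cost_per_frame(price_a: float, price_b: float, perf_a_over_b: float) -> float:
    """Cost per frame of card A as a fraction of card B's cost per frame."""
    return (price_a / price_b) / perf_a_over_b


for resolution, lead in gre_raster_lead.items():
    rel = relative_cost_per_frame(GRE_PRICE, SUPER_PRICE, 1 + lead)
    print(f"{resolution}: GRE cost per frame is {(1 - rel) * 100:.1f}% lower")
# 1080p: 8.3% lower, 1440p: 10.1% lower, 4K: 12.7% lower
```

This covers rasterization only; the picture shifts once upscaling and ray tracing results are factored in.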

Putting It All Together: Upscaling vs. No Upscaling Performance

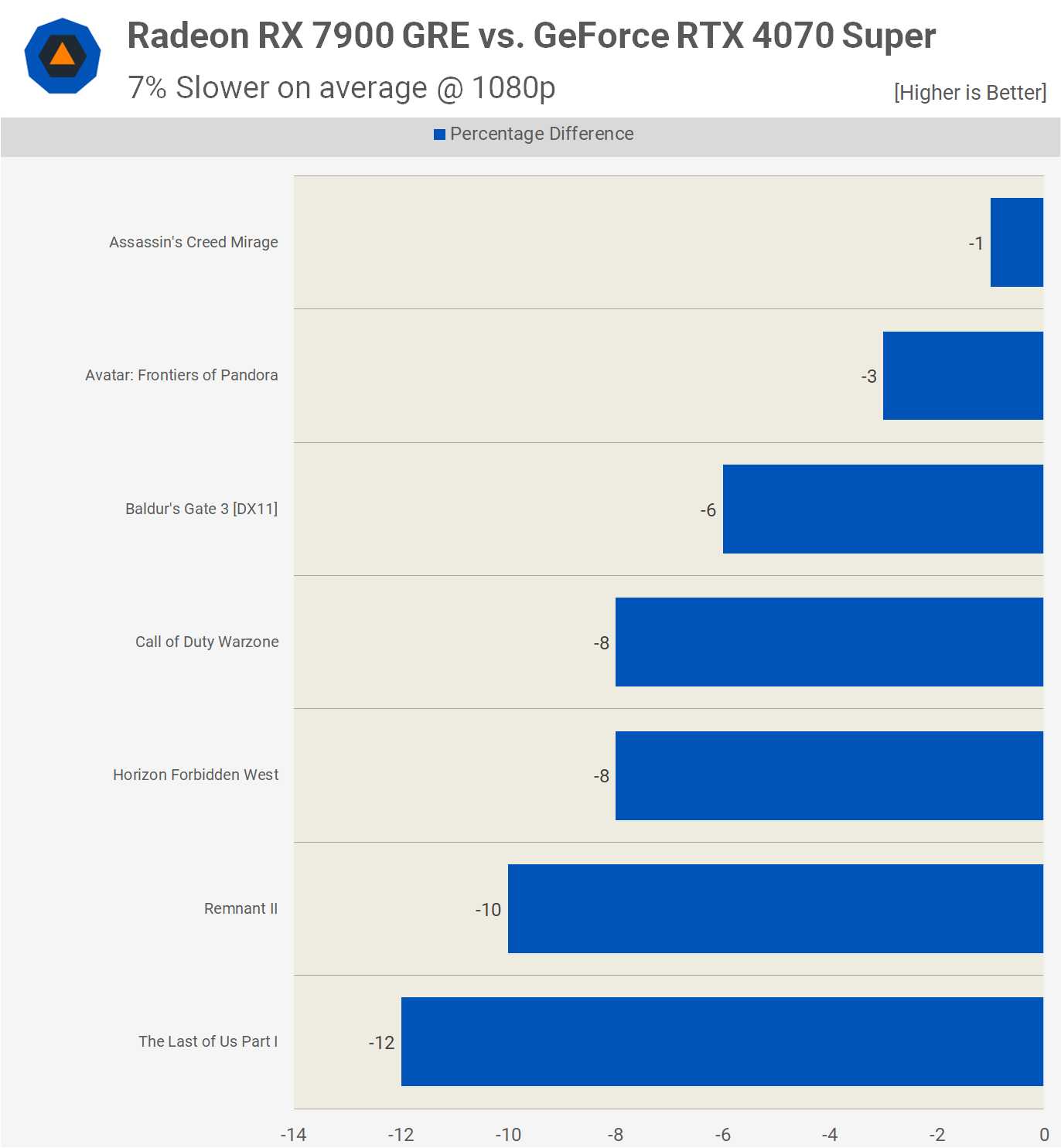

A quick look at the rasterization results with upscaling shows that the GRE was 7% slower on average at 1080p, though this comparison involves a smaller selection of games.

Interestingly, without upscaling, within the same selection of games, the GRE was just 3% slower, indicating that the RTX 4070 Super fared better at 1080p with upscaling enabled.

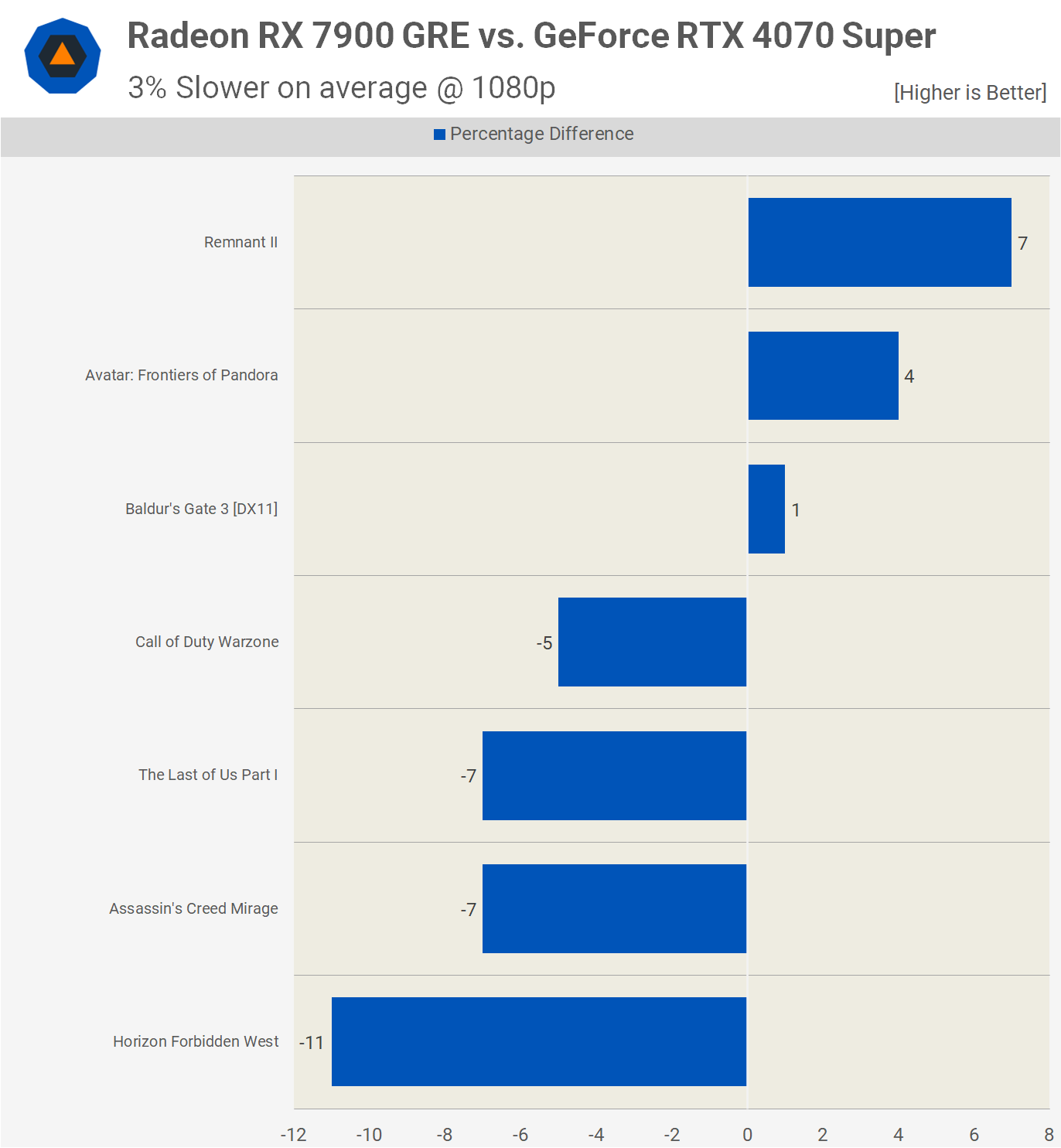

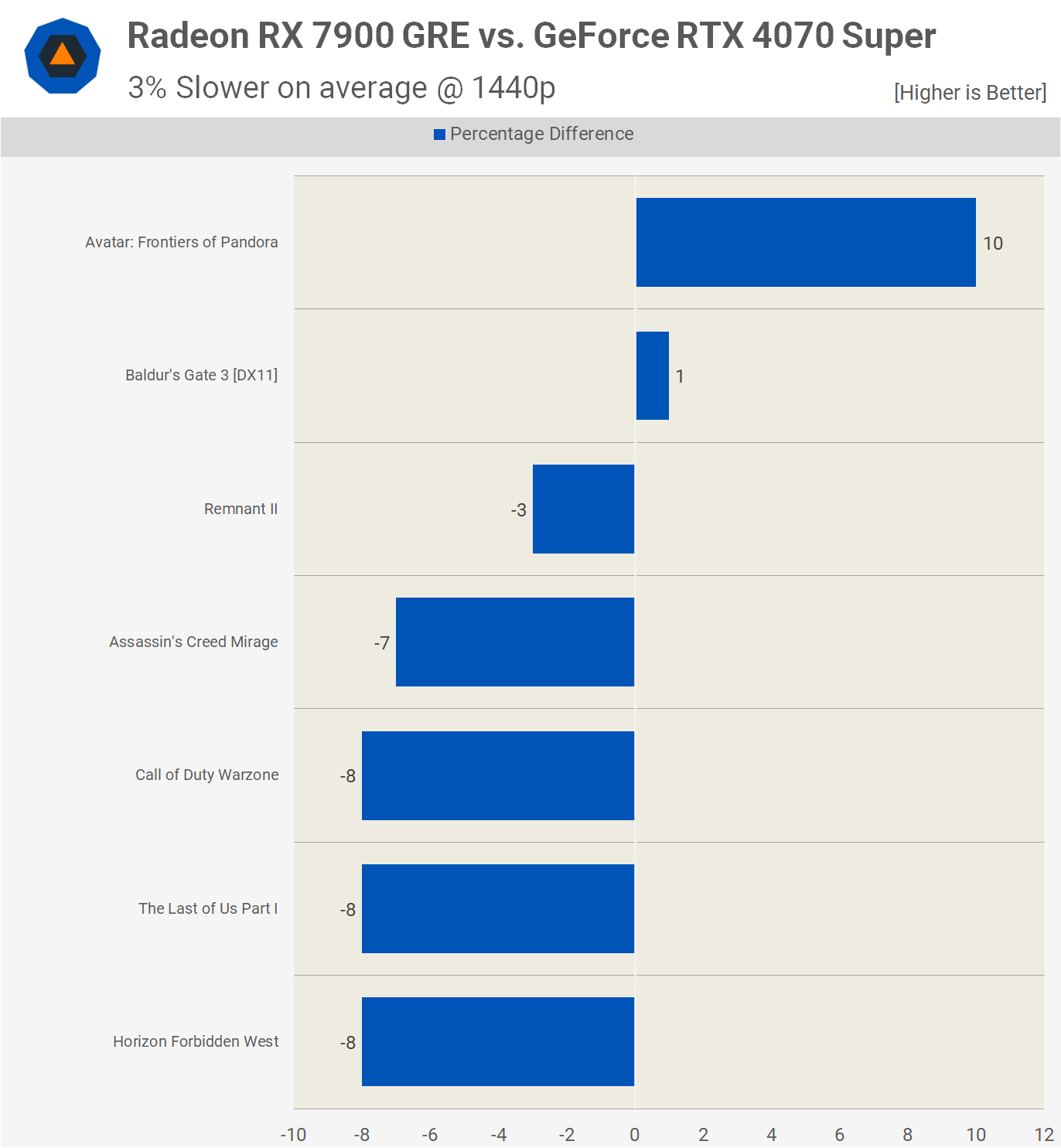

At 1440p with upscaling, the GRE was just 3% slower on average, indicating closely matched results.

However, without upscaling, in the same set of games at 1440p, the GRE was actually 2% faster, suggesting that the GeForce GPU benefits more from upscaling.

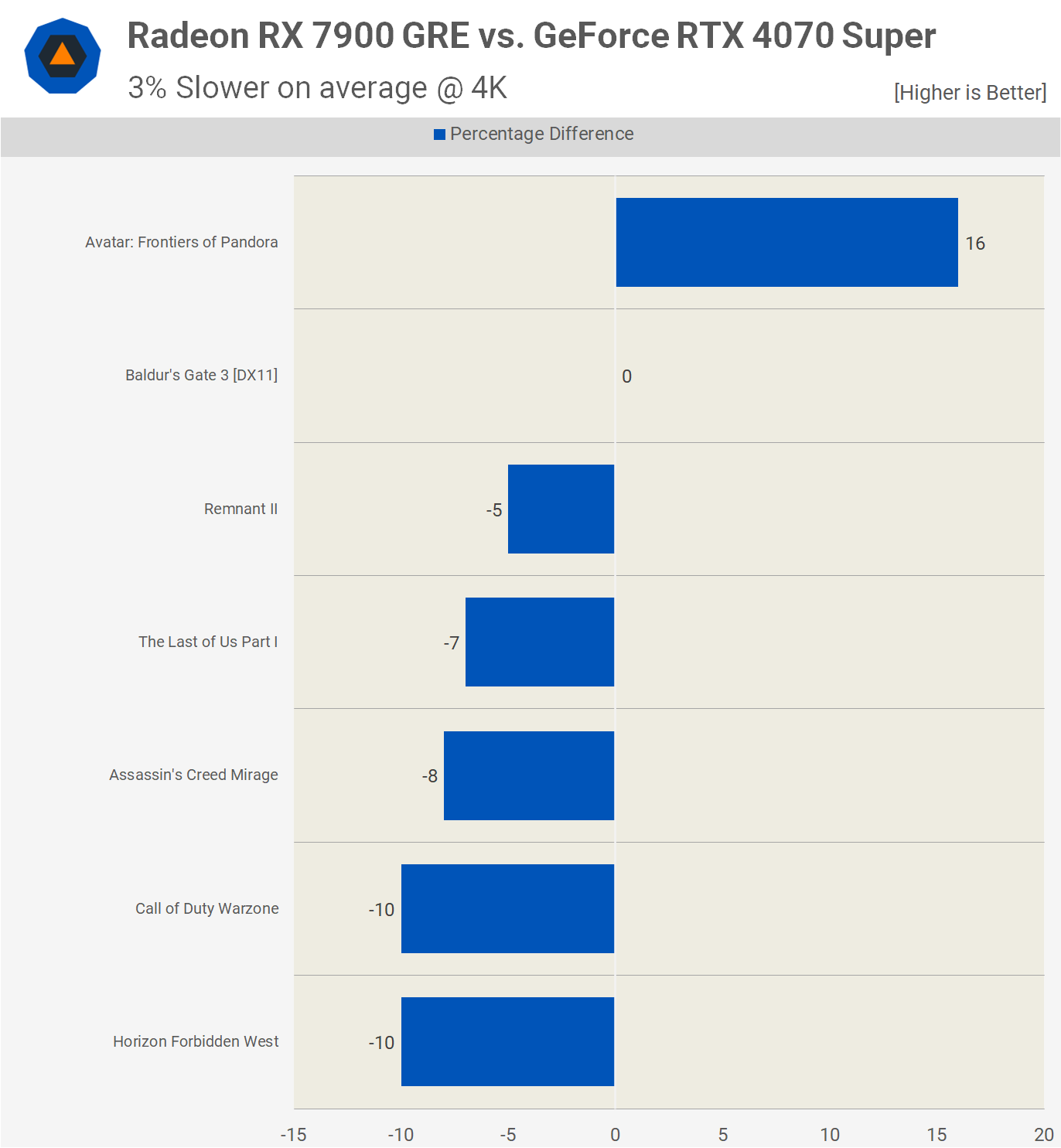

At 4K, the GRE was again 3% slower, ironically achieving its only win in Avatar.

This contrasts with the 6% advantage it would have had without upscaling.

Putting It All Together: Ray Tracing Performance

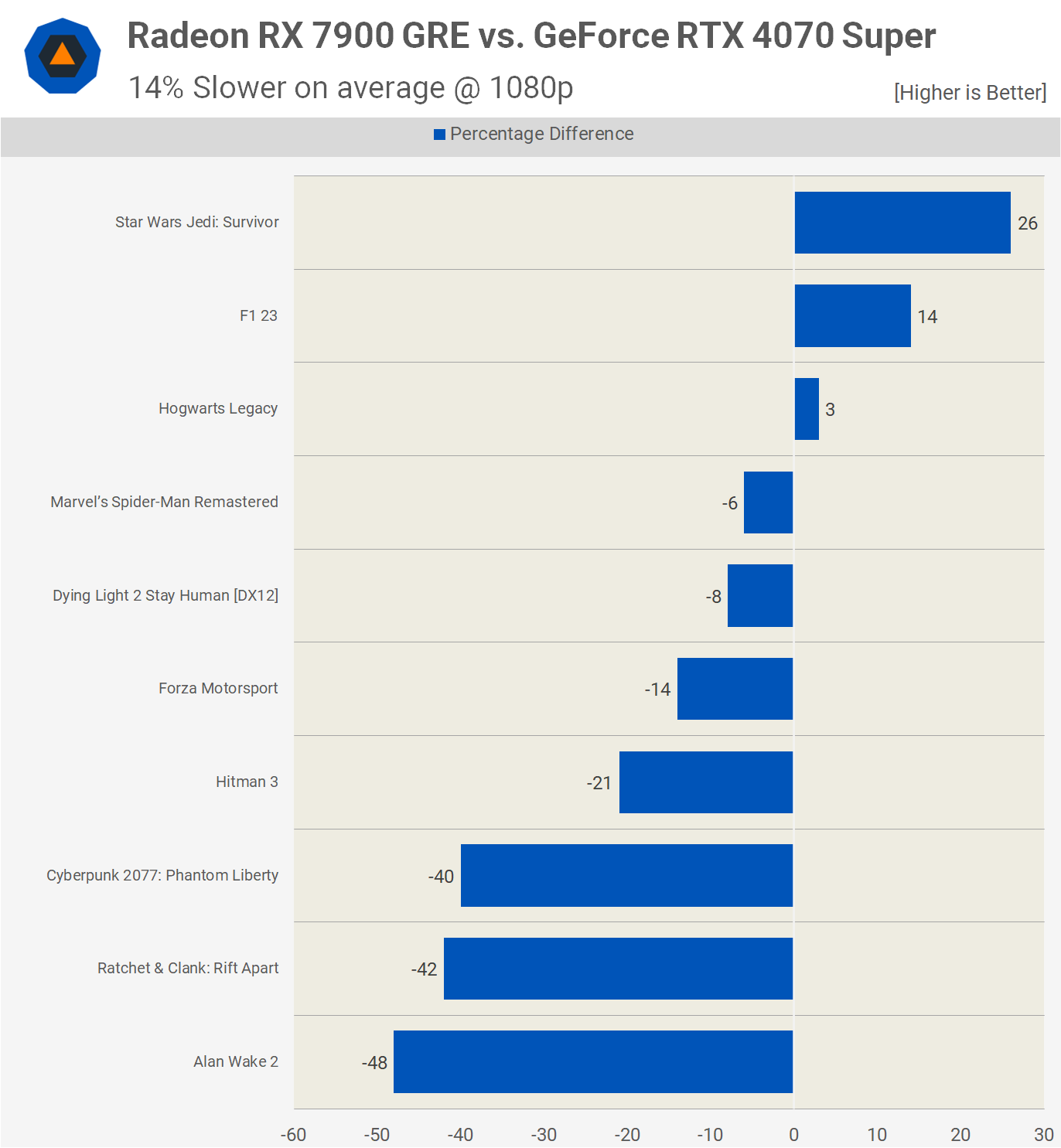

Now, let’s check out the ray tracing results, starting with 1080p. On average, the Radeon 7900 GRE was 14% slower, with significant disparities on a per-game basis. All major advantages were in favor of the RTX 4070 Super, particularly in Cyberpunk, Ratchet & Clank, and Alan Wake 2.

The only other game where the RT effects seem significant is Fortnite, though its competitive nature typically favors lower quality settings for optimal performance. Many of these games, including F1 23 and Resident Evil 4 as notable examples, show minimal visual difference with ray tracing enabled, primarily affecting the frame rate. This trend is common across most ray tracing-supported games, with few exceptions demonstrating a substantial improvement.

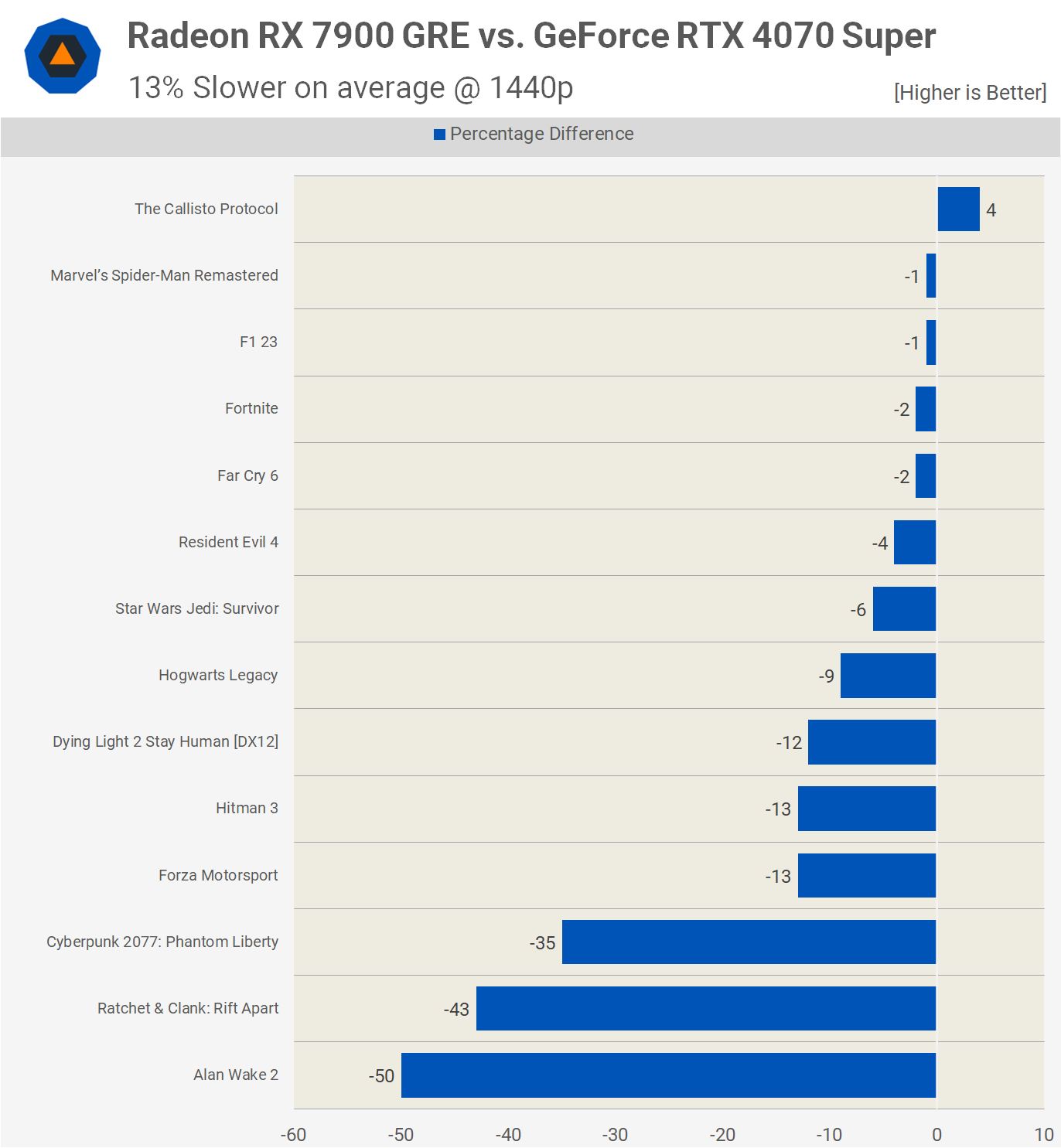

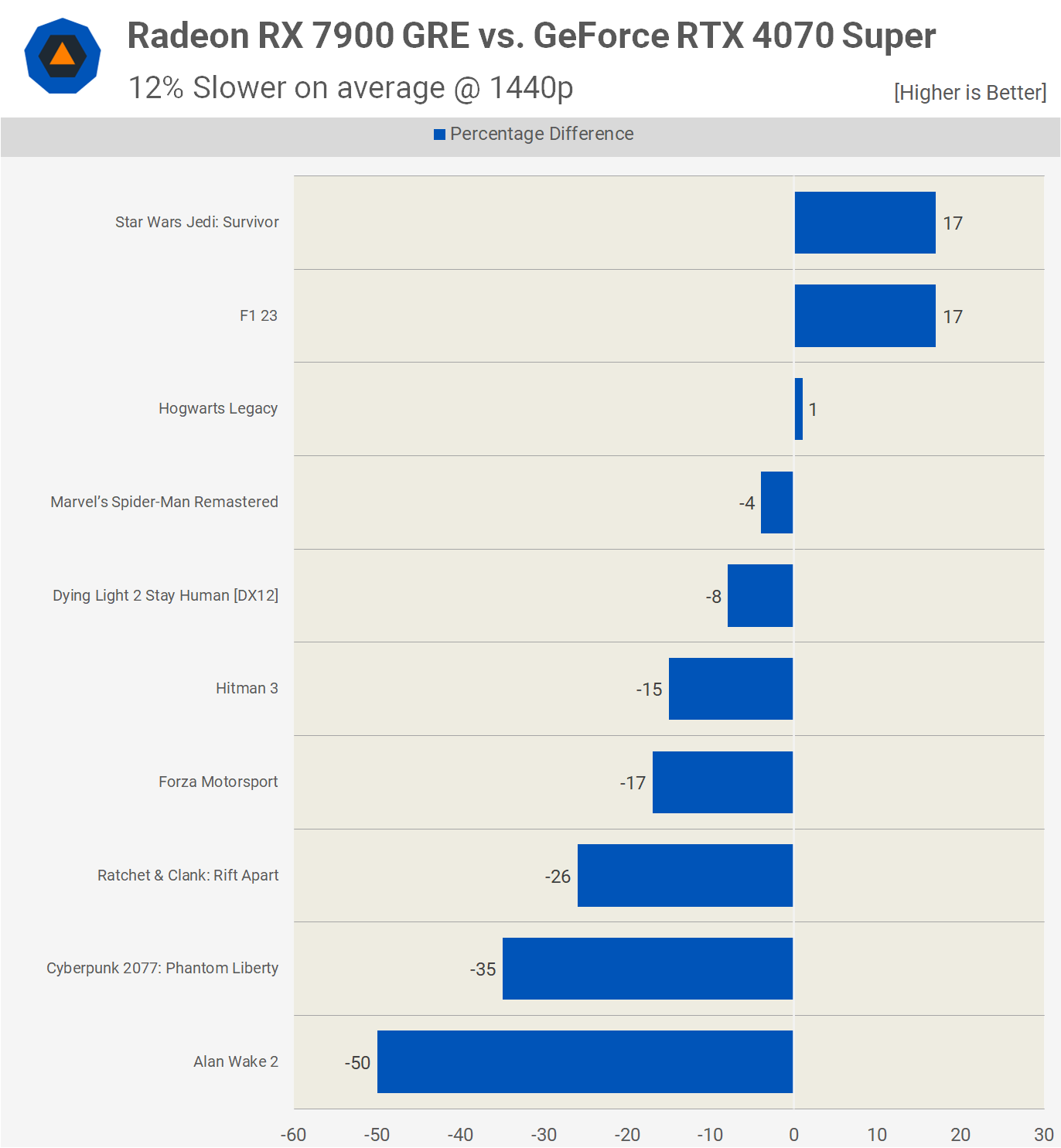

Advancing to 1440p, the 7900 GRE was 13% slower on average. The key titles where the Radeon GPU significantly underperformed were Ratchet & Clank, Cyberpunk, and Alan Wake 2, which were only viable at 1080p without upscaling.

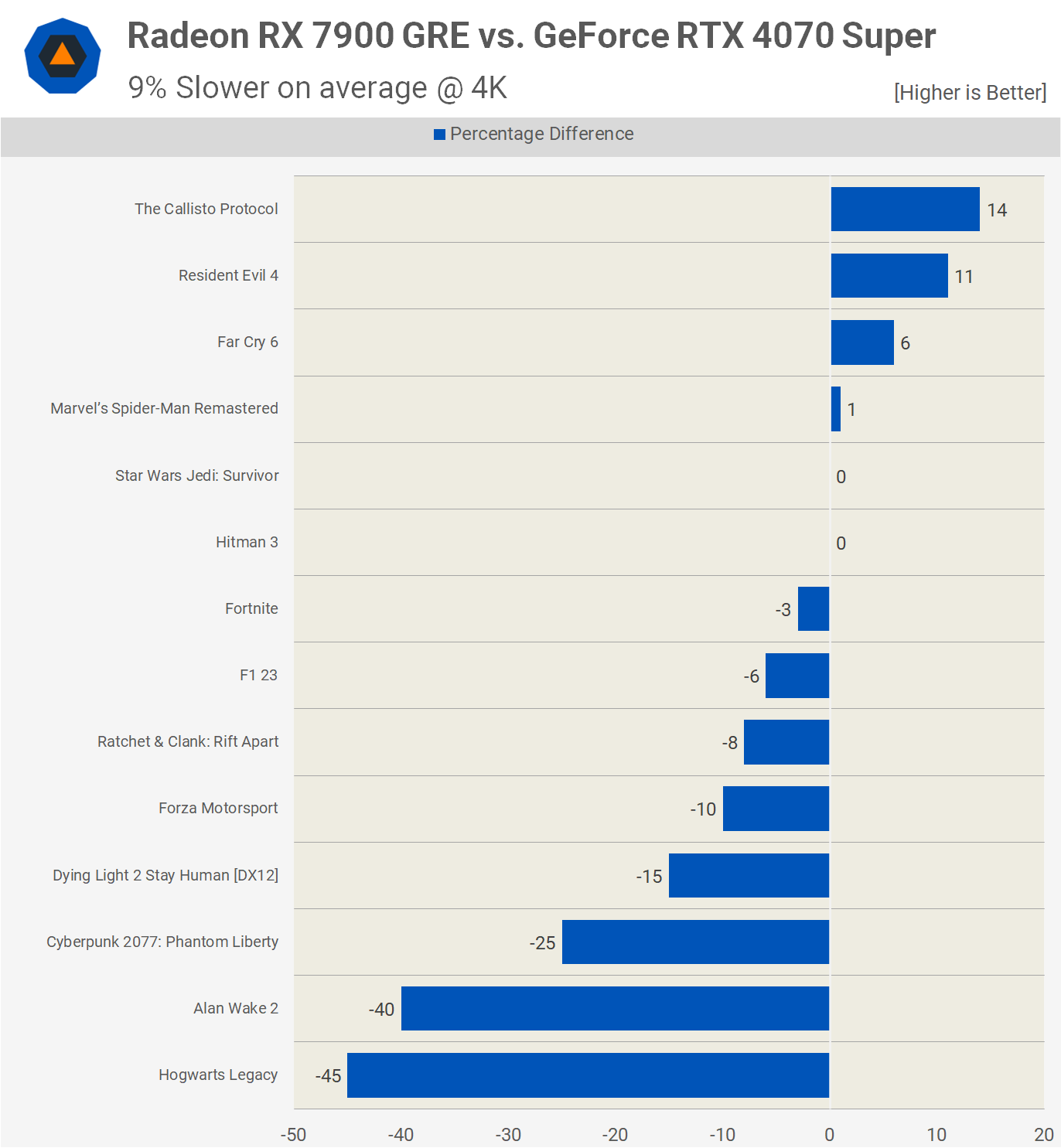

At 4K, with ray tracing enabled, few games achieved acceptable frame rates, with Hogwarts Legacy, Alan Wake 2, and Cyberpunk 2077 being particularly poor performers. The GRE was 9% slower overall, but given the generally low frame rates, this comparison is somewhat irrelevant.

With upscaling enabled for ray tracing data, the 7900 GRE was 14% slower on average at 1080p. In several cases, it was slower by a 20% margin, and in three instances, the gap widened to 40%.

At 1440p, the GRE was 12% slower on average. For many of these titles, performance, even with upscaling, was unsatisfactory on the Radeon GPU.

At the 4K resolution, ray tracing performance, even with upscaling, was not viable. For example, the 4070 Super averaged just 35 fps in Cyberpunk, 21 fps in Alan Wake 2, and 41 fps in Ratchet & Clank.

What We Learned: Ray Tracing, Future Proofing, and Performance Implications

That was a lot of data, and we can be sure we lost a few of you along the way, but those who made it here with or without skipping to this point using the article index, well done. Now the question is, which one of these GPUs should you buy, and why?

Starting with rasterization, overall performance was much the same at 1080p and 1440p between these two GPUs, with a 6% advantage going the way of the Radeon 7900 GRE at 4K, which is nice considering it’s 8% cheaper and packs some extra VRAM.

So if you primarily care about raster performance and the GRE stands up well in the games you’re currently playing, it would be the better value option. That said, upscaling complicates things slightly. If you’re prone to employing upscaling, then the 4070 Super will close in on the GRE, and should do so with slightly better visuals based on our recent investigations into DLSS vs. FSR image quality.

Then, there’s ray tracing and our opinions here haven’t changed since the introduction of the current generation GPUs. That is, if you care about ray tracing performance, you’re best off ignoring Radeon GPUs. But opinions aside, the GeForce RTX 4070 Super was clearly superior in titles that are known to best showcase the technology, such as Alan Wake 2 and Cyberpunk.

In the case of the RTX 4070 Super, in order to play these titles at 1440p using ray tracing you will require upscaling and even then performance isn’t always great.

Alan Wake 2, for example, played at just 42 fps, which you could argue is enough for that title, but it still felt a bit janky to us. Cyberpunk was much better at 68 fps, certainly not amazing, and I’d personally rather play with the ray tracing effects dialed down for north of 100 fps, but if you’re a “single player visual buff” then it might be fine. Ratchet & Clank was quite good at 76 fps, and we’d say for that particular title that’s a good frame rate.

Outside of those titles, we’re not sure how many other ray tracing enabled games there are where it’s worth using it. We’d need to invest some time into an industry wide investigation to determine just how many titles actually offer worthwhile ray tracing effects. The list of ray tracing supported games is massive, but in our experience it would seem like 80% or so of those titles just shouldn’t bother offering the option, as all it does is smash your frame rate with no real noticeable visual improvement.

But maybe we’re wrong here, and this is why we want to spend a good amount of time this year analyzing what’s on offer. As a side note, we often see readers commenting that they would purchase the GeForce GPU as its ray tracing support makes it more future-proof, but that’s simply not true. Instead, buy the RTX 4070 Super to play what’s on offer now, not what might be on offer in a few years, because chances are it won’t be powerful enough to play those titles with any significant degree of RT effects enabled.

For example, the usefulness of an RTX 2060 Super in today’s best RT titles is questionable and while there are those that will argue that with the right settings it works, there’s no denying it’s a highly compromised experience, and you’ve got to be pretty comfortable with 30 fps gaming.

So how much value gamers should place on ray tracing, even today, 5 years after the release of the GeForce 20 series, is hard to say, and we think that’s a problem. But to simplify the issue, if ray tracing support is important to you, buy a GeForce GPU.

The only other noteworthy consideration in this matchup is VRAM capacity. The GeForce RTX 4070 Super comes with just 12GB for $600, which is weak. At minimum it should have been armed with 16GB like the 7900 GRE. How important this will be moving forward is difficult to gauge, but it’s likely to become a factor if you’re buying today in the hope of keeping the card for the next 3-4 years.

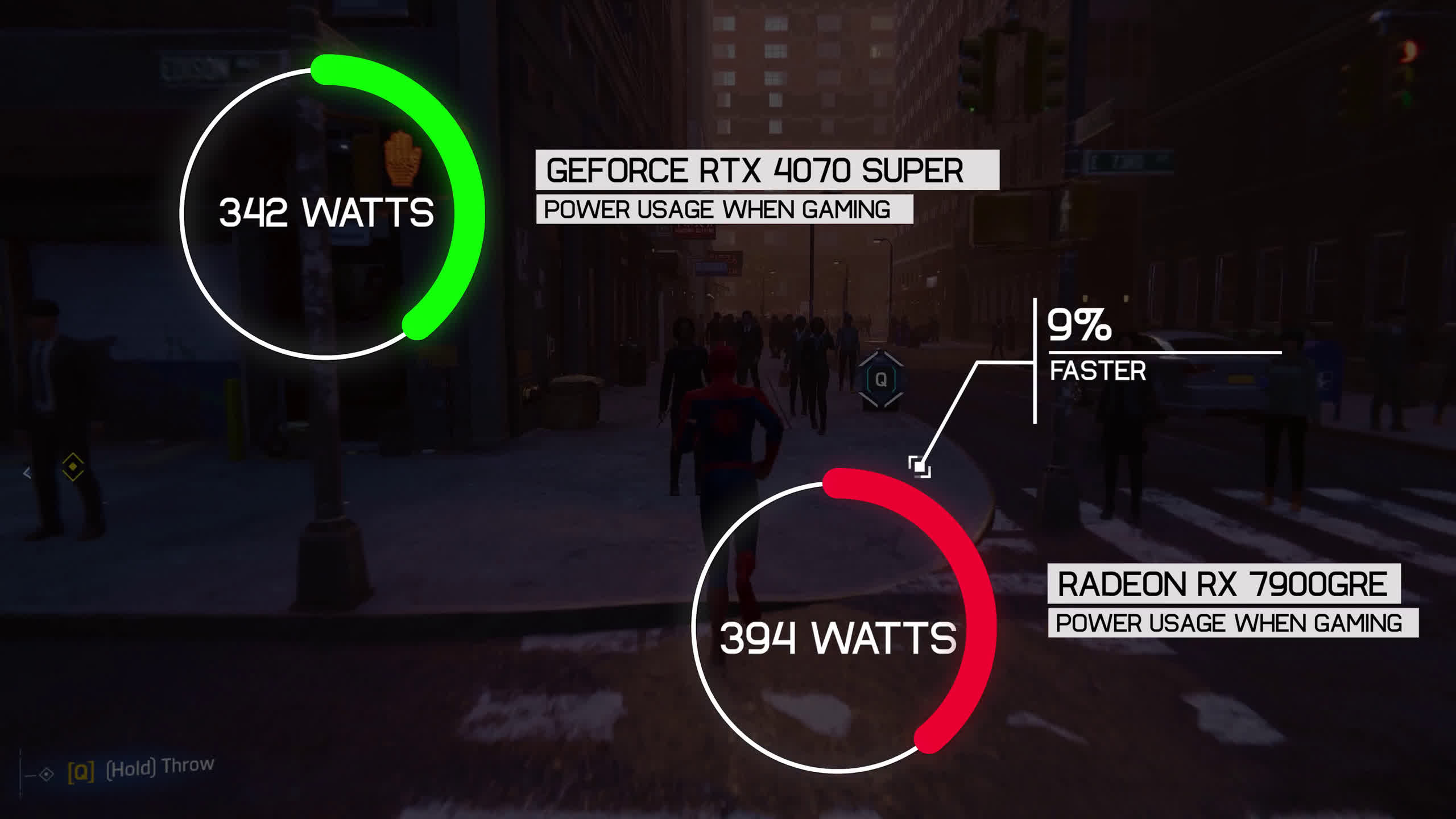

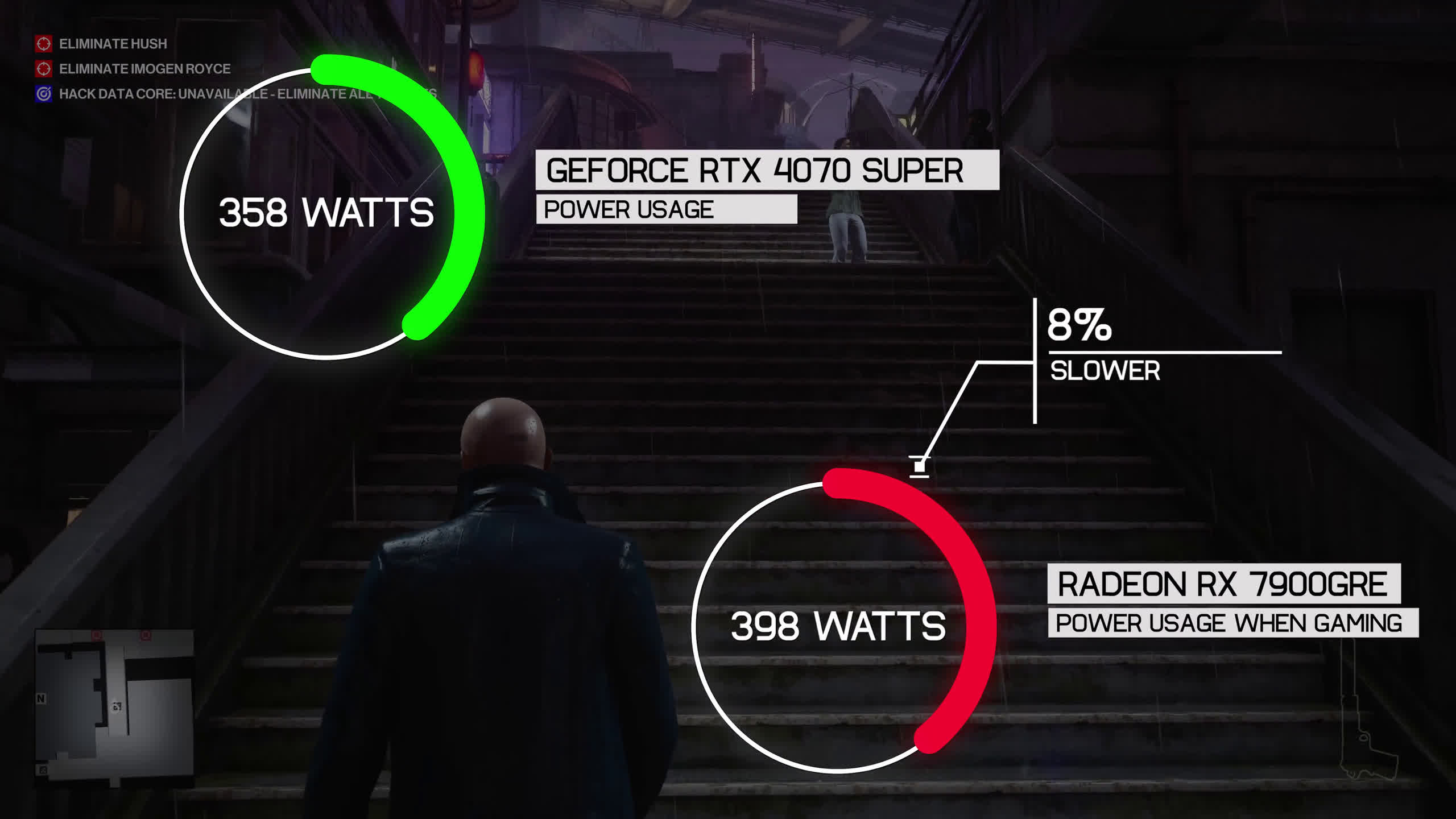

As a side note, if you care about power consumption, the RTX 4070 Super is generally more power efficient, reducing total system usage by as much as 50 watts when gaming, which was a 13% power saving in our test system when playing Spider-Man, though the GRE was also 9% faster. That said, it also increased total system power usage by 11% in Hitman 3 and was 8% slower. So the GeForce GPU is certainly more power efficient; it’s just not a significant factor in our opinion.
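The Spider-Man figures can be folded into a single performance-per-watt ratio. This is only a rough sketch: it uses total system power rather than GPU-only power, and it assumes the 13% saving and the 9% GRE lead were measured under the same conditions, which the text does not confirm. The function name is illustrative.

```python
def perf_per_watt_ratio(perf_a_over_b: float, power_a_over_b: float) -> float:
    """Performance per watt of system A relative to system B."""
    return perf_a_over_b / power_a_over_b


# 4070 Super relative to 7900 GRE in Spider-Man (figures from the text)
super_perf = 1 / 1.09    # GRE was 9% faster
super_power = 1 - 0.13   # 4070 Super system drew 13% less power

ratio = perf_per_watt_ratio(super_perf, super_power)
print(f"4070 Super system perf per watt: {(ratio - 1) * 100:+.1f}% vs GRE")
```

Under those assumptions the GeForce system comes out around 5% ahead per watt in this title, which is an advantage but a modest one.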

Of course, you can tinker with both models, either overclocking or undervolting, and in our experience the 7900 GRE responds the best to either method. So if you are going to fine-tune your graphics card, you might find even more value with the Radeon GPU.

Finally, we should probably just touch on frame generation, as we didn’t test this today, and it’s our opinion that it doesn’t belong on benchmark graphs. It’s a frame smoothing technology, not an FPS boosting technology, and it’s been poorly marketed by Nvidia and AMD to mislead gamers. Both companies now support frame generation, and we believe Nvidia offers the superior version at present, so that is to say Nvidia currently has the best frame smoothing technology.

At the end of the day, the Radeon 7900 GRE isn’t super compelling for just $50 off. It’s an 8% discount for similar raster performance, much worse RT performance in the titles where we think it counts, inferior upscaling technology, and weaker power efficiency. The only real ticks other than the $50 discount are the slightly better 4K results and the extra 4GB of VRAM.

But you could go either way, and AMD’s market share doesn’t put them in a position where they can afford for potential buyers to just go with the popular option.